C. Garcia

Satellogic Inc

IQUAFLOW: A new framework to measure image quality

Oct 24, 2022

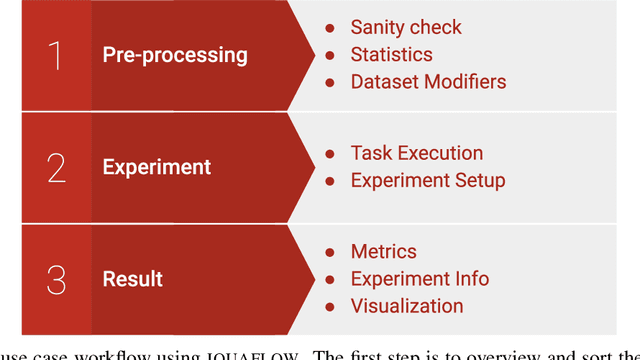

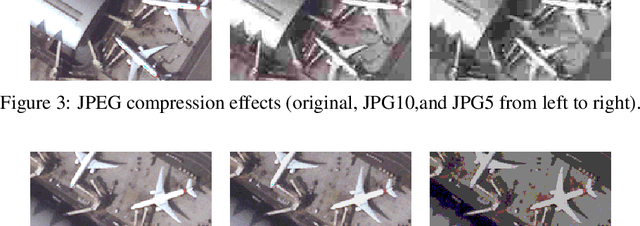

Abstract: IQUAFLOW is a new image quality framework that provides a set of tools to assess image quality. Users can add custom metrics, which are easily integrated. Furthermore, iquaflow can measure quality by using the performance of AI models trained on the images as a proxy. This also makes it easy to study how performance degrades under several modifications of the original dataset, for instance with images reconstructed after different levels of lossy compression. Satellite images are an example use case, since they are commonly compressed before being downloaded to the ground. In this situation, the optimization problem consists of finding the smallest images that still provide sufficient quality to meet the required performance of the deep learning algorithms. A study with iquaflow is therefore well suited to such a case. All of this is wrapped in MLflow, an interactive tool used to visualize and summarize the results. This document describes different use cases and provides links to their respective repositories. To ease the creation of new studies, we include a cookie-cutter repository. The source code, issue tracker and aforementioned repositories are all hosted on GitHub: https://github.com/satellogic/iquaflow.
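The optimization problem named in the abstract (the smallest images that still meet a required model performance) can be illustrated with a minimal sketch. It does not use the iquaflow API; the function name `smallest_acceptable`, the `(size_kb, score)` tuples and the numbers in the usage example are all hypothetical and only show the selection step once each compression level has been evaluated.

```python
from typing import Optional

# Hypothetical record: (average image size in kB, score of a model trained on
# the images reconstructed at that compression level). Not iquaflow output.
Result = tuple[float, float]


def smallest_acceptable(results: list[Result], min_score: float) -> Optional[Result]:
    """Return the smallest-size result whose score is at least min_score.

    Returns None when no compression level meets the requirement.
    """
    acceptable = [r for r in results if r[1] >= min_score]
    if not acceptable:
        return None
    # Among the acceptable levels, prefer the one with the smallest images.
    return min(acceptable, key=lambda r: r[0])


# Made-up measurements for three compression levels.
levels = [(100.0, 0.90), (50.0, 0.85), (20.0, 0.60)]
best = smallest_acceptable(levels, min_score=0.80)
```

With these made-up values, the 50 kB level is selected: it is the smallest one whose score stays above the 0.80 requirement.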

Low-complexity Approximate Convolutional Neural Networks

Jul 29, 2022

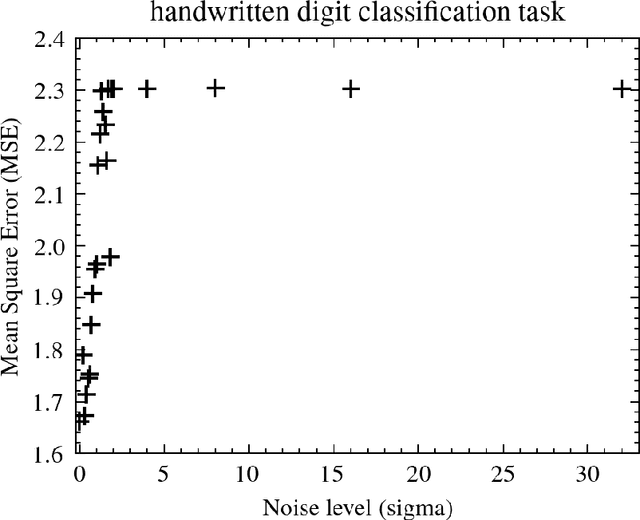

Abstract: In this paper, we present an approach for minimizing the computational complexity of trained Convolutional Neural Networks (ConvNets). The idea is to approximate all elements of a given ConvNet and replace the original convolutional filters and parameters (pooling and bias coefficients, and the activation function) with efficient approximations capable of extreme reductions in computational complexity. Low-complexity convolution filters are obtained through a binary (zero-one) linear programming scheme based on the Frobenius norm over sets of dyadic rationals. The resulting matrices allow for multiplication-free computations requiring only addition and bit-shifting operations. Such low-complexity structures pave the way for low-power, efficient hardware designs. We applied our approach to three use cases of different complexity: (i) a "light" but efficient ConvNet for face detection (with around 1000 parameters); (ii) another one for handwritten digit classification (with more than 180000 parameters); and (iii) a significantly larger ConvNet: AlexNet with $\approx$1.2 million matrices. We evaluated the overall performance on the respective tasks for different levels of approximation. In all considered applications, very low-complexity approximations were derived that maintain almost the same classification performance.

* 13 pages, 4 figures, 8 tables
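
To make the "multiplication-free" claim concrete, here is a minimal sketch of a dot product with dyadic-rational weights that uses only additions and bit shifts. It is not the paper's method: plain nearest-value rounding stands in for the binary linear programming scheme, and the names `to_dyadic`, `shift_add_mul` and `dyadic_dot` are hypothetical. Inputs are assumed to be integers, as they would be in fixed-point hardware.

```python
def to_dyadic(weights, bits=3):
    """Round each weight to the nearest k / 2**bits and return the integer k.

    Assumption: simple rounding replaces the paper's linear programming step.
    """
    return [round(w * (1 << bits)) for w in weights]


def shift_add_mul(x, k):
    """Compute x * k for integers using only shifts, additions and negation."""
    negative = k < 0
    k = abs(k)
    acc = 0
    shift = 0
    while k:
        if k & 1:               # this power of two is present in k
            acc += x << shift   # add x * 2**shift
        k >>= 1
        shift += 1
    return -acc if negative else acc


def dyadic_dot(xs, numerators):
    """Dot product of integer inputs with dyadic numerators.

    The result is scaled by 2**bits; in fixed-point hardware the final
    division is a right shift.
    """
    total = 0
    for x, k in zip(xs, numerators):
        total += shift_add_mul(x, k)
    return total


ks = to_dyadic([0.3, -0.55, 0.125], bits=3)   # numerators over 8
scaled = dyadic_dot([5, 7, -2], ks)           # value is scaled / 8
```

Here the weights become 2/8, -4/8 and 1/8, so the shift-and-add result is -20/8 = -2.5, against an exact value of -2.6 for the unrounded weights. The gap between the two is the approximation error that the paper trades against complexity.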
