Bruno Roy

FunPhase: A Periodic Functional Autoencoder for Motion Generation via Phase Manifolds

Dec 10, 2025

Abstract:Learning natural body motion remains challenging due to the strong coupling between spatial geometry and temporal dynamics. Embedding motion in phase manifolds, latent spaces that capture local periodicity, has proven effective for motion prediction; however, existing approaches lack scalability and remain confined to specific settings. We introduce FunPhase, a functional periodic autoencoder that learns a phase manifold for motion and replaces discrete temporal decoding with a function-space formulation, enabling smooth trajectories that can be sampled at arbitrary temporal resolutions. FunPhase supports downstream tasks such as super-resolution and partial-body motion completion, generalizes across skeletons and datasets, and unifies motion prediction and generation within a single interpretable manifold. Our model achieves substantially lower reconstruction error than prior periodic autoencoder baselines while enabling a broader range of applications and performing on par with state-of-the-art motion generation methods.
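As a rough illustration of what sampling a trajectory "at arbitrary temporal resolutions" can mean, the sketch below evaluates a single periodic latent channel as a continuous function of time. The sinusoidal parameterization (amplitude, frequency, offset, phase shift) and every function name here are assumptions made for illustration; the abstract does not specify FunPhase's actual decoder.

```python
import math


def linspace(start, stop, count):
    """Return `count` evenly spaced timestamps from start to stop, inclusive."""
    step = (stop - start) / (count - 1)
    return [start + i * step for i in range(count)]


def sample_phase_channel(amplitude, frequency, offset, phase_shift, times):
    """Evaluate one hypothetical periodic channel at arbitrary timestamps.

    Assumed form: amplitude * sin(2*pi*(frequency*t - phase_shift)) + offset.
    Because the channel is a function of continuous time, the caller picks
    the sampling rate instead of the model fixing a frame count.
    """
    return [
        amplitude * math.sin(2.0 * math.pi * (frequency * t - phase_shift)) + offset
        for t in times
    ]


# The same channel sampled at two temporal resolutions over one second.
coarse = sample_phase_channel(1.0, 1.0, 0.0, 0.0, linspace(0.0, 1.0, 5))
fine = sample_phase_channel(1.0, 1.0, 0.0, 0.0, linspace(0.0, 1.0, 17))
```

Every fourth sample of `fine` falls on the same timestamp as a sample of `coarse`, so the two lists agree there; the extra samples in `fine` are simply additional evaluations of the same underlying function, which is the sense in which a function-space output supports super-resolution without retraining.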

A Scalable Attention-Based Approach for Image-to-3D Texture Mapping

Sep 05, 2025

Abstract:High-quality textures are critical for realistic 3D content creation, yet existing generative methods are slow, rely on UV maps, and often fail to remain faithful to a reference image. To address these challenges, we propose a transformer-based framework that predicts a 3D texture field directly from a single image and a mesh, eliminating the need for UV mapping and differentiable rendering, and enabling faster texture generation. Our method integrates a triplane representation with depth-based backprojection losses, enabling efficient training and faster inference. Once trained, it generates high-fidelity textures in a single forward pass, requiring only 0.2s per shape. Extensive qualitative, quantitative, and user preference evaluations demonstrate that our method outperforms state-of-the-art baselines on single-image texture reconstruction in terms of both fidelity to the input image and perceptual quality, highlighting its practicality for scalable, high-quality, and controllable 3D content creation.

UniMoGen: Universal Motion Generation

May 28, 2025

Abstract:Motion generation is a cornerstone of computer graphics, animation, gaming, and robotics, enabling the creation of realistic and varied character movements. A significant limitation of existing methods is their reliance on specific skeletal structures, which restricts their versatility across different characters. To overcome this, we introduce UniMoGen, a novel UNet-based diffusion model designed for skeleton-agnostic motion generation. UniMoGen can be trained on motion data from diverse characters, such as humans and animals, without the need for a predefined maximum number of joints. By dynamically processing only the necessary joints for each character, our model achieves both skeleton agnosticism and computational efficiency. Key features of UniMoGen include controllability via style and trajectory inputs, and the ability to continue motions from past frames. We demonstrate UniMoGen's effectiveness on the 100style dataset, where it outperforms state-of-the-art methods in diverse character motion generation. Furthermore, when trained on both the 100style and LAFAN1 datasets, which use different skeletons, UniMoGen achieves high performance and improved efficiency across both skeletons. These results highlight UniMoGen's potential to advance motion generation by providing a flexible, efficient, and controllable solution for a wide range of character animations.

Attention-Based Learning for Fluid State Interpolation and Editing in a Time-Continuous Framework

Jun 12, 2024

Abstract:In this work, we introduce FluidsFormer: a transformer-based approach for fluid interpolation within a continuous-time framework. By combining the capabilities of PITT and a residual neural network (RNN), we analytically predict the physical properties of the fluid state. This enables us to interpolate substep frames between simulated keyframes, enhancing the temporal smoothness and sharpness of animations. We demonstrate promising results for smoke interpolation and conduct initial experiments on liquids.

Neural Shape Diameter Function for Efficient Mesh Segmentation

Jun 14, 2023

Abstract:Partitioning a polygonal mesh into meaningful parts can be challenging. Many applications require decomposing such structures for further processing in computer graphics. In the last decade, several methods were proposed to tackle this problem, at the cost of intensive computational times. Recently, machine learning has proven to be effective for the segmentation task on 3D structures. Nevertheless, these state-of-the-art methods are often hardly generalizable and require dividing the learned model into several specific classes of objects to avoid overfitting. We present a data-driven approach leveraging deep learning to encode a mapping function prior to mesh segmentation for multiple applications. Our network reproduces a neighborhood map using our knowledge of the Shape Diameter Function (SDF) method using similarities among vertex neighborhoods. Our approach is resolution-agnostic as we downsample the input meshes and query the full-resolution structure solely for neighborhood contributions. Using our predicted SDF values, we can inject the resulting structure into a graph-cut algorithm to generate an efficient and robust mesh segmentation while considerably reducing the required computation times.

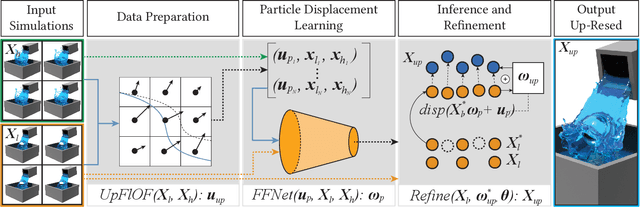

Neural UpFlow: A Scene Flow Learning Approach to Increase the Apparent Resolution of Particle-Based Liquids

Jun 09, 2021

Abstract:We present a novel up-resing technique for generating high-resolution liquids based on scene flow estimation using deep neural networks. Our approach infers and synthesizes small- and large-scale details solely from a low-resolution particle-based liquid simulation. The proposed network leverages neighborhood contributions to encode inherent liquid properties throughout convolutions. We also propose a particle-based approach to interpolate between liquids generated from varying simulation discretizations using a state-of-the-art bidirectional optical flow solver method for fluids in addition to a novel key-event topological alignment constraint. In conjunction with the neighborhood contributions, our loss formulation allows the inference model throughout epochs to reward important differences in regard to significant gaps in simulation discretizations. Even when applied in an untested simulation setup, our approach is able to generate plausible high-resolution details. Using this interpolation approach and the predicted displacements, our approach combines the input liquid properties with the predicted motion to infer semi-Lagrangian advection. We furthermore showcase how the proposed interpolation approach can facilitate generating large simulation datasets with a subset of initial condition parameters.
