Boris Rubinstein

It's a super deal -- train recurrent network on noisy data and get smooth prediction free

Jun 09, 2022

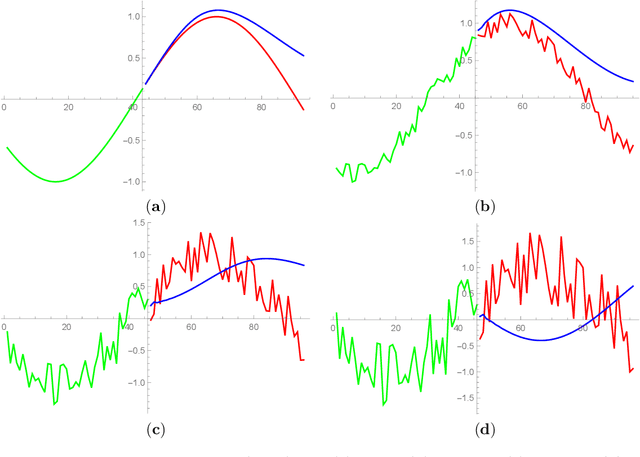

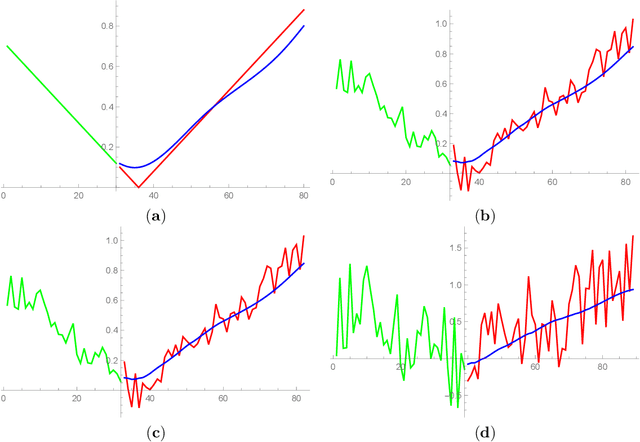

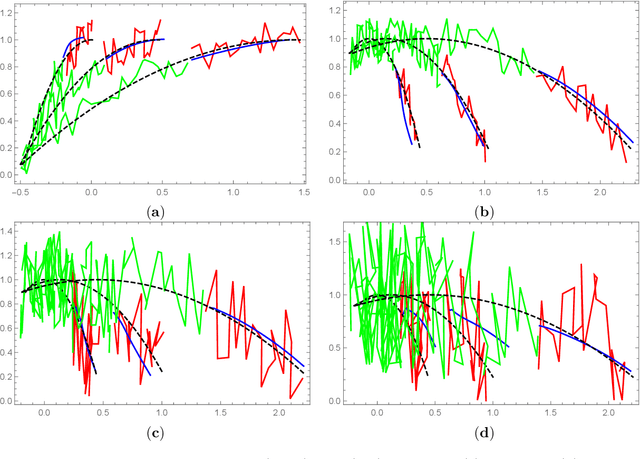

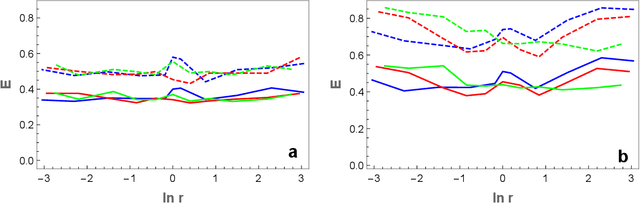

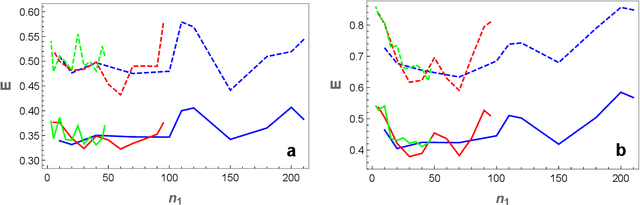

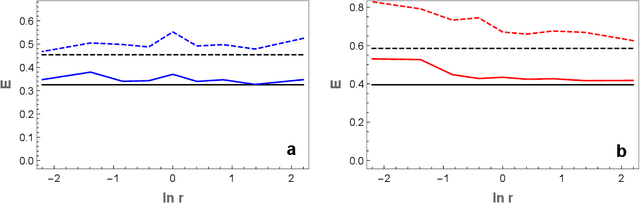

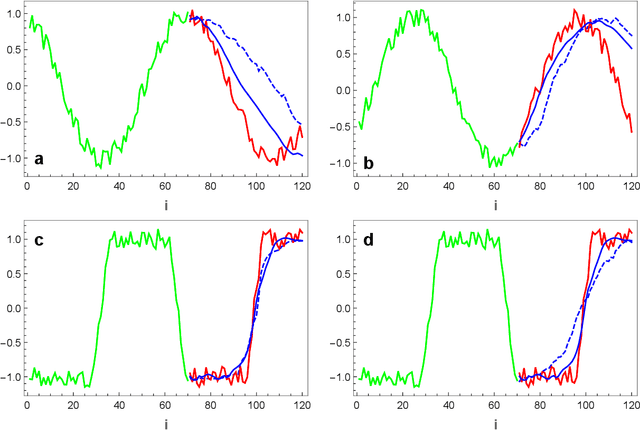

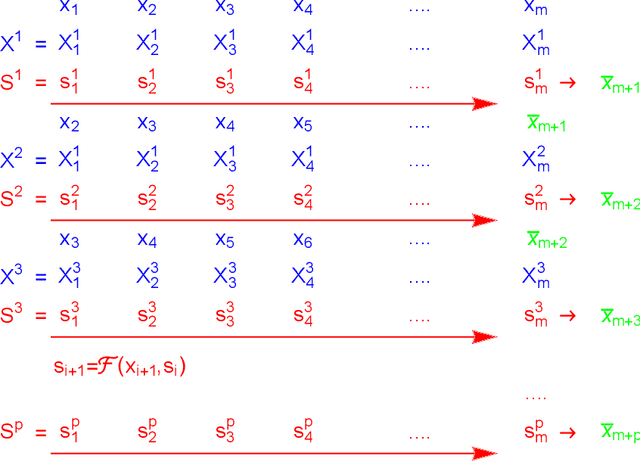

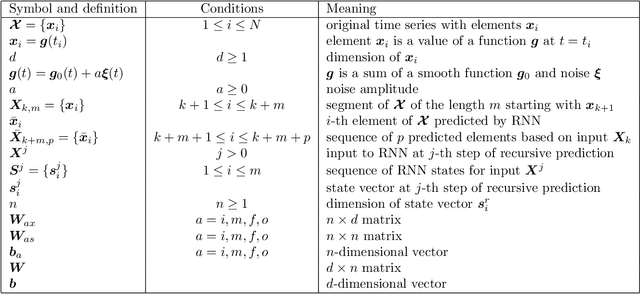

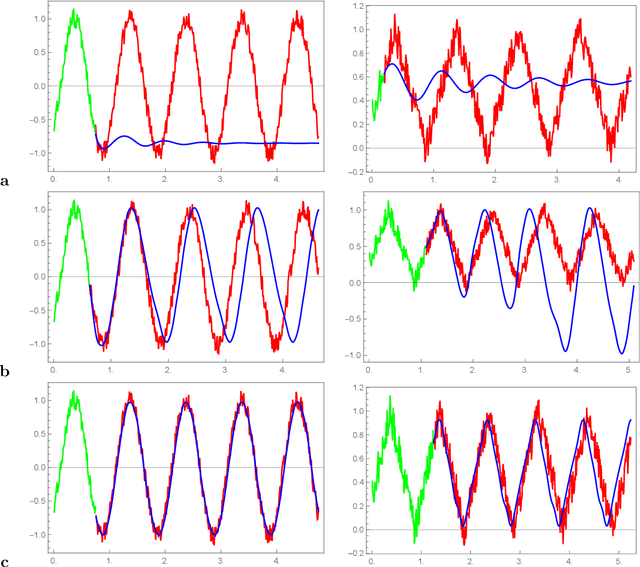

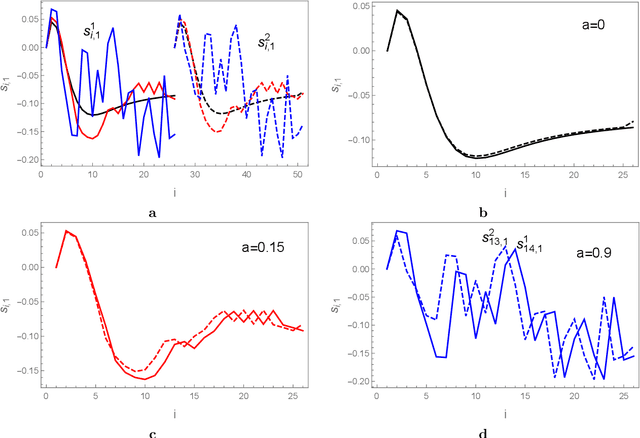

Abstract: Recent research demonstrates that predicting time series with predictive recurrent neural networks from noisy input generates a *smooth* anticipated trajectory. We examine the influence of the noise component, in both the training data sets and the input sequences, on the quality of network prediction. We propose and discuss an explanation of the observed noise compression in the predictive process. We also discuss the importance of this property of recurrent networks in the neuroscience context for the evolution of living organisms.
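Training data of the kind this abstract describes (a smooth signal corrupted by noise, cut into input windows and target continuations) can be sketched as follows. This is a minimal illustration under stated assumptions, not the paper's setup. The sine signal, the additive Gaussian noise, the window sizes, and the `roughness` measure (mean squared second difference, one simple way to put a number on "smooth") are all hypothetical choices.

```python
import math
import random

def noisy_sine(n_points, dt=0.1, noise_std=0.1, seed=0):
    """Return (clean, noisy) samples of sin(t); additive Gaussian noise is an assumption."""
    rng = random.Random(seed)
    clean = [math.sin(i * dt) for i in range(n_points)]
    noisy = [x + rng.gauss(0.0, noise_std) for x in clean]
    return clean, noisy

def roughness(series):
    """Hypothetical smoothness measure: mean squared second difference (small = smooth)."""
    if len(series) < 3:
        raise ValueError("need at least 3 points")
    diffs = [series[i + 1] - 2 * series[i] + series[i - 1]
             for i in range(1, len(series) - 1)]
    return sum(d * d for d in diffs) / len(diffs)

def sliding_windows(series, window, horizon):
    """Split a series into (input window, target continuation) training pairs."""
    pairs = []
    for start in range(len(series) - window - horizon + 1):
        pairs.append((series[start:start + window],
                      series[start + window:start + window + horizon]))
    return pairs

clean, noisy = noisy_sine(200)
print(f"roughness clean: {roughness(clean):.2e}, noisy: {roughness(noisy):.2e}")
pairs = sliding_windows(noisy, window=20, horizon=10)
print(len(pairs))  # 200 - 20 - 10 + 1 = 171
```

With a measure like this, the claim that a network trained on noisy data produces a smooth forecast can be checked by comparing the roughness of its predicted trajectory with that of the noisy input.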

On a novel training algorithm for sequence-to-sequence predictive recurrent networks

Jun 27, 2021

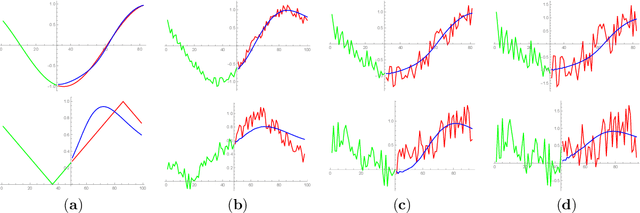

Abstract: Neural networks mapping sequences to sequences (seq2seq) have led to significant progress in machine translation and speech recognition. Their traditional architecture includes two recurrent networks (RNs) followed by a linear predictor. In this manuscript we analyze the corresponding algorithm and show that the parameters of the RNs of a well-trained predictive network are not independent of each other. This dependence can be used to significantly improve the network's effectiveness. Traditional seq2seq algorithms require a short-term memory whose size is proportional to the length of the predicted sequence. This requirement is quite difficult to implement in a neuroscience context. We present a novel memoryless algorithm for seq2seq predictive networks and compare it to the traditional one in the context of time series prediction. We show that the new algorithm is more robust and makes predictions with higher accuracy than the traditional one.
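The traditional architecture mentioned above can be sketched as a forward pass with two recurrent networks (an encoder and a decoder) followed by a linear predictor. This is a minimal illustration, not the paper's memoryless algorithm. The Elman-style `tanh` update, the decoder feeding back its own previous prediction (one common variant), the hidden size, and the random untrained weights are all assumptions. The growing `preds` buffer is one possible reading of the short-term memory that scales with the predicted length.

```python
import math
import random

def rand_matrix(rows, cols, rng, scale=0.5):
    """Uniform random weights in [-scale, scale] (untrained, for illustration only)."""
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]

def rnn_step(params, x, h):
    """Elman-style update h' = tanh(w_in * x + W_h @ h + b) for a scalar input x."""
    w_in, W_h, b = params
    return [math.tanh(w_in[i][0] * x + sum(W_h[i][j] * h[j] for j in range(len(h))) + b[i])
            for i in range(len(h))]

def seq2seq_predict(inputs, horizon, enc, dec, w_out, b_out):
    """Encoder RNN -> decoder RNN -> linear predictor, unrolled for `horizon` steps."""
    # Encoder: compress the whole input window into one hidden state.
    h = [0.0] * len(w_out)
    for x in inputs:
        h = rnn_step(enc, x, h)
    # Decoder: start from the encoder state and feed back its own previous
    # output (an assumed variant; others feed ground truth during training).
    preds = []  # grows linearly with the horizon
    y = inputs[-1]
    for _ in range(horizon):
        h = rnn_step(dec, y, h)
        y = sum(w * hi for w, hi in zip(w_out, h)) + b_out  # linear predictor
        preds.append(y)
    return preds

rng = random.Random(1)
H = 8  # hidden size, chosen arbitrarily
enc = (rand_matrix(H, 1, rng), rand_matrix(H, H, rng), [0.0] * H)
dec = (rand_matrix(H, 1, rng), rand_matrix(H, H, rng), [0.0] * H)
w_out = [rng.uniform(-0.5, 0.5) for _ in range(H)]
b_out = 0.0
window = [math.sin(0.1 * i) for i in range(20)]
preds = seq2seq_predict(window, horizon=10, enc=enc, dec=dec, w_out=w_out, b_out=b_out)
print(len(preds))  # 10
```

Encoder and decoder keep separate parameter tuples here, which is the "two RNs" layout. The abstract's observation that the two networks' parameters become dependent after training concerns trained weights, which this untrained sketch does not attempt to reproduce.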

A fast noise filtering algorithm for time series prediction using recurrent neural networks

Aug 12, 2020

Abstract: Recent research demonstrates that predicting time series with recurrent neural networks (RNNs) from noisy input generates a smooth anticipated trajectory. We examine the internal dynamics of RNNs and establish a set of conditions required for such behavior. Based on this analysis, we propose a new approximate algorithm and show that it significantly speeds up the predictive process without loss of accuracy.
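The abstract does not spell out the conditions or the approximate algorithm. As a generic illustration only, one standard way to probe an RNN's internal dynamics is to hold the input fixed and iterate the hidden-state update until it settles. If every row of the recurrent matrix has absolute sum below 1, the `tanh` update is a contraction in the max-norm, so a unique fixed point exists. The weights, the `settle` helper, and the tolerance below are hypothetical; this is not the paper's method.

```python
import math

def rnn_step(w_in, W_h, b, x, h):
    """Elman-style update h' = tanh(w_in * x + W_h @ h + b) for a scalar input x."""
    return [math.tanh(w_in[i] * x + sum(W_h[i][j] * h[j] for j in range(len(h))) + b[i])
            for i in range(len(h))]

def settle(w_in, W_h, b, x, h0, tol=1e-10, max_iter=1000):
    """Iterate the state map with input x held fixed until successive states agree within tol."""
    h = h0
    for k in range(1, max_iter + 1):
        h_next = rnn_step(w_in, W_h, b, x, h)
        if max(abs(a - c) for a, c in zip(h_next, h)) < tol:
            return h_next, k
        h = h_next
    raise RuntimeError("no convergence; the map may not be contractive")

# Hypothetical weights: each row of W_h has absolute sum 0.5 < 1, so the
# update shrinks state differences by at least half per step (tanh is 1-Lipschitz).
w_in = [0.5, -0.3]
W_h = [[0.3, -0.2], [0.1, 0.4]]
b = [0.1, 0.0]
x = 1.0
h_star, steps = settle(w_in, W_h, b, x, [0.0, 0.0])
print(steps, h_star)
```

Because a contraction shrinks state differences geometrically, a perturbation injected by one noisy input sample decays over subsequent steps. This is one intuitive link to the smoothing described above, not a result confirmed by the source.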
