Atsushi Noda

A Model of Causal Explanation on Neural Networks for Tabular Data

Dec 25, 2025

Abstract: The problem of explaining the results produced by machine learning methods continues to attract attention. Neural network (NN) models, along with gradient boosting machines, are expected to be utilized even in tabular data with high prediction accuracy. This study addresses the related issues of pseudo-correlation, causality, and combinatorial reasons for tabular data in NN predictors. We propose a causal explanation method, CENNET, and a new explanation power index using entropy for the method. CENNET provides causal explanations for predictions by NNs and uses structural causal models (SCMs) effectively combined with the NNs, although SCMs are usually not used as predictive models on their own in terms of predictive accuracy. We show that CENNET provides such explanations through comparative experiments with existing methods on both synthetic and quasi-real data in classification tasks.

* ©2025. This manuscript version is made available under the CC-BY-NC-ND 4.0 license https://creativecommons.org/licenses/by-nc-nd/4.0/

Practically Effective Adjustment Variable Selection in Causal Inference

Feb 04, 2025

Abstract: In the estimation of causal effects, one common method for removing the influence of confounders is to adjust for the variables that satisfy the back-door criterion. However, it is not always possible to uniquely determine sets of such variables. Moreover, real-world data is almost always limited, which means it may be insufficient for statistical estimation. Therefore, we propose criteria for selecting variables from a list of candidate adjustment variables along with an algorithm to prevent accuracy degradation in causal effect estimation. We initially focus on directed acyclic graphs (DAGs) and then outline specific steps for applying this method to completed partially directed acyclic graphs (CPDAGs). We also present and prove a theorem on causal effect computation possibility in CPDAGs. Finally, we demonstrate the practical utility of our method using both existing and artificial data.

* 20 pages, 8 figures

DNN Architecture for High Performance Prediction on Natural Videos Loses Submodule's Ability to Learn Discrete-World Dataset

Apr 16, 2019

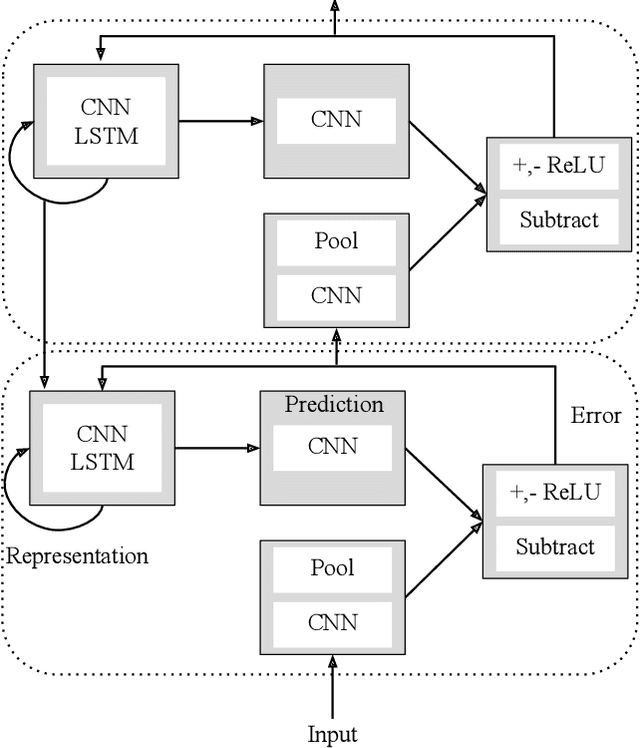

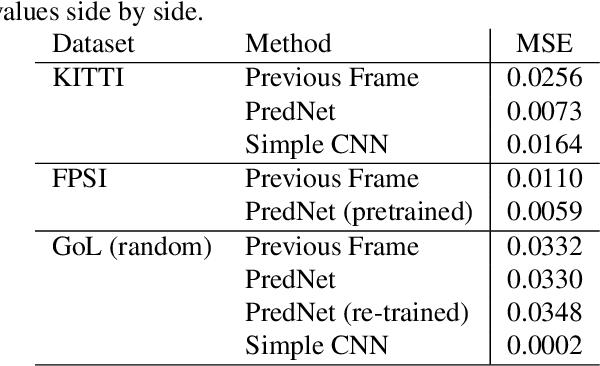

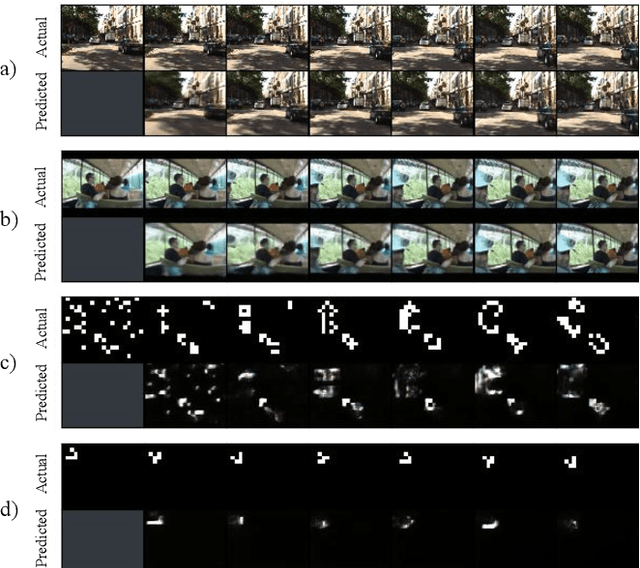

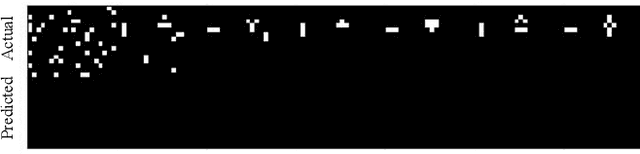

Abstract: Is cognition a collection of loosely connected functions tuned to different tasks, or can there be a general learning algorithm? If such a hypothetical general algorithm did exist, tuned to our world, could it adapt seamlessly to a world with different laws of nature? We consider the theory that predictive coding is such a general rule, and falsify it for one specific neural architecture known for high-performance predictions on natural videos and replication of human visual illusions: PredNet. Our results show that PredNet's high performance generalizes without retraining on a completely different natural video dataset. Yet PredNet cannot be trained to reach even mediocre accuracy on an artificial video dataset created with the rules of the Game of Life (GoL). We also find that a submodule of PredNet, a Convolutional Neural Network trained alone, reaches perfect accuracy on the GoL while being mediocre for natural videos, showing that PredNet's architecture itself is responsible for both the high performance on natural videos and the loss of performance on the GoL. Just as humans cannot predict the dynamics of the GoL, our results suggest that there might be a trade-off between high performance on sensory inputs with different sets of rules.
