Arunava Nag

COSMOS: A Data-Driven Probabilistic Time Series simulator for Chemical Plumes across Spatial Scales

May 28, 2025

Abstract: The development of robust odor navigation strategies for automated environmental monitoring applications requires realistic simulations of odor time series for agents moving across large spatial scales. Traditional approaches that rely on computational fluid dynamics (CFD) methods can capture the spatiotemporal dynamics of odor plumes, but are impractical for large-scale simulations due to their computational expense. On the other hand, puff-based simulations, although computationally tractable at large scales and capable of capturing the stochastic nature of plumes, fail to reproduce naturalistic odor statistics. Here, we present COSMOS (Configurable Odor Simulation Model over Scalable Spaces), a data-driven probabilistic framework that synthesizes realistic odor time series from spatial and temporal features of real datasets. COSMOS generates distributions of key statistical features, such as whiff frequency, duration, and concentration, that are similar to those observed in real data, while dramatically reducing computational overhead. By reproducing critical statistical properties across a variety of flow regimes and scales, COSMOS enables the development and evaluation of agent-based navigation strategies with naturalistic odor experiences. To demonstrate its utility, we compare odor-tracking agents exposed to CFD-generated plumes versus COSMOS simulations, showing that both the agents' odor experiences and their resulting behaviors are quite similar across the two conditions.
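Whiff statistics like those named above are usually computed by thresholding a concentration time series. The sketch below assumes a common convention that the abstract does not spell out: a whiff is a contiguous run of samples above a fixed concentration threshold, whiff frequency is the number of whiffs per unit time, and whiff concentration is the mean concentration within each whiff. The function name `whiff_statistics` and the threshold value are hypothetical; this only illustrates the kind of features being compared, not the COSMOS model itself.

```python
import numpy as np


def whiff_statistics(conc, dt, threshold):
    """Hypothetical helper: extract whiff features from an odor time series.

    conc      -- 1D array of concentration samples
    dt        -- sampling interval (s)
    threshold -- assumed detection threshold defining a whiff
    Returns (whiff frequency in whiffs/s, whiff durations in s,
             mean concentration of each whiff).
    """
    conc = np.asarray(conc, dtype=float)
    above = conc > threshold
    # Pad with False so every whiff has both a rising and a falling edge.
    padded = np.concatenate(([False], above, [False]))
    edges = np.diff(padded.astype(int))
    starts = np.flatnonzero(edges == 1)   # first sample of each whiff
    ends = np.flatnonzero(edges == -1)    # one past the last sample
    durations = (ends - starts) * dt
    mean_conc = np.array([conc[s:e].mean() for s, e in zip(starts, ends)])
    frequency = len(starts) / (len(conc) * dt)
    return frequency, durations, mean_conc


# Usage on a short synthetic trace sampled at 10 Hz.
conc = np.array([0, 0, 2, 3, 0, 0, 4, 0, 0, 0], dtype=float)
freq, dur, mean_c = whiff_statistics(conc, dt=0.1, threshold=1.0)
# 2 whiffs in 1.0 s -> 2.0 whiffs/s; durations 0.2 s and 0.1 s; means 2.5 and 4.0
```

Comparing the distributions of these three quantities between simulated and measured traces is one way to check whether a synthetic odor time series is naturalistic; the threshold choice strongly affects the results and would need to match across datasets.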

FLIVVER: Fly Lobula Inspired Visual Velocity Estimation & Ranging

Apr 10, 2020

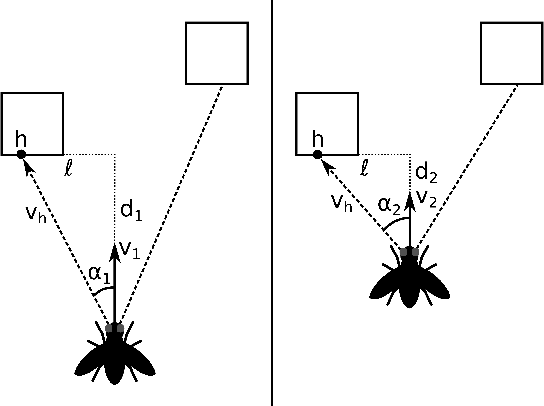

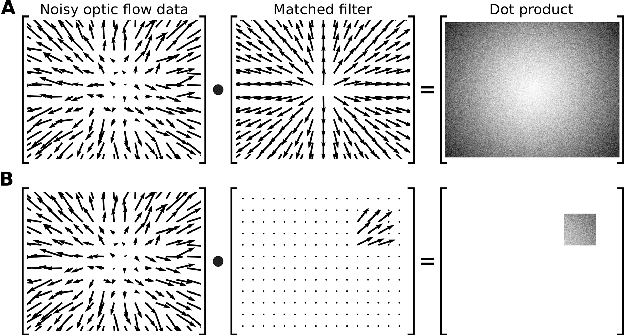

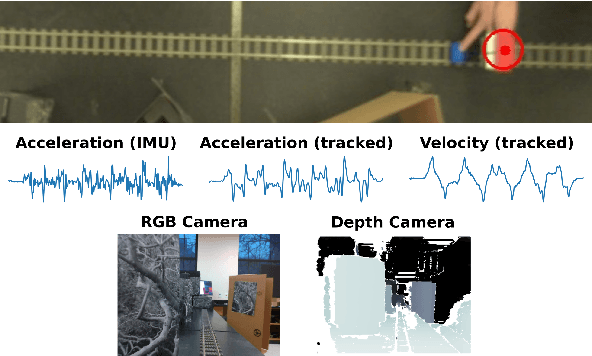

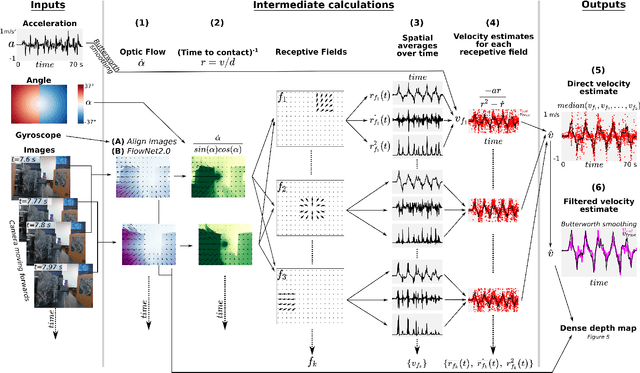

Abstract: The mechanism by which a tiny insect or insect-sized robot could estimate its absolute velocity and distance to nearby objects remains unknown. However, this ability is critical for behaviors that require estimating wind direction during flight, such as odor-plume tracking. Neuroscience and behavioral studies with insects have shown that they rely on the perception of image motion, or optic flow, to estimate relative motion, which is equivalent to the ratio of their velocity to their distance from objects in the world. The key open challenge is therefore to decouple these two states from a single measurement of their ratio. Although modern SLAM (Simultaneous Localization and Mapping) methods provide a solution to this problem for robotic systems, these methods typically rely on computations that insects likely cannot perform, such as simultaneously tracking multiple individual visual features, remembering a 3D map of the world, and solving nonlinear optimization problems using iterative algorithms. Here we present a novel algorithm, FLIVVER, which combines the geometry of dynamic forward motion with inspiration from insect visual processing to *directly* estimate absolute ground velocity from a combination of optic flow and acceleration information. Our algorithm provides a clear hypothesis for how insects might estimate absolute velocity, and also provides a theoretical framework for designing fast analog circuitry for efficient state estimation, which could be applied to insect-sized robots.
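To see why acceleration can break the velocity/distance ambiguity, consider a simplified case that is an assumption here and not the FLIVVER algorithm: an agent flying forward at a constant but unknown height h above flat ground, so that ventral optic flow is ω = v/h. If the agent also measures its forward acceleration a = dv/dt, then dω/dt = a/h, which gives h = a/(dω/dt) and v = ω·h. The scene, the noise-free signals, and the function names below are hypothetical.

```python
import numpy as np


def simulate_ventral_flow(h, v0, a, dt, n):
    """Toy scene (hypothetical): forward flight at constant height h (m)
    with constant acceleration a (m/s^2), starting at speed v0 (m/s).

    Returns time stamps, true ground speed, and ventral optic flow
    omega = v / h (rad/s), which only encodes the ratio of speed to height.
    """
    t = np.arange(n) * dt
    v = v0 + a * t
    omega = v / h
    return t, v, omega


def estimate_speed_and_height(omega, accel, dt):
    """Recover absolute speed and height from optic flow plus acceleration.

    Assumes h is constant, so d(omega)/dt = (dv/dt) / h = a / h.
    Hence h = a / (d omega / dt) and v = omega * h.
    Undefined when the acceleration (and thus d omega / dt) is zero.
    """
    omega_dot = np.gradient(omega, dt)  # finite-difference time derivative
    h_hat = accel / omega_dot
    v_hat = omega * h_hat
    return v_hat, h_hat


# Usage: 2 s of flight at 2 m height, speeding up from 1 m/s at 0.5 m/s^2.
t, v_true, omega = simulate_ventral_flow(h=2.0, v0=1.0, a=0.5, dt=0.01, n=200)
v_hat, h_hat = estimate_speed_and_height(omega, accel=0.5, dt=0.01)
print(np.allclose(v_hat, v_true), np.allclose(h_hat, 2.0))  # True True
```

The estimate fails when a = 0: at constant speed, optic flow alone carries only the ratio v/h, which is exactly the ambiguity described above. With real sensors, differentiating optic flow amplifies noise, so any practical estimator would need filtering.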