Anuj Rathore

PLGC: Pseudo-Labeled Graph Condensation

Jan 15, 2026

Abstract: Large graph datasets make training graph neural networks (GNNs) computationally costly. Graph condensation methods address this by generating small synthetic graphs that approximate the original data. However, existing approaches rely on clean, supervised labels, which limits their reliability when labels are scarce, noisy, or inconsistent. We propose Pseudo-Labeled Graph Condensation (PLGC), a self-supervised framework that constructs latent pseudo-labels from node embeddings and optimizes condensed graphs to match the original graph's structural and feature statistics, without requiring ground-truth labels. PLGC offers three key contributions: (1) A diagnosis of why supervised condensation fails under label noise and distribution shift. (2) A label-free condensation method that jointly learns latent prototypes and node assignments. (3) Theoretical guarantees showing that pseudo-labels preserve latent structural statistics of the original graph and ensure accurate embedding alignment. Empirically, across node classification and link prediction tasks, PLGC achieves competitive performance with state-of-the-art supervised condensation methods on clean datasets and exhibits substantial robustness under label noise, often outperforming all baselines by a significant margin. Our findings highlight the practical and theoretical advantages of self-supervised graph condensation in noisy or weakly-labeled environments.

Streaming Video Analytics On The Edge With Asynchronous Cloud Support

Oct 04, 2022

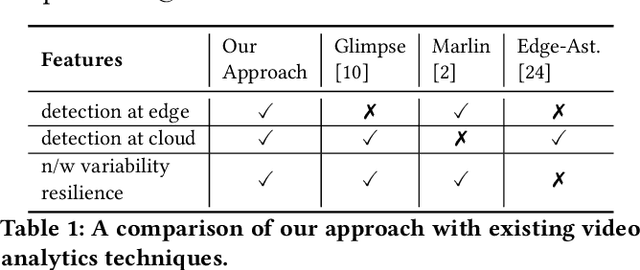

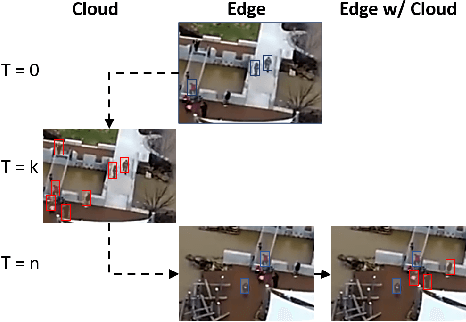

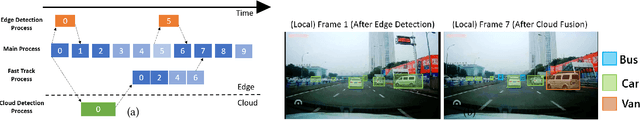

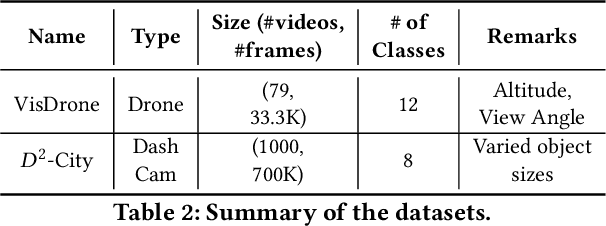

Abstract: Emerging Internet of Things (IoT) and mobile computing applications are expected to support latency-sensitive deep neural network (DNN) workloads. To realize this vision, the Internet is evolving towards an edge-computing architecture, where computing infrastructure is located closer to the end device to help achieve low latency. However, edge infrastructure may have limited resources compared to cloud environments and thus cannot run large DNN models, which often have high accuracy. In this work, we develop REACT, a framework that uses cloud resources to execute large, more accurate DNN models in order to improve the accuracy of models running on edge devices. To do so, we propose a novel edge-cloud fusion algorithm that fuses edge and cloud predictions, achieving low latency and high accuracy. We extensively evaluate our approach and show that it can significantly improve accuracy compared to baseline approaches. We focus specifically on object detection in videos (applicable in many video analytics scenarios) and show that the fused edge-cloud predictions can outperform the accuracy of edge-only and cloud-only scenarios by as much as 50%. We also show that REACT can achieve good performance across tradeoff points by choosing from a wide range of system parameters to satisfy use-case-specific constraints, such as limited network bandwidth or GPU cycles.
