Get our free extension to see links to code for papers anywhere online!Free add-on: code for papers everywhere!Free add-on: See code for papers anywhere!

Andreas Gerken

Continuous Value Iteration (CVI) Reinforcement Learning and Imaginary Experience Replay (IER) for learning multi-goal, continuous action and state space controllers

Aug 27, 2019Figures and Tables:

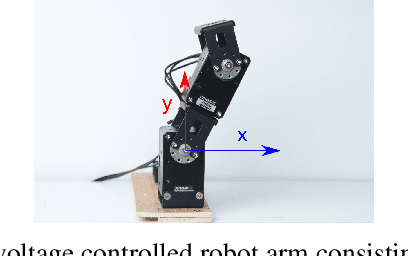

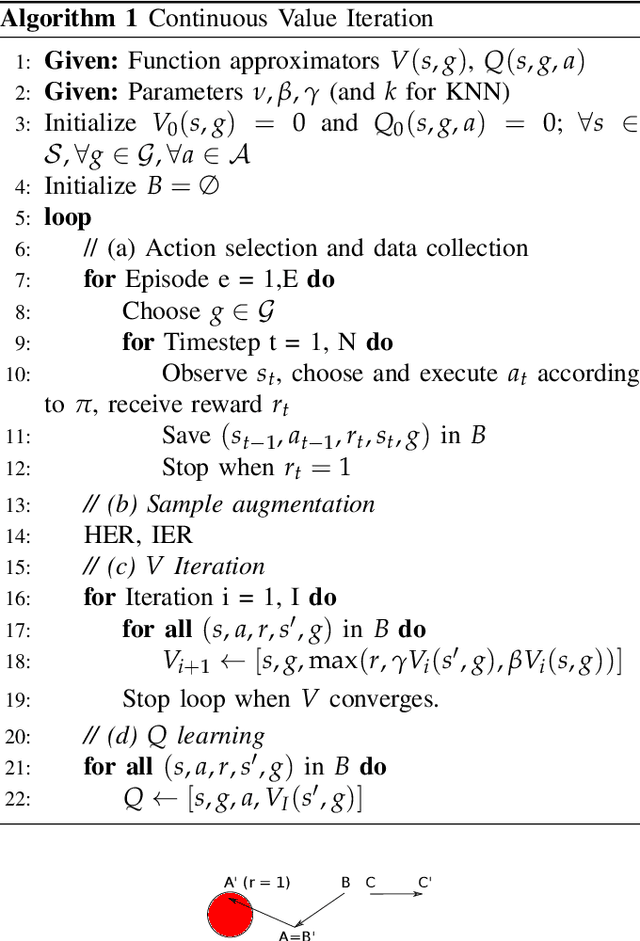

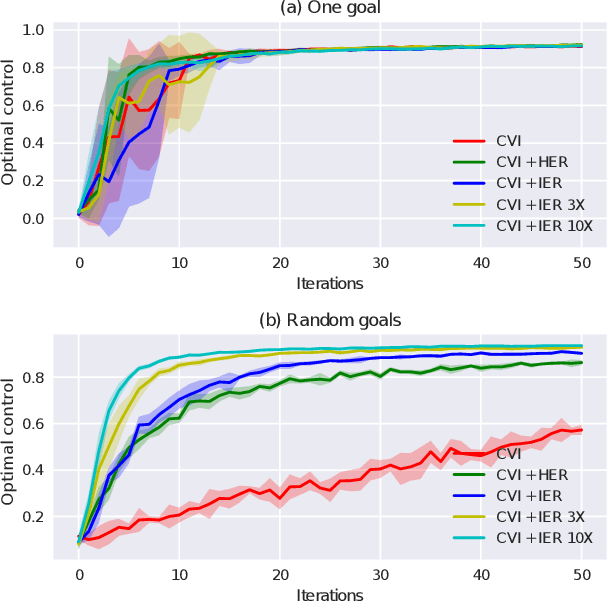

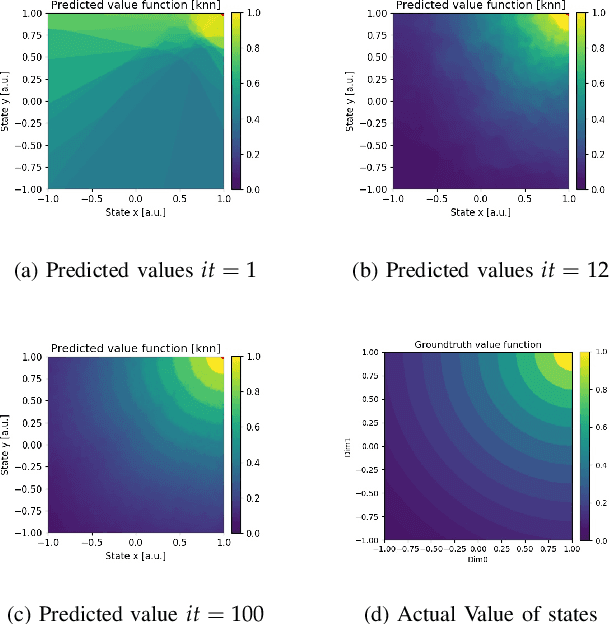

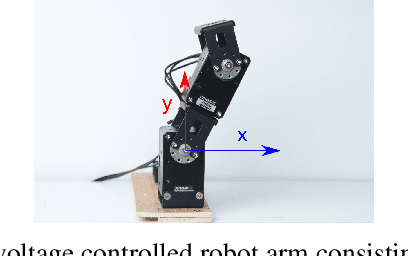

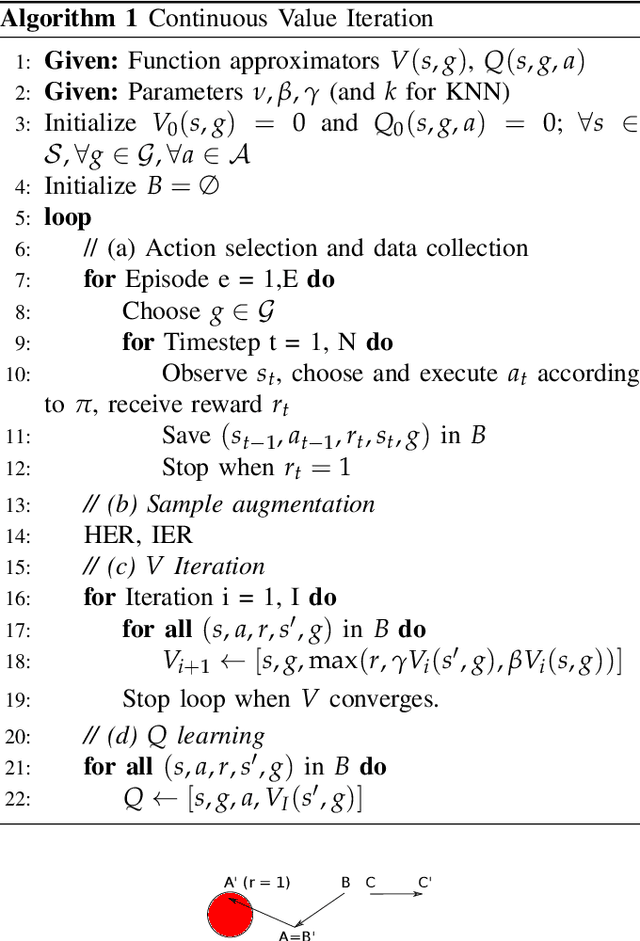

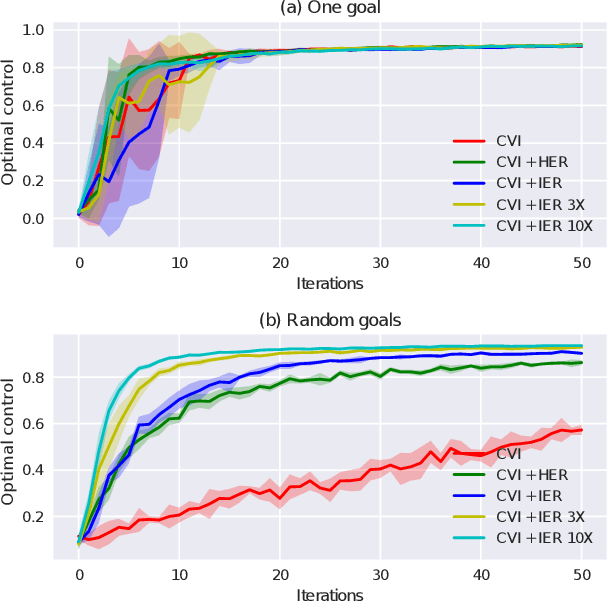

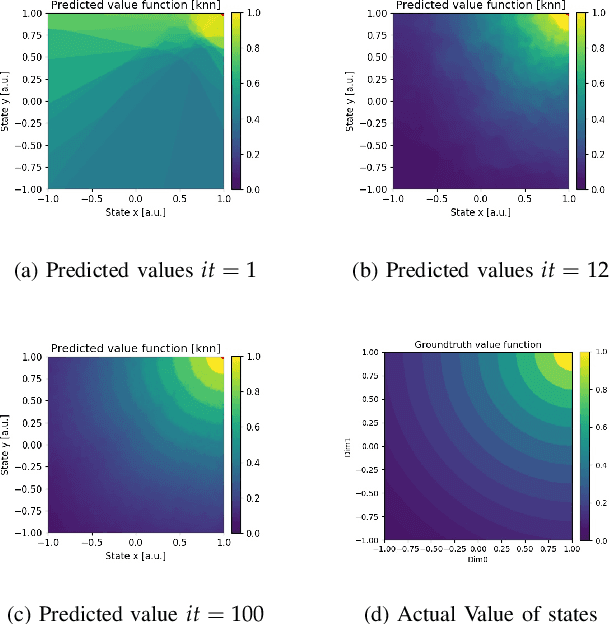

Abstract:This paper presents a novel model-free Reinforcement Learning algorithm for learning behavior in continuous action, state, and goal spaces. The algorithm approximates optimal value functions using non-parametric estimators. It is able to efficiently learn to reach multiple arbitrary goals in deterministic and nondeterministic environments. To improve generalization in the goal space, we propose a novel sample augmentation technique. Using these methods, robots learn faster and overall better controllers. We benchmark the proposed algorithms using simulation and a real-world voltage controlled robot that learns to maneuver in a non-observable Cartesian task space.

* Published in 2019 International Conference on Robotics and Automation

(ICRA) 20-24 May 2019

Via

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge