Andrea Ferrario

Epistemology gives a Future to Complementarity in Human-AI Interactions

Jan 14, 2026

Abstract: Human-AI complementarity is the claim that a human supported by an AI system can outperform either alone in a decision-making process. Since its introduction in the human-AI interaction literature, it has gained traction by generalizing the reliance paradigm and by offering a more practical alternative to the contested construct of 'trust in AI.' Yet complementarity faces key theoretical challenges: it lacks precise theoretical anchoring, it is formalized only as a post hoc indicator of relative predictive accuracy, it remains silent about other desiderata of human-AI interactions, and it abstracts away from the magnitude-cost profile of its performance gain. As a result, complementarity is difficult to obtain in empirical settings. In this work, we leverage epistemology to address these challenges by reframing complementarity within the discourse on justificatory AI. Drawing on computational reliabilism, we argue that historical instances of complementarity function as evidence that a given human-AI interaction is a reliable epistemic process for a given predictive task. Together with other reliability indicators assessing the alignment of the human-AI team with the epistemic standards and socio-technical practices, complementarity contributes to the degree of reliability of human-AI teams when generating predictions. This supports the practical reasoning of those affected by these outputs -- patients, managers, regulators, and others. In summary, our approach suggests that the role and value of complementarity lie not in providing a relative measure of predictive accuracy, but in helping calibrate decision-making to the reliability of AI-supported processes that increasingly shape everyday life.

A Scoping Review of the Ethical Perspectives on Anthropomorphising Large Language Model-Based Conversational Agents

Jan 14, 2026

Abstract: Anthropomorphisation -- the phenomenon whereby non-human entities are ascribed human-like qualities -- has become increasingly salient with the rise of large language model (LLM)-based conversational agents (CAs). Unlike earlier chatbots, LLM-based CAs routinely generate interactional and linguistic cues, such as first-person self-reference and epistemic and affective expressions, that empirical work shows can increase engagement. At the same time, anthropomorphisation raises ethical concerns, including deception, overreliance, and exploitative relationship framing, while some authors argue that anthropomorphic interaction may support autonomy, well-being, and inclusion. Despite increasing interest in the phenomenon, the literature remains fragmented across domains and varies substantially in how it defines, operationalizes, and normatively evaluates anthropomorphisation. This scoping review maps ethically oriented work on anthropomorphising LLM-based CAs across five databases and three preprint repositories. We synthesize (1) conceptual foundations, (2) ethical challenges and opportunities, and (3) methodological approaches. We find convergence on attribution-based definitions but substantial divergence in operationalization, a predominantly risk-forward normative framing, and limited empirical work that links observed interaction effects to actionable governance guidance. We conclude with a research agenda and design/governance recommendations for ethically deploying anthropomorphic cues in LLM-based conversational agents.

Addressing Social Misattributions of Large Language Models: An HCXAI-based Approach

Mar 26, 2024

Abstract: Human-centered explainable AI (HCXAI) advocates for the integration of social aspects into AI explanations. Central to the HCXAI discourse is the Social Transparency (ST) framework, which aims to make the socio-organizational context of AI systems accessible to their users. In this work, we suggest extending the ST framework to address the risks of social misattributions in Large Language Models (LLMs), particularly in sensitive areas like mental health. LLMs, which are remarkably capable of simulating roles and personas, may create mismatches between designers' intentions and users' perceptions of social attributes, risking emotional manipulation and dangerous behaviors, cases of epistemic injustice, and unwarranted trust. To address these issues, we propose enhancing the ST framework with a fifth 'W-question' to clarify the specific social attributions assigned to LLMs by their designers and users. This addition aims to bridge the gap between LLM capabilities and user perceptions, promoting the ethically responsible development and use of LLM-based technology.

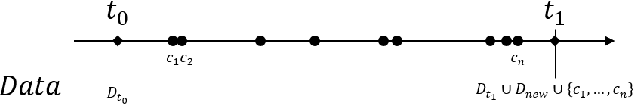

A Series of Unfortunate Counterfactual Events: the Role of Time in Counterfactual Explanations

Oct 09, 2020

Abstract: Counterfactual explanations are a prominent example of post-hoc interpretability methods in the explainable Artificial Intelligence research domain. They provide individuals with alternative scenarios and a set of recommendations to achieve a sought-after machine learning model outcome. Recently, the literature has identified desiderata of counterfactual explanations, such as feasibility, actionability, and sparsity, that should support their applicability in real-world contexts. However, we show that the literature has neglected the problem of the time dependency of counterfactual explanations. We argue that, due to their time dependency and because of the provision of recommendations, even feasible, actionable, and sparse counterfactual explanations may not be appropriate in real-world applications. This is due to the possible emergence of what we call "unfortunate counterfactual events." These events may occur due to the retraining of machine learning models whose outcomes have to be explained via counterfactual explanations. Series of unfortunate counterfactual events frustrate the efforts of those individuals who successfully implemented the recommendations of counterfactual explanations. This negatively affects people's trust in the ability of institutions to provide machine learning-supported decisions consistently. We introduce an approach to address the problem of the emergence of unfortunate counterfactual events that makes use of histories of counterfactual explanations. In the final part of the paper, we propose an ethical analysis of two distinct strategies to cope with the challenge of unfortunate counterfactual events. We show that they respond to an ethically responsible imperative to preserve the trustworthiness of credit lending organizations, the decision models they employ, and the social-economic function of credit lending.
