Alun Preece

Cardiff University

Uncertainty Aware AI ML: Why and How

Sep 20, 2018

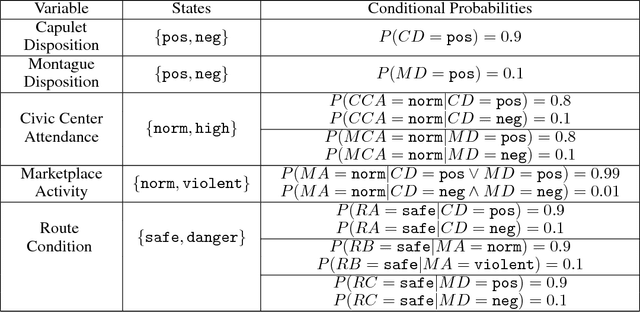

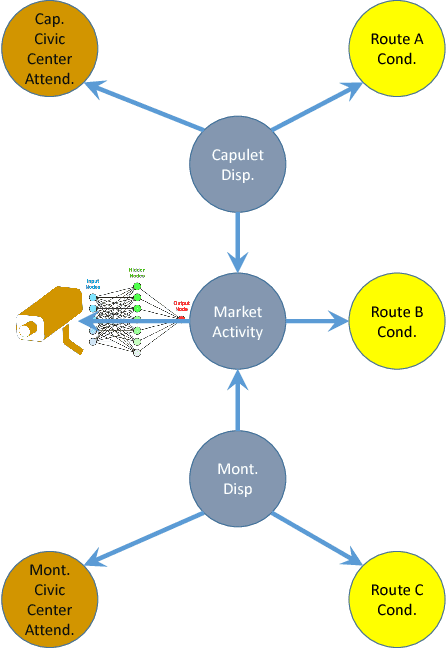

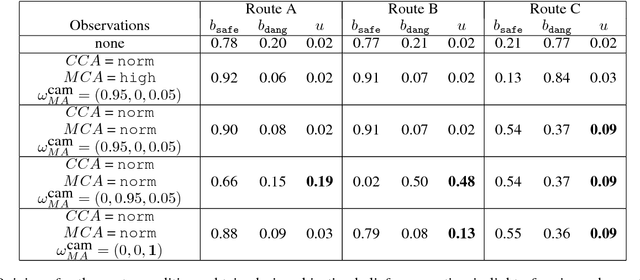

Abstract: This paper argues the need for research to realize uncertainty-aware artificial intelligence and machine learning (AI&ML) systems for decision support by describing a number of motivating scenarios. Furthermore, the paper defines uncertainty-awareness and lays out the challenges along with surveying some promising research directions. A theoretical demonstration illustrates how two emerging uncertainty-aware ML and AI technologies could be integrated and be of value for a route planning operation.

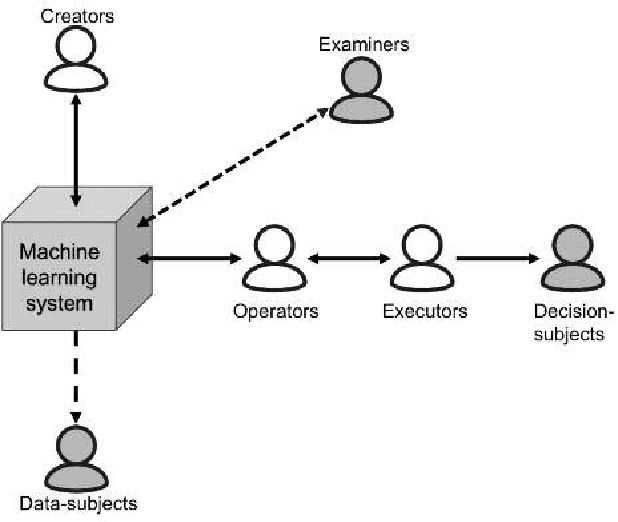

Interpretable to Whom? A Role-based Model for Analyzing Interpretable Machine Learning Systems

Jun 20, 2018

Abstract: Several researchers have argued that a machine learning system's interpretability should be defined in relation to a specific agent or task: we should not ask if the system is interpretable, but to whom is it interpretable. We describe a model intended to help answer this question, by identifying different roles that agents can fulfill in relation to the machine learning system. We illustrate the use of our model in a variety of scenarios, exploring how an agent's role influences its goals, and the implications for defining interpretability. Finally, we make suggestions for how our model could be useful to interpretability researchers, system developers, and regulatory bodies auditing machine learning systems.

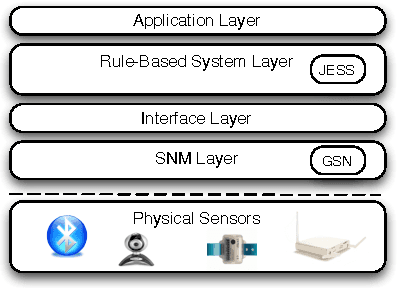

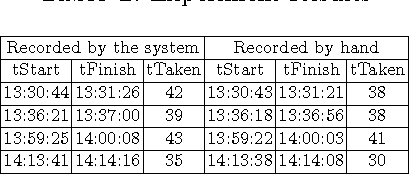

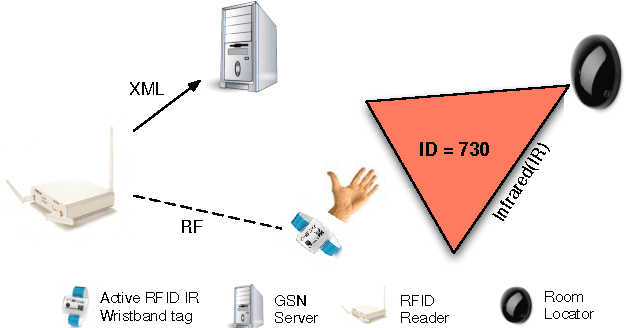

Rule-Based Semantic Sensing

Jul 11, 2011

Abstract: Rule-Based Systems have been in use for decades to solve a variety of problems but not in the sensor informatics domain. Rules aid the aggregation of low-level sensor readings to form a more complete picture of the real world and help to address 10 identified challenges for sensor network middleware. This paper presents the reader with an overview of a system architecture and a pilot application to demonstrate the usefulness of a system integrating rules with sensor middleware.

Proceedings of the Doctoral Consortium and Poster Session of the 5th International Symposium on Rules (RuleML 2011@IJCAI)

Jul 08, 2011

Abstract: This volume contains the papers presented at the first edition of the Doctoral Consortium of the 5th International Symposium on Rules (RuleML 2011@IJCAI) held on July 19th, 2011 in Barcelona, as well as the poster session papers of the RuleML 2011@IJCAI main conference.
