Alexandra Getmanskaya

TUMLS: Trustful Fully Unsupervised Multi-Level Segmentation for Whole Slide Images of Histology

Apr 17, 2025

Abstract: Digital pathology, augmented by artificial intelligence (AI), holds significant promise for improving the workflow of pathologists. However, challenges such as the labor-intensive annotation of whole slide images (WSIs), high computational demands, and trust concerns arising from the absence of uncertainty estimation in predictions hinder the practical application of current AI methodologies in histopathology. To address these issues, we present a novel trustful fully unsupervised multi-level segmentation methodology (TUMLS) for WSIs. TUMLS adopts an autoencoder (AE) as a feature extractor to identify the different tissue types within low-resolution training data. It selects representative patches from each identified group based on an uncertainty measure and then performs unsupervised nuclei segmentation in the corresponding higher-resolution space without using any machine learning (ML) algorithms. Crucially, this solution integrates seamlessly into clinicians' workflows, transforming the examination of an entire WSI into a review of concise, interpretable cross-level insights. This integration significantly enhances and accelerates the workflow while ensuring transparency. We evaluated our approach on the UPENN-GBM dataset, where the AE achieved a mean squared error (MSE) of 0.0016. Additionally, nucleus segmentation was assessed on the MoNuSeg dataset, where TUMLS outperformed all unsupervised approaches with an F1 score of 77.46% and a Jaccard score of 63.35%. These results demonstrate the efficacy of TUMLS in advancing the field of digital pathology.
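The abstract reports F1 and Jaccard scores without describing the evaluation protocol. As a rough illustration, assuming pixel-level binary masks (the paper may use an object-level or dataset-aggregated variant on MoNuSeg), both metrics follow from the counts of true positives, false positives, and false negatives. The function name `f1_and_jaccard` and the empty-mask convention are hypothetical choices for this sketch.

```python
import numpy as np


def f1_and_jaccard(pred: np.ndarray, target: np.ndarray) -> tuple[float, float]:
    """Pixel-level F1 (Dice) and Jaccard (IoU) for two binary masks.

    Hypothetical helper for illustration; not the paper's evaluation code.
    """
    pred = pred.astype(bool)
    target = target.astype(bool)
    tp = np.logical_and(pred, target).sum()    # predicted nucleus, truly nucleus
    fp = np.logical_and(pred, ~target).sum()   # predicted nucleus, truly background
    fn = np.logical_and(~pred, target).sum()   # missed nucleus pixels
    denom_f1 = 2 * tp + fp + fn
    denom_j = tp + fp + fn
    # Assumed convention: two empty masks count as a perfect match.
    f1 = 2 * tp / denom_f1 if denom_f1 else 1.0
    jaccard = tp / denom_j if denom_j else 1.0
    return float(f1), float(jaccard)


pred = np.array([[1, 1, 0], [0, 1, 0]])
target = np.array([[1, 0, 0], [0, 1, 1]])
print(f1_and_jaccard(pred, target))  # tp=2, fp=1, fn=1 -> F1 = 4/6, Jaccard = 2/4
```

For a single mask pair the two scores are linked by J = F1 / (2 − F1); scores averaged or aggregated over a dataset need not satisfy this identity exactly.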

Cephalometric Landmark Regression with Convolutional Neural Networks on 3D Computed Tomography Data

Jul 20, 2020

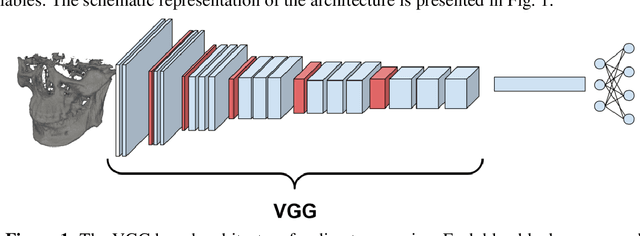

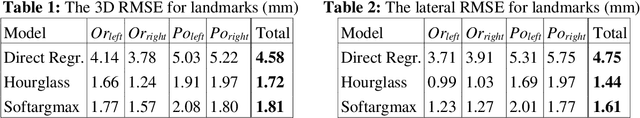

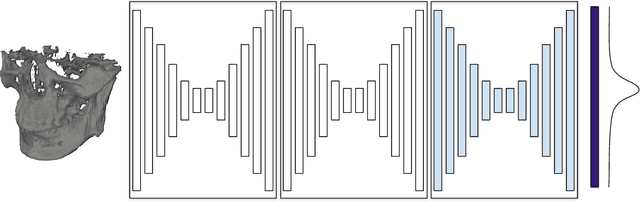

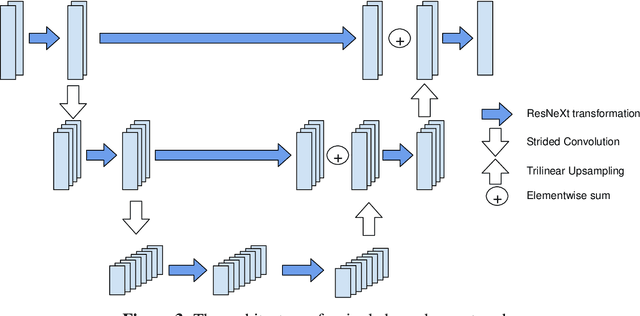

Abstract: In this paper, we address the problem of automatic three-dimensional cephalometric analysis. Cephalometric analysis performed on lateral radiographs does not fully exploit the structure of 3D objects because of the projection onto the lateral plane. With the development of three-dimensional imaging techniques such as CT, several analysis methods have been proposed that extend to the 3D case. Analysis based on these methods is invariant to rotations and translations and can describe complex skull deformations, where 2D cephalometry is of no use. We provide a broad overview of existing approaches to cephalometric landmark regression. Moreover, we perform a series of experiments with state-of-the-art 3D convolutional neural network (CNN)-based methods for keypoint regression: direct regression with a CNN, heatmap regression, and Softargmax regression. For the first time, we extensively evaluate these methods and demonstrate their effectiveness in estimating the Frankfort horizontal and the locations of cephalometric points for patients with severe skull deformations. We demonstrate that the Heatmap and Softargmax regression models achieve regression errors low enough for medical applications (less than 4 mm). In addition, the Softargmax model achieves an inclination error of 1.15° for the Frankfort horizontal. For a fair comparison with prior art, we also report results projected onto the lateral plane.
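The abstract names Softargmax regression and a Frankfort-horizontal inclination error without giving implementation details. A minimal NumPy sketch of the generic forward computation follows, under stated assumptions: Softargmax turns a per-landmark 3D score volume into the probability-weighted mean voxel coordinate, and the inclination error is taken as the angle between predicted and reference plane normals, each plane spanned by three landmarks (for example left porion, right porion, and left orbitale; this choice is an assumption). The names `soft_argmax_3d`, `plane_inclination_deg`, `beta`, and `spacing` are hypothetical. In training, the same operation would be written in an autodiff framework so gradients flow through it.

```python
import numpy as np


def soft_argmax_3d(logits: np.ndarray, spacing=(1.0, 1.0, 1.0), beta: float = 1.0) -> np.ndarray:
    """Expected (z, y, x) position under a softmax over a (D, H, W) score volume.

    `spacing` (assumed voxel size, e.g. in mm) converts voxel units to physical units;
    `beta` (assumed temperature) sharpens or flattens the distribution.
    """
    scores = beta * logits.astype(float)
    scores -= scores.max()               # subtract max for numerical stability
    probs = np.exp(scores)
    probs /= probs.sum()                 # softmax over all voxels
    d, h, w = logits.shape
    zz, yy, xx = np.meshgrid(np.arange(d), np.arange(h), np.arange(w), indexing="ij")
    coords_vox = np.array([(probs * zz).sum(), (probs * yy).sum(), (probs * xx).sum()])
    return coords_vox * np.asarray(spacing, dtype=float)


def plane_normal(p1, p2, p3) -> np.ndarray:
    """Unit normal of the plane through three 3D points."""
    p1, p2, p3 = (np.asarray(p, dtype=float) for p in (p1, p2, p3))
    n = np.cross(p2 - p1, p3 - p1)
    return n / np.linalg.norm(n)


def plane_inclination_deg(pred_pts, true_pts) -> float:
    """Angle in degrees between two planes, each given by three landmarks."""
    n_pred = plane_normal(*pred_pts)
    n_true = plane_normal(*true_pts)
    cos = abs(float(np.dot(n_pred, n_true)))  # abs(): normal orientation is arbitrary
    return float(np.degrees(np.arccos(np.clip(cos, 0.0, 1.0))))


heatmap = np.zeros((5, 6, 7))
heatmap[2, 3, 4] = 50.0                  # one sharp peak
print(soft_argmax_3d(heatmap))           # close to [2, 3, 4]
print(plane_inclination_deg([(0, 0, 0), (1, 0, 0), (0, 1, 1)],
                            [(0, 0, 0), (1, 0, 0), (0, 1, 0)]))  # about 45 degrees
```

With `spacing` set to the scan's voxel size, a landmark error in millimetres would be `np.linalg.norm(pred - true)` between predicted and reference coordinates. Unlike a hard argmax, the weighted mean yields sub-voxel positions.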
