Alexander Lobashev

Lightweight Optimal-Transport Harmonization on Edge Devices

Nov 16, 2025

Abstract:Color harmonization adjusts the colors of an inserted object so that it perceptually matches the surrounding image, resulting in a seamless composite. The harmonization problem naturally arises in augmented reality (AR), yet harmonization algorithms are not currently integrated into AR pipelines because real-time solutions are scarce. In this work, we address color harmonization for AR by proposing a lightweight approach that supports on-device inference. To this end, we leverage classical optimal transport theory by training a compact encoder to predict the Monge-Kantorovich transport map. We benchmark our MKL-Harmonizer algorithm against state-of-the-art methods and demonstrate that, for real composite AR images, our method achieves the best aggregated score. We release our dedicated AR dataset of composite images with pixel-accurate masks, together with a data-gathering toolkit to support further data acquisition by researchers.

Hessian Geometry of Latent Space in Generative Models

Jun 12, 2025

Abstract:This paper presents a novel method for analyzing the latent space geometry of generative models, including statistical physics models and diffusion models, by reconstructing the Fisher information metric. The method approximates the posterior distribution of latent variables given generated samples and uses this to learn the log-partition function, which defines the Fisher metric for exponential families. Theoretical convergence guarantees are provided, and the method is validated on the Ising and TASEP models, outperforming existing baselines in reconstructing thermodynamic quantities. Applied to diffusion models, the method reveals a fractal structure of phase transitions in the latent space, characterized by abrupt changes in the Fisher metric. We demonstrate that while geodesic interpolations are approximately linear within individual phases, this linearity breaks down at phase boundaries, where the diffusion model exhibits a divergent Lipschitz constant with respect to the latent space. These findings provide new insights into the complex structure of diffusion model latent spaces and their connection to phenomena like phase transitions. Our source code is available at https://github.com/alobashev/hessian-geometry-of-diffusion-models.
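
For reference, the link between the log-partition function and the Fisher metric mentioned in this abstract is the standard exponential-family identity. The notation below ($\theta$, $T(x)$, $h(x)$, $A(\theta)$) is chosen here for illustration and is not taken from the paper:

$$
p(x \mid \theta) = h(x)\,\exp\big(\langle \theta, T(x) \rangle - A(\theta)\big),
\qquad
g_{ij}(\theta) = \frac{\partial^2 A(\theta)}{\partial \theta_i \, \partial \theta_j}
= \operatorname{Cov}_{\theta}\big[T_i(x),\, T_j(x)\big].
$$

In other words, for an exponential family the Fisher metric is the Hessian of the log-partition function $A(\theta)$, so learning $A$ is enough to recover the metric.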

Training-Free Voice Conversion with Factorized Optimal Transport

Jun 11, 2025

Abstract:This paper introduces Factorized MKL-VC, a training-free modification of the kNN-VC pipeline. In contrast with the original pipeline, our algorithm performs high-quality any-to-any cross-lingual voice conversion with only 5 seconds of reference audio. MKL-VC replaces kNN regression with a factorized optimal transport map in WavLM embedding subspaces, derived from the Monge-Kantorovich linear solution. Factorization addresses non-uniform variance across dimensions, ensuring effective feature transformation. Experiments on the LibriSpeech and FLEURS datasets show that MKL-VC significantly improves content preservation and robustness with short reference audio, outperforming kNN-VC. MKL-VC achieves performance comparable to FACodec, especially in the cross-lingual voice conversion domain.
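
As a rough illustration of the Monge-Kantorovich linear map referred to above, the sketch below implements the textbook closed-form optimal transport map between two Gaussians fitted to source and target feature sets. It is not the authors' implementation: the function names `mkl_map` and `apply_mkl`, the regularization constant `eps`, and the use of a single unfactorized map are assumptions made for this example.

```python
import numpy as np


def _sqrtm_psd(m):
    """Symmetric square root of a positive semi-definite matrix."""
    vals, vecs = np.linalg.eigh(m)
    vals = np.clip(vals, 0.0, None)  # guard against tiny negative eigenvalues
    return (vecs * np.sqrt(vals)) @ vecs.T


def mkl_map(source, target, eps=1e-8):
    """Fit the linear Gaussian-to-Gaussian transport map T(x) = mu_t + A (x - mu_s).

    source, target: arrays of shape (n_samples, dim).
    eps is a hypothetical ridge term added to keep the covariances invertible.
    """
    mu_s, mu_t = source.mean(axis=0), target.mean(axis=0)
    dim = source.shape[1]
    cov_s = np.cov(source, rowvar=False) + eps * np.eye(dim)
    cov_t = np.cov(target, rowvar=False) + eps * np.eye(dim)
    s_half = _sqrtm_psd(cov_s)
    s_half_inv = np.linalg.inv(s_half)
    middle = _sqrtm_psd(s_half @ cov_t @ s_half)
    a = s_half_inv @ middle @ s_half_inv  # symmetric positive definite
    return a, mu_s, mu_t


def apply_mkl(x, a, mu_s, mu_t):
    """Apply the fitted map row-wise; a is symmetric, so x @ a equals (a @ x.T).T."""
    return (x - mu_s) @ a + mu_t
```

After the map is applied, the transformed source features have the target's mean and (up to the `eps` regularization) the target's covariance. A factorized variant in the spirit of the abstract would presumably fit such a map separately in each embedding subspace, but the choice of subspaces is not specified here.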

Color Conditional Generation with Sliced Wasserstein Guidance

Mar 24, 2025

Abstract:We propose SW-Guidance, a training-free approach for image generation conditioned on the color distribution of a reference image. While it is possible to generate an image with fixed colors by first creating an image from a text prompt and then applying a color style transfer method, this approach often results in semantically meaningless colors in the generated image. Our method solves this problem by modifying the sampling process of a diffusion model to incorporate the differentiable Sliced 1-Wasserstein distance between the color distribution of the generated image and the reference palette. Our method outperforms state-of-the-art techniques for color-conditional generation in terms of color similarity to the reference, producing images that not only match the reference colors but also maintain semantic coherence with the original text prompt. Our source code is available at https://github.com/alobashev/sw-guidance/.
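
To make the Sliced 1-Wasserstein distance concrete, here is a minimal NumPy sketch of a Monte Carlo estimate between two point clouds, such as the RGB pixels of two images. It is an illustration only: the function name `sliced_wasserstein_1`, the number of projections, and the equal-sample-count restriction are assumptions, and the code is not differentiable, unlike the version the abstract describes inside the diffusion sampler.

```python
import numpy as np


def sliced_wasserstein_1(x, y, n_projections=64, seed=0):
    """Monte Carlo estimate of the sliced 1-Wasserstein distance.

    x, y: arrays of shape (n_samples, dim) with the same n_samples
    (an assumption of this sketch; unequal sizes would need quantile interpolation).
    """
    if x.shape != y.shape:
        raise ValueError("this sketch assumes x and y have identical shapes")
    rng = np.random.default_rng(seed)
    # Random unit directions on the sphere.
    dirs = rng.normal(size=(n_projections, x.shape[1]))
    dirs /= np.linalg.norm(dirs, axis=1, keepdims=True)
    # In 1D, the W1 distance between equal-size empirical measures is the
    # mean absolute difference of the sorted projections.
    px = np.sort(x @ dirs.T, axis=0)
    py = np.sort(y @ dirs.T, axis=0)
    return float(np.mean(np.abs(px - py)))
```

For example, flattening two images to `(n_pixels, 3)` arrays and subsampling them to the same length gives a scalar that is zero when the two color clouds coincide and grows as the palettes drift apart.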

Color Transfer with Modulated Flows

Mar 24, 2025

Abstract:In this work, we introduce Modulated Flows (ModFlows), a novel approach for color transfer between images based on rectified flows. The primary goal of color transfer is to adjust the colors of a target image to match the color distribution of a reference image. Our technique is based on optimal transport and executes color transfer as an invertible transformation within the RGB color space. ModFlows utilizes the bijective property of flows, enabling us to introduce a common intermediate color distribution and build a dataset of rectified flows. We train an encoder on this dataset to predict the weights of a rectified model for new images. After training on a set of optimal transport plans, our approach can generate plans for new pairs of distributions without additional fine-tuning. We additionally show that the trained encoder provides an image embedding associated only with its color style. The presented method is capable of processing 4K images and achieves state-of-the-art performance in terms of content and style similarity. Our source code is available at https://github.com/maria-larchenko/modflows

Diffusion-based inpainting of incomplete Euclidean distance matrices of trajectories generated by a fractional Brownian motion

Apr 10, 2024

Abstract:Fractional Brownian motion (fBm) trajectories feature both randomness and strong scale-free correlations, challenging generative models to reproduce the intrinsic memory characterizing the underlying process. Here we test a diffusion probabilistic model on a specific dataset of corrupted images corresponding to incomplete Euclidean distance matrices of fBm at various memory exponents $H$. Our dataset implies uniqueness of the data imputation in the regime of low missing ratio, where the remaining partial graph is rigid, providing the ground truth for the inpainting. We find that the conditional diffusion generation stably reproduces the statistics of missing fBm-distributed distances for different values of the exponent $H$. Furthermore, while diffusion models have recently been shown to remember samples from the training database, we show that diffusion-based inpainting behaves qualitatively differently from the database search as the database size increases. Finally, we apply our fBm-trained diffusion model with $H=1/3$ to the completion of chromosome distance matrices obtained in single-cell microscopy experiments, showing its superiority over the standard bioinformatics algorithms. Our source code is available on GitHub at https://github.com/alobashev/diffusion_fbm.
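
The kind of data described in this abstract can be sketched as follows: sample an fBm trajectory, compute its Euclidean distance matrix, and hide a fraction of the entries. This is an assumption-laden illustration, not the authors' data pipeline: the Cholesky sampler, the 3D embedding (`dim=3`), the NaN encoding of missing entries, and all function names are choices made for this example.

```python
import numpy as np


def fbm_trajectory(n_steps, hurst, dim=3, seed=0):
    """Sample an fBm path at times 1..n_steps via Cholesky factorization.

    Uses the fBm covariance 0.5 * (s^{2H} + t^{2H} - |t - s|^{2H}),
    with independent coordinates in each of the `dim` dimensions.
    """
    rng = np.random.default_rng(seed)
    t = np.arange(1, n_steps + 1, dtype=float)
    cov = 0.5 * (
        t[:, None] ** (2 * hurst)
        + t[None, :] ** (2 * hurst)
        - np.abs(t[:, None] - t[None, :]) ** (2 * hurst)
    )
    chol = np.linalg.cholesky(cov)
    return chol @ rng.normal(size=(n_steps, dim))


def distance_matrix(points):
    """Pairwise Euclidean distances between trajectory points."""
    diff = points[:, None, :] - points[None, :, :]
    return np.linalg.norm(diff, axis=-1)


def corrupt(dist, missing_ratio, seed=0):
    """Hide a random fraction of off-diagonal entries symmetrically (as NaN)."""
    rng = np.random.default_rng(seed)
    rows, cols = np.triu_indices(dist.shape[0], k=1)
    drop = rng.random(rows.size) < missing_ratio
    out = dist.copy()
    out[rows[drop], cols[drop]] = np.nan
    out[cols[drop], rows[drop]] = np.nan
    return out
```

A call such as `corrupt(distance_matrix(fbm_trajectory(64, 1/3)), 0.2)` yields a symmetric matrix with missing entries, which is the kind of input an inpainting model would be asked to complete.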

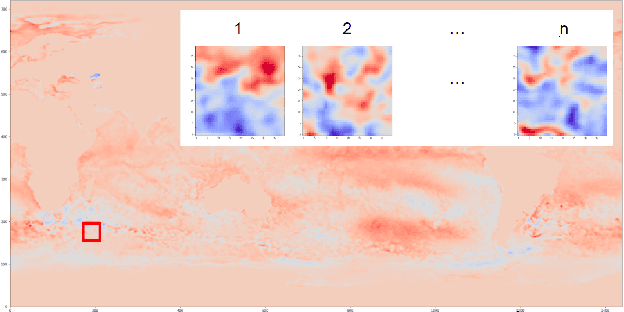

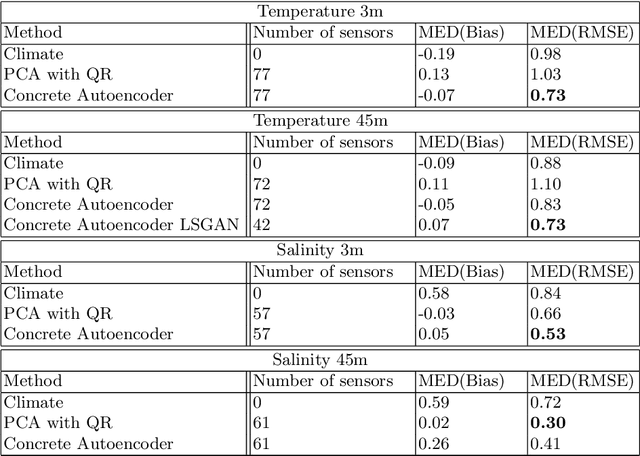

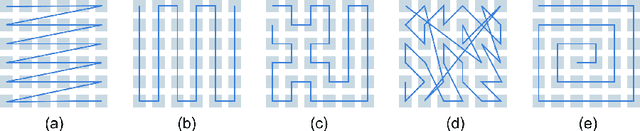

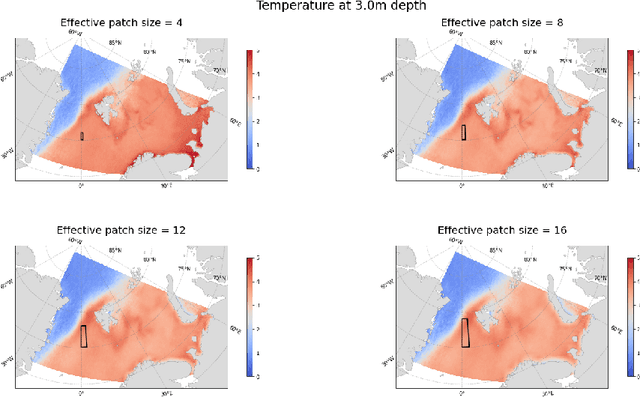

Information Entropy Initialized Concrete Autoencoder for Optimal Sensor Placement and Reconstruction of Geophysical Fields

Jun 28, 2022

Abstract:We propose a new approach to the optimal placement of sensors for the problem of reconstructing geophysical fields from sparse measurements. Our method consists of two stages. In the first stage, we estimate the variability of the physical field as a function of spatial coordinates by approximating its information entropy through the Conditional PixelCNN network. To calculate the entropy, a new ordering of a two-dimensional data array (spiral ordering) is proposed, which makes it possible to obtain the entropy of a physical field simultaneously for several spatial scales. In the second stage, the entropy of the physical field is used to initialize the distribution of optimal sensor locations. This distribution is further optimized with the Concrete Autoencoder architecture with the straight-through gradient estimator and adversarial loss to simultaneously minimize the number of sensors and maximize reconstruction accuracy. Our method scales linearly with data size, unlike the commonly used Principal Component Analysis (PCA). We demonstrate our method on two examples: (a) temperature and (b) salinity fields around the Barents Sea and the Svalbard group of islands. For these examples, we compute the reconstruction error of our method and compare it against two baselines: (1) PCA with QR factorization and (2) climatology. We find that the obtained optimal sensor locations have a clear physical interpretation and correspond to the boundaries between sea currents.
