Aleix Cambray

Incremental Sequence Classification with Temporal Consistency

May 22, 2025

Abstract: We address the problem of incremental sequence classification, where predictions are updated as new elements in the sequence are revealed. Drawing on temporal-difference learning from reinforcement learning, we identify a temporal-consistency condition that successive predictions should satisfy. We leverage this condition to develop a novel loss function for training incremental sequence classifiers. Through a concrete example, we demonstrate that optimizing this loss can offer substantial gains in data efficiency. We apply our method to text classification tasks and show that it improves predictive accuracy over competing approaches on several benchmark datasets. We further evaluate our approach on the task of verifying large language model generations for correctness in grade-school math problems. Our results show that models trained with our method are better able to distinguish promising generations from unpromising ones after observing only a few tokens.

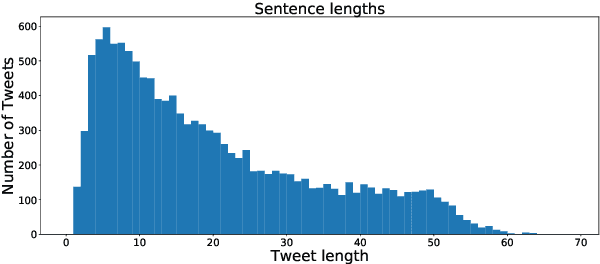

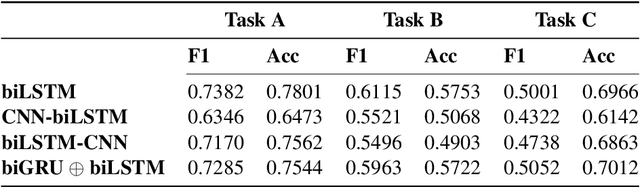

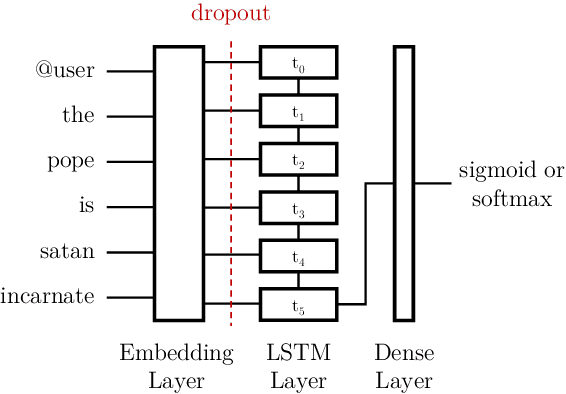

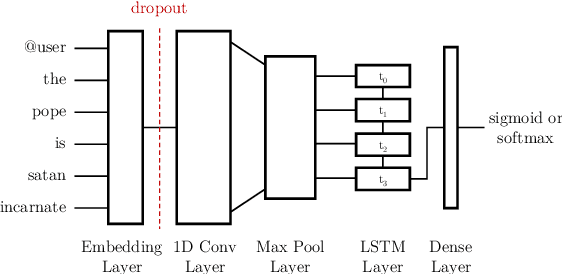

Bidirectional Recurrent Models for Offensive Tweet Classification

Mar 19, 2019

Abstract: In this paper we propose four deep recurrent architectures to tackle the task of offensive tweet detection, as well as the further classification of whether a tweet is targeted and what the subject of that targeting is. Our architectures are based on LSTMs and GRUs: we present a simple bidirectional LSTM as a baseline system and then increase the complexity of the models by adding convolutional layers and implementing a split-process-merge architecture with LSTM and GRU as processors. Multiple pre-processing techniques were also investigated. The validation F1-scores of each model are presented for the three subtasks, along with the final F1-score on the private competition test set. We found that model complexity did not necessarily yield better results. Our best-performing model was also the simplest, a bidirectional LSTM, closely followed by a two-branch bidirectional LSTM and GRU architecture.
