Ailiya Borjigin

Safe and Compliant Cross-Market Trade Execution via Constrained RL and Zero-Knowledge Audits

Oct 06, 2025

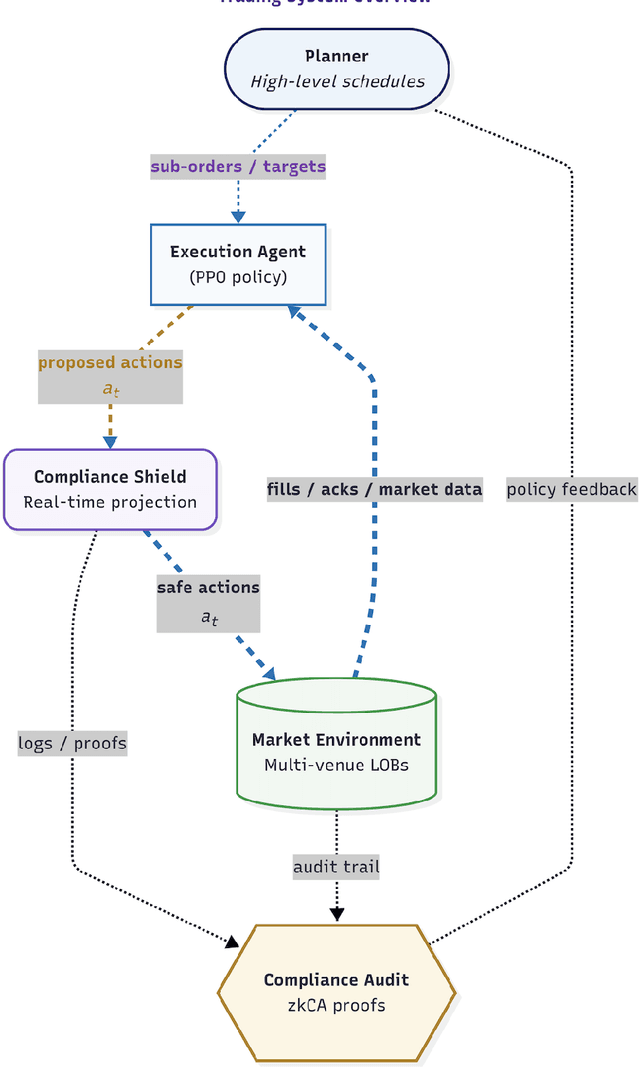

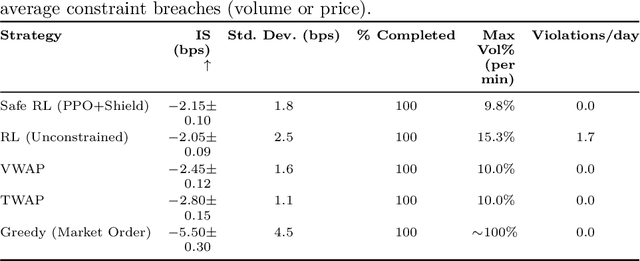

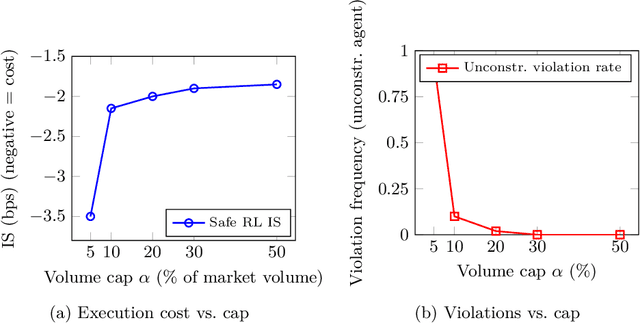

Abstract: We present a cross-market algorithmic trading system that balances execution quality with rigorous compliance enforcement. The architecture comprises a high-level planner, a reinforcement learning execution agent, and an independent compliance agent. We formulate trade execution as a constrained Markov decision process with hard constraints on participation limits, price bands, and self-trading avoidance. The execution agent is trained with proximal policy optimization, while a runtime action shield projects any unsafe action into a feasible set. To support auditability without exposing proprietary signals, we add a zero-knowledge compliance audit layer that produces cryptographic proofs that all actions satisfied the constraints. We evaluate in a multi-venue, ABIDES-based simulator and compare against standard baselines (e.g., TWAP, VWAP). The learned policy reduces implementation shortfall and variance while exhibiting no observed constraint violations across stress scenarios including elevated latency, partial fills, compliance module toggling, and varying constraint limits. We report effects at the 95% confidence level using paired t-tests and examine tail risk via CVaR. We situate the work at the intersection of optimal execution, safe reinforcement learning, regulatory technology, and verifiable AI, and discuss ethical considerations, limitations (e.g., modeling assumptions and computational overhead), and paths to real-world deployment.
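The abstract does not say how the action shield computes its projection, so the following is only a minimal sketch of the general idea, assuming a single-order action and simple clipping. The names `Order`, `Limits`, `shield`, and every parameter (including the `tick` size and the own-quote arguments) are hypothetical and do not come from the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Order:
    side: str     # "buy" or "sell"
    qty: float    # shares requested for this step
    price: float  # limit price


@dataclass(frozen=True)
class Limits:
    max_participation: float  # cap on qty as a fraction of market volume
    band_low: float           # lowest permitted limit price
    band_high: float          # highest permitted limit price


def shield(order, limits, market_volume,
           own_best_bid=None, own_best_ask=None, tick=0.01):
    """Map a proposed order to one that satisfies all three constraints.

    Hypothetical illustration: plain clipping stands in for whatever
    projection the paper actually uses.
    """
    # Participation limit: clip size to a fraction of market volume.
    qty = min(max(order.qty, 0.0), limits.max_participation * market_volume)
    # Price band: clamp the limit price into [band_low, band_high].
    price = min(max(order.price, limits.band_low), limits.band_high)
    # Self-trade avoidance: do not cross our own resting quote.
    if order.side == "buy" and own_best_ask is not None and price >= own_best_ask:
        price = own_best_ask - tick
    if order.side == "sell" and own_best_bid is not None and price <= own_best_bid:
        price = own_best_bid + tick
    # If repricing left the band, no feasible price exists: send nothing.
    if not (limits.band_low <= price <= limits.band_high):
        qty = 0.0
        price = min(max(price, limits.band_low), limits.band_high)
    return Order(order.side, qty, price)


limits = Limits(max_participation=0.1, band_low=99.0, band_high=101.0)
proposed = Order("buy", qty=500.0, price=102.0)
safe = shield(proposed, limits, market_volume=1000.0, own_best_ask=100.5)
```

In this sketch the shield sits between the policy and the venue, so the learned policy can propose anything while only the shielded order is ever sent. Dropping the order to zero quantity when no feasible price remains is one possible design choice; the paper may handle that case differently.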

Behavioral Universe Network (BUN): A Behavioral Information-Based Framework for Complex Systems

Apr 21, 2025

Abstract: Modern digital ecosystems feature complex, dynamic interactions among autonomous entities across diverse domains. Traditional models often separate agents and objects, lacking a unified foundation to capture their interactive behaviors. This paper introduces the Behavioral Universe Network (BUN), a theoretical framework grounded in the Agent-Interaction-Behavior (AIB) formalism. BUN treats subjects (active agents), objects (resources), and behaviors (operations) as first-class entities, all governed by a shared Behavioral Information Base (BIB). We detail the AIB core concepts and demonstrate how BUN leverages information-driven triggers, semantic enrichment, and adaptive rules to coordinate multi-agent systems. We highlight key benefits: enhanced behavior analysis, strong adaptability, and cross-domain interoperability. We conclude by positioning BUN as a promising foundation for next-generation digital governance and intelligent applications.
