"speech": models, code, and papers

TO-Rawnet: Improving RawNet with TCN and Orthogonal Regularization for Fake Audio Detection

May 23, 2023

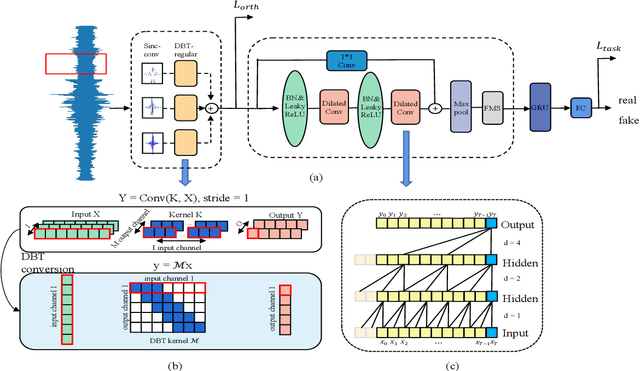

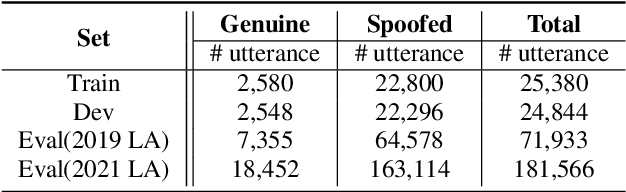

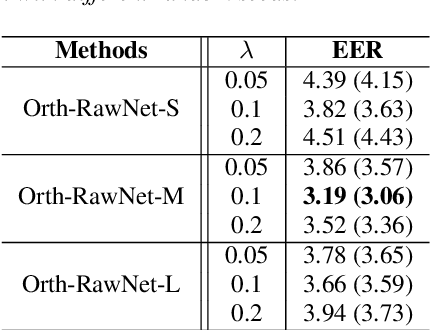

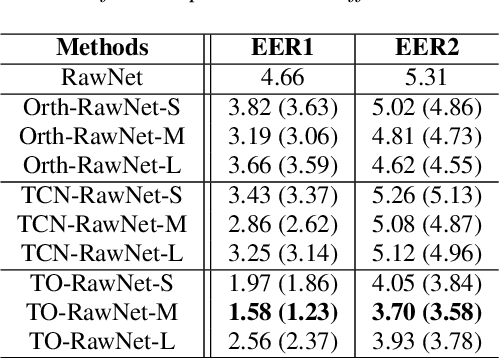

Current fake audio detection relies on hand-crafted features, which lose information during extraction. To overcome this, recent studies extract features directly from raw audio signals. For example, RawNet is one of the representative works in end-to-end fake audio detection. However, existing work on RawNet does not optimize the parameters of the Sinc-conv during training, which limits its performance. In this paper, we propose to incorporate orthogonal convolution into RawNet, which reduces the correlation between filters when optimizing the parameters of Sinc-conv, thus improving discriminability. Additionally, we introduce temporal convolutional networks (TCN) to capture long-term dependencies in speech signals. Experiments on ASVspoof 2019 show that our TO-RawNet system achieves a relative EER reduction of 66.09% in the logical access scenario compared with RawNet, demonstrating its effectiveness in detecting fake audio attacks.
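The idea of reducing correlation between filters can be illustrated with a soft orthogonality penalty. The abstract does not state the exact form of the regularizer, so the sketch below is an assumption: it uses the commonly seen penalty, the squared Frobenius norm of W Wᵀ − I over flattened filters, and the function name `orthogonality_penalty` is hypothetical.

```python
def orthogonality_penalty(filters):
    """Squared Frobenius norm of (W W^T - I), with one flattened filter per row.

    Assumption: a generic soft orthogonality penalty, not necessarily the
    exact regularizer used in the TO-RawNet paper.
    """
    n = len(filters)
    penalty = 0.0
    for i in range(n):
        for j in range(n):
            # Inner product between filter i and filter j (entry of W W^T).
            dot = sum(a * b for a, b in zip(filters[i], filters[j]))
            # Identity target: unit norm on the diagonal, zero correlation elsewhere.
            target = 1.0 if i == j else 0.0
            penalty += (dot - target) ** 2
    return penalty


# Two orthonormal filters incur no penalty; two identical filters do.
orthonormal = [[1.0, 0.0], [0.0, 1.0]]
correlated = [[1.0, 0.0], [1.0, 0.0]]
print(orthogonality_penalty(orthonormal))
print(orthogonality_penalty(correlated))
```

In training, a term like this would typically be added to the classification loss with a small weight, so that filters are pushed apart without overriding the main objective.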

Improving Speech Prosody of Audiobook Text-to-Speech Synthesis with Acoustic and Textual Contexts

Nov 04, 2022

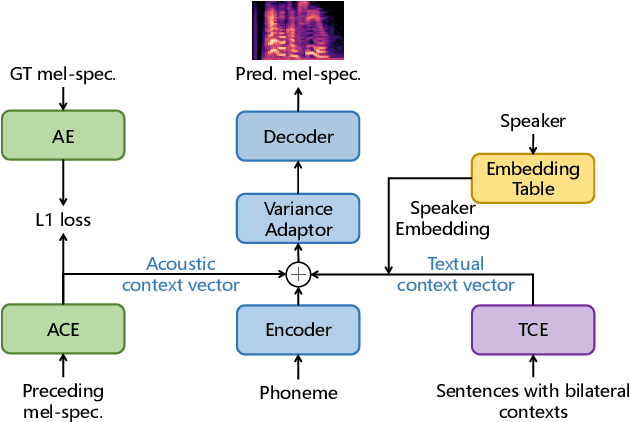

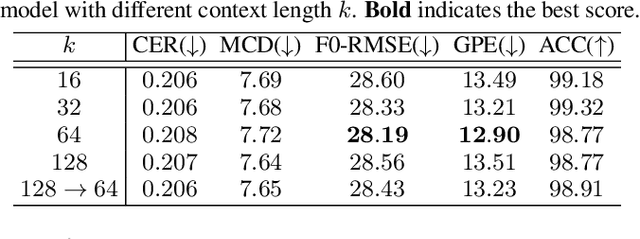

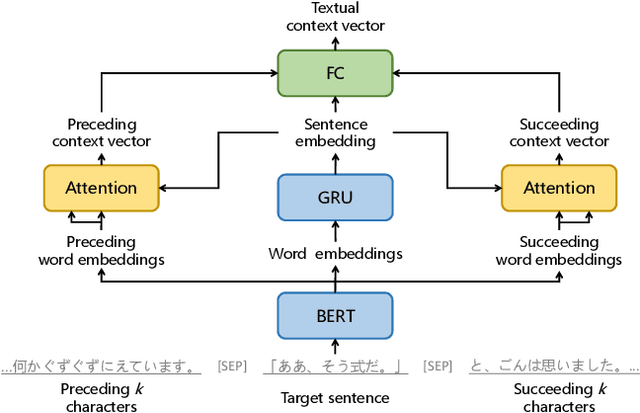

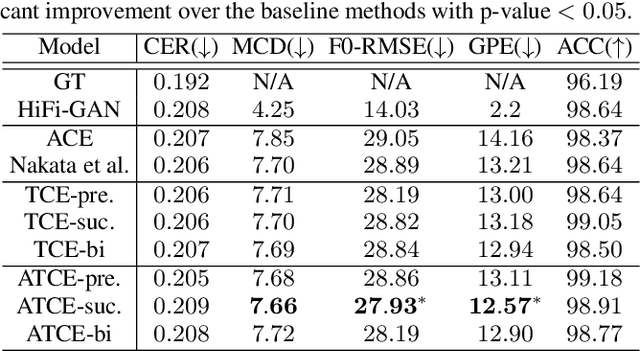

We present a multi-speaker Japanese audiobook text-to-speech (TTS) system that leverages multimodal context information of preceding acoustic context and bilateral textual context to improve the prosody of synthetic speech. Previous work uses either unilateral or single-modality context, which does not fully represent the context information. The proposed method uses an acoustic context encoder and a textual context encoder to aggregate context information and feeds it to the TTS model, which enables the model to predict context-dependent prosody. We conducted comprehensive objective and subjective evaluations on a multi-speaker Japanese audiobook dataset. Experimental results demonstrate that the proposed method significantly outperforms two previous works. Additionally, we present insights about the different choices of context (modalities, lateral information, and length) for audiobook TTS that have not been discussed in the literature before.

Sample-Efficient Unsupervised Domain Adaptation of Speech Recognition Systems: A case study for Modern Greek

Dec 31, 2022

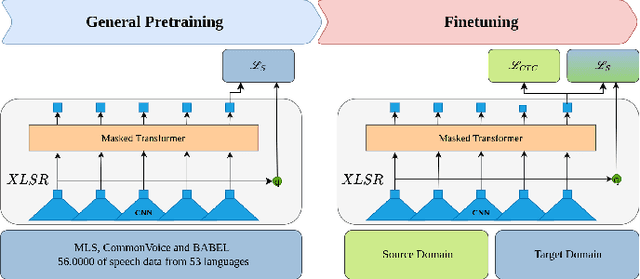

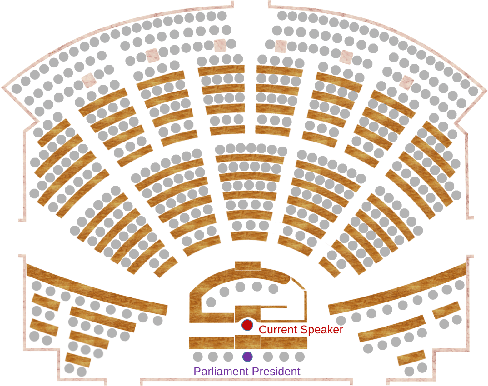

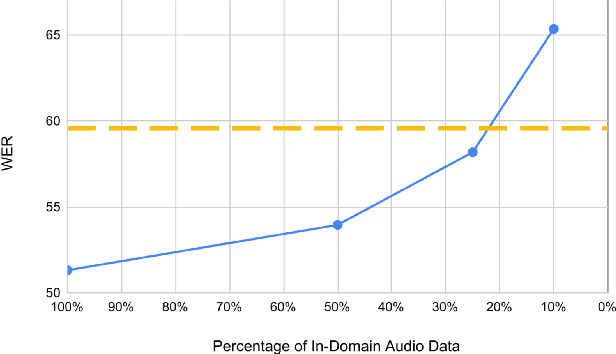

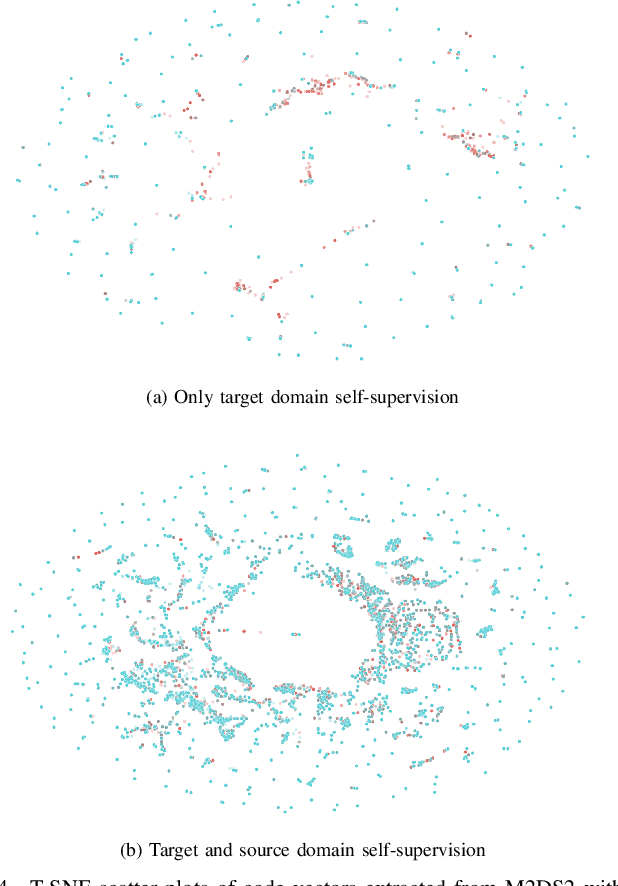

Modern speech recognition systems exhibit rapid performance degradation under domain shift. This issue is especially prevalent in data-scarce settings, such as low-resource languages, where diversity of training data is limited. In this work we propose M2DS2, a simple and sample-efficient finetuning strategy for large pretrained speech models, based on mixed source and target domain self-supervision. We find that including source domain self-supervision stabilizes training and avoids mode collapse of the latent representations. For evaluation, we collect HParl, a 120-hour speech corpus for Greek, consisting of plenary sessions in the Greek Parliament. We merge HParl with two popular Greek corpora to create GREC-MD, a test-bed for multi-domain evaluation of Greek ASR systems. In our experiments we find that, while other Unsupervised Domain Adaptation baselines fail in this resource-constrained environment, M2DS2 yields significant improvements for cross-domain adaptation, even when only a few hours of in-domain audio are available. When we relax the problem to a weakly supervised setting, we find that independent adaptation for audio using M2DS2 and language using simple LM augmentation techniques is particularly effective, yielding word error rates comparable to the fully supervised baselines.

AudioDec: An Open-source Streaming High-fidelity Neural Audio Codec

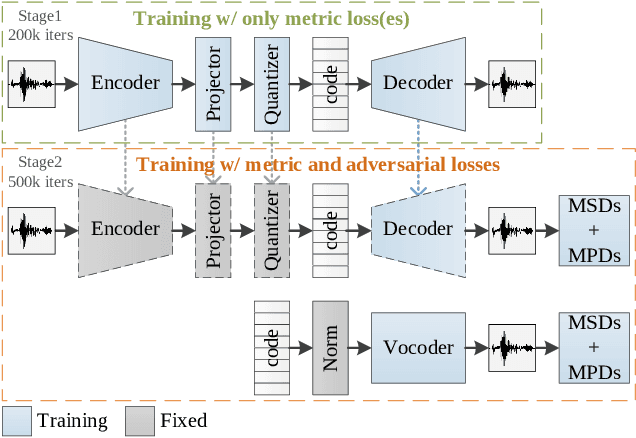

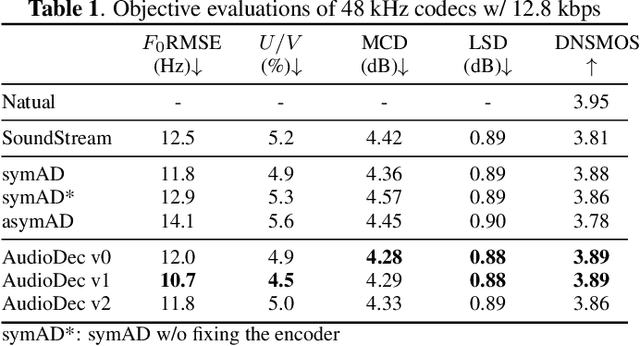

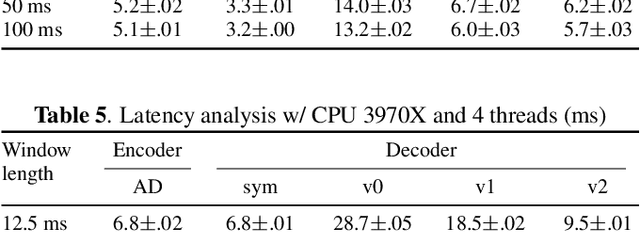

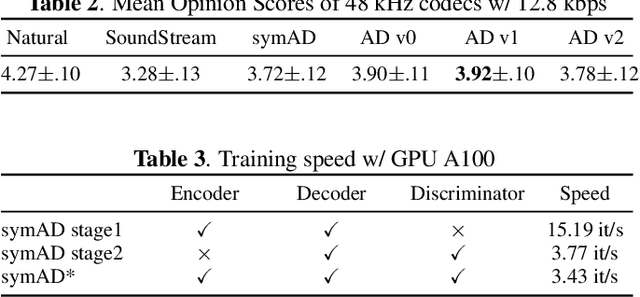

May 26, 2023

A good audio codec for live applications such as telecommunication is characterized by three key properties: (1) compression, i.e., the bitrate that is required to transmit the signal should be as low as possible; (2) latency, i.e., encoding and decoding the signal needs to be fast enough to enable communication with no or only minimal noticeable delay; and (3) reconstruction quality of the signal. In this work, we propose an open-source, streamable, and real-time neural audio codec that achieves strong performance along all three axes: it can reconstruct highly natural sounding 48 kHz speech signals while operating at only 12 kbps and running with less than 6 ms (GPU)/10 ms (CPU) latency. An efficient training paradigm is also demonstrated for developing such neural audio codecs for real-world scenarios. Both objective and subjective evaluations using the VCTK corpus are provided. To sum up, AudioDec is a well-developed plug-and-play benchmark for audio codec applications.
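To put the 12 kbps figure in context, the short calculation below compares it with uncompressed PCM at the same sampling rate. The 16-bit mono PCM baseline is an assumption made for illustration; the abstract does not state the reference format, and the helper name `pcm_bitrate_kbps` is hypothetical.

```python
def pcm_bitrate_kbps(sample_rate_hz, bits_per_sample=16, channels=1):
    """Bitrate of uncompressed PCM audio in kbps (1 kbps = 1000 bits/s).

    Assumption: 16-bit mono is used as the uncompressed baseline.
    """
    return sample_rate_hz * bits_per_sample * channels / 1000


codec_kbps = 12                       # bitrate reported in the abstract
raw_kbps = pcm_bitrate_kbps(48_000)   # 48 kHz, as in the abstract
compression_ratio = raw_kbps / codec_kbps

print(f"raw PCM: {raw_kbps} kbps, compression ratio: {compression_ratio}x")
```

Under this assumption the raw stream is 768 kbps, so the codec transmits roughly 1/64 of the uncompressed bitrate.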

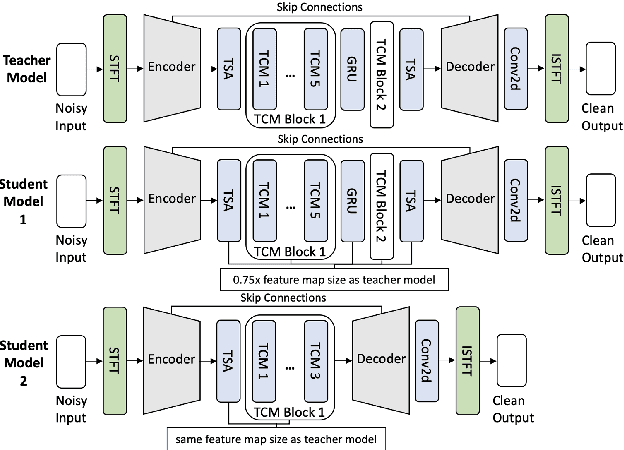

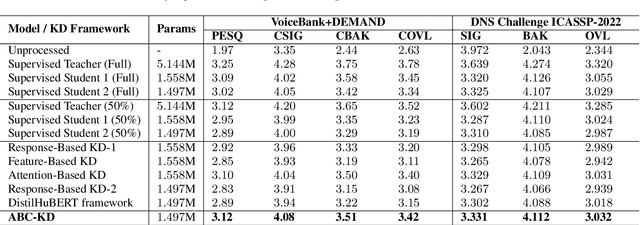

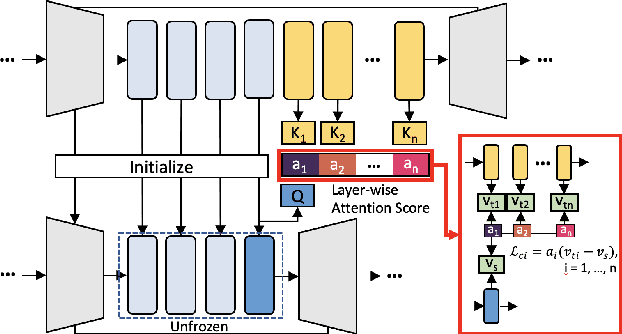

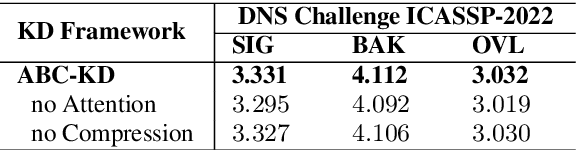

ABC-KD: Attention-Based-Compression Knowledge Distillation for Deep Learning-Based Noise Suppression

May 26, 2023

Noise suppression (NS) models have been widely applied to enhance speech quality. Recently, deep learning-based NS, which we denote as Deep Noise Suppression (DNS), has become the mainstream NS method because it outperforms traditional approaches. However, DNS models face two major challenges in supporting real-world applications. First, high-performing DNS models are usually large, which makes deployment difficult. Second, DNS models require extensive training data, including noisy audio as inputs and clean audio as labels, and clean labels are often difficult to obtain. We propose the use of knowledge distillation (KD) to resolve both challenges. Our study serves two main purposes. First, we are among the first to comprehensively investigate mainstream KD techniques on DNS models to resolve the two challenges. Second, we propose a novel Attention-Based-Compression KD method that outperforms all investigated mainstream KD frameworks on the DNS task.

A Whisper transformer for audio captioning trained with synthetic captions and transfer learning

May 15, 2023

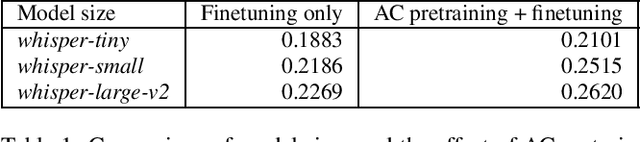

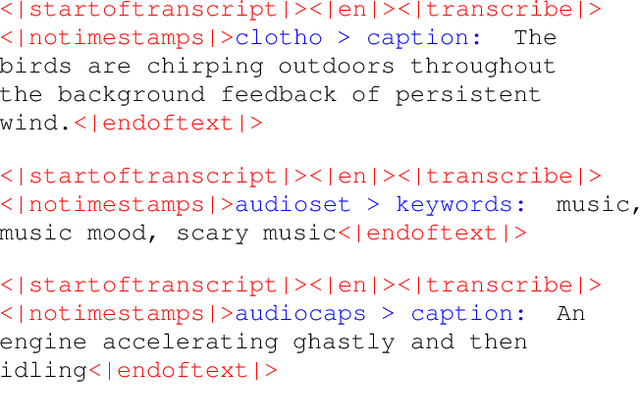

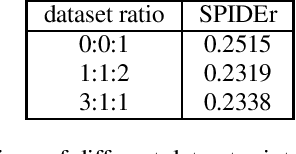

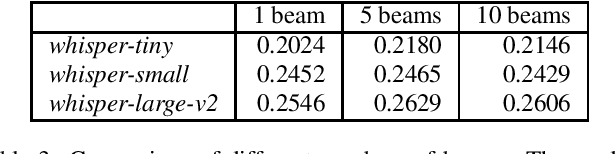

The field of audio captioning has seen significant advancements in recent years, driven by the availability of large-scale audio datasets and advancements in deep learning techniques. In this technical report, we present our approach to audio captioning, focusing on the use of a pretrained speech-to-text Whisper model and pretraining on synthetic captions. We discuss our training procedures and present our experiments' results, which include model size variations, dataset mixtures, and other hyperparameters. Our findings demonstrate the impact of different training strategies on the performance of the audio captioning model. Our code and trained models are publicly available on GitHub and Hugging Face Hub.

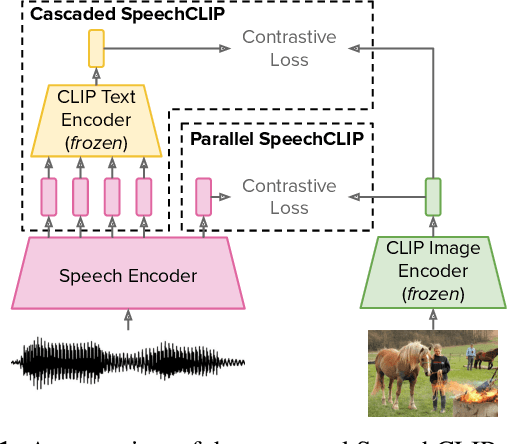

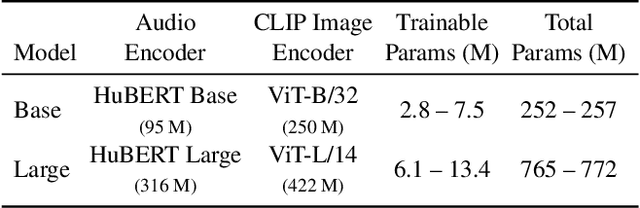

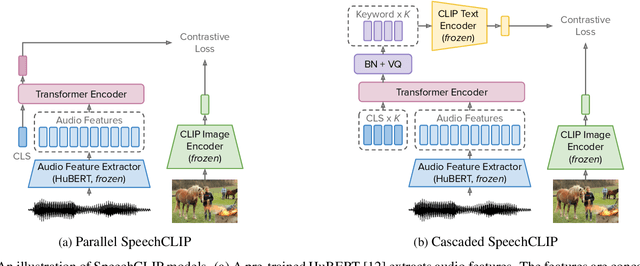

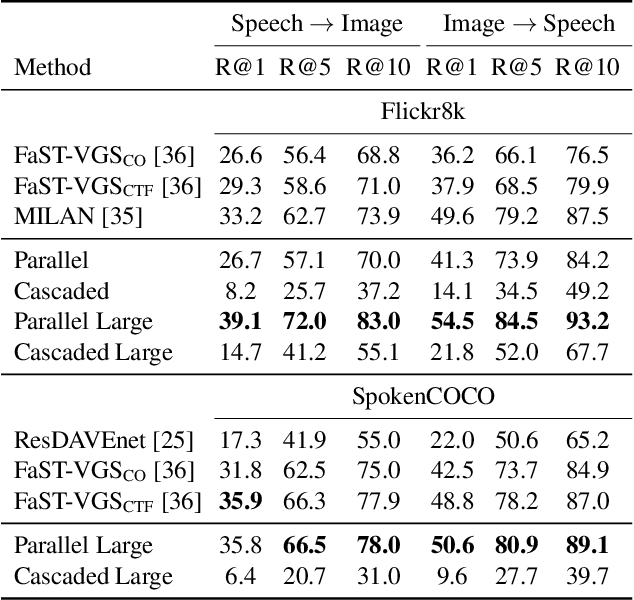

SpeechCLIP: Integrating Speech with Pre-Trained Vision and Language Model

Oct 03, 2022

Data-driven speech processing models usually perform well with a large amount of text supervision, but collecting transcribed speech data is costly. Therefore, we propose SpeechCLIP, a novel framework bridging speech and text through images to enhance speech models without transcriptions. We leverage state-of-the-art pre-trained HuBERT and CLIP, aligning them via paired images and spoken captions with minimal fine-tuning. SpeechCLIP outperforms prior state-of-the-art on image-speech retrieval and performs zero-shot speech-text retrieval without direct supervision from transcriptions. Moreover, SpeechCLIP can directly retrieve semantically related keywords from speech.

Quran Recitation Recognition using End-to-End Deep Learning

May 10, 2023

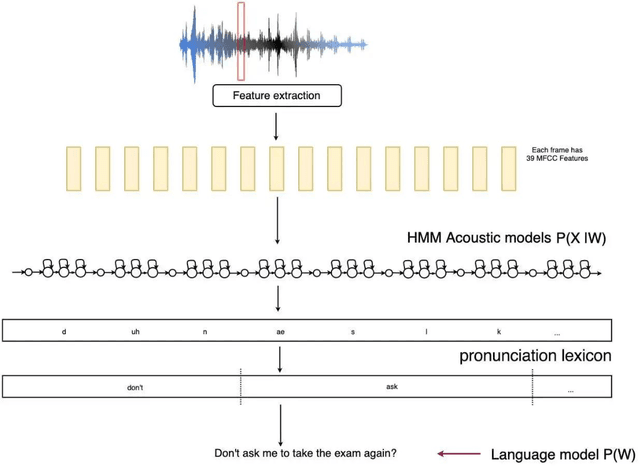

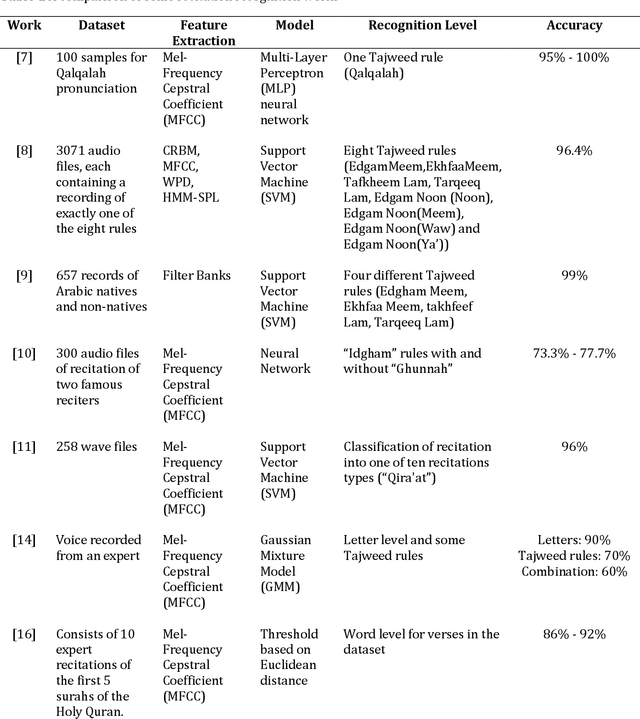

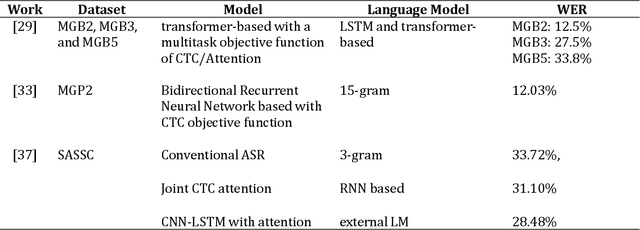

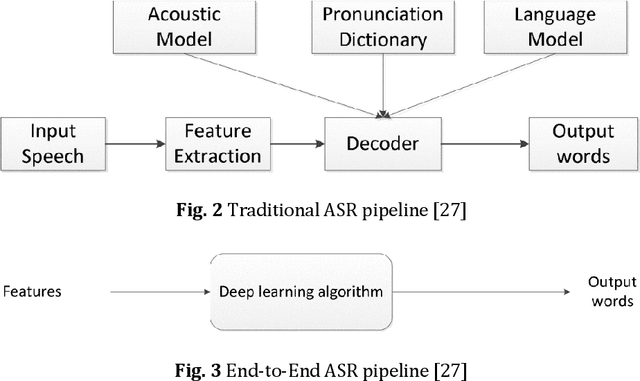

The Quran is the holy scripture of Islam, and its recitation is an important aspect of the religion. Recognizing the recitation of the Holy Quran automatically is a challenging task because of its unique rules, which do not apply to ordinary speech. Much research has been done in this domain, but previous works have either treated recitation error detection as a classification task or used traditional automatic speech recognition (ASR). In this paper, we propose a novel end-to-end deep learning model for recognizing the recitation of the Holy Quran. The proposed model is a CNN-Bidirectional GRU encoder that uses CTC as an objective function, and a character-based beam search decoder. Moreover, all previous works were done on small private datasets consisting of short verses and a few chapters of the Holy Quran. Because these datasets were private, no comparisons were made. To overcome this issue, we used a recently published public dataset (Ar-DAD) that contains about 37 chapters recited by 30 reciters, with different recitation speeds and different types of pronunciation rules. The performance of the proposed model was evaluated using the most common evaluation metrics in speech recognition: word error rate (WER) and character error rate (CER). The results were 8.34% WER and 2.42% CER. We hope this research will serve as a baseline for comparisons with future research on this new public dataset (Ar-DAD).
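For readers unfamiliar with the two metrics, WER and CER are usually computed as the Levenshtein edit distance between reference and hypothesis, divided by the reference length, over words and characters respectively. The sketch below follows that standard definition; it is not the authors' evaluation script, and the function names and example strings are hypothetical.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance between two sequences (substitution, insertion, deletion)."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def wer(reference, hypothesis):
    """Word error rate: word-level edit distance over the reference word count."""
    ref_words = reference.split()
    if not ref_words:
        raise ValueError("reference must contain at least one word")
    return edit_distance(ref_words, hypothesis.split()) / len(ref_words)


def cer(reference, hypothesis):
    """Character error rate: character-level edit distance over the reference length."""
    if not reference:
        raise ValueError("reference must not be empty")
    return edit_distance(reference, hypothesis) / len(reference)


# One substituted word out of three; one substituted character out of eleven.
print(wer("the cat sat", "the cat sit"))
print(cer("the cat sat", "the cat sit"))
```

Because a single wrong character makes the whole word count as an error, WER is normally higher than CER on the same output, which matches the gap between the two figures reported above.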

McNet: Fuse Multiple Cues for Multichannel Speech Enhancement

Nov 16, 2022

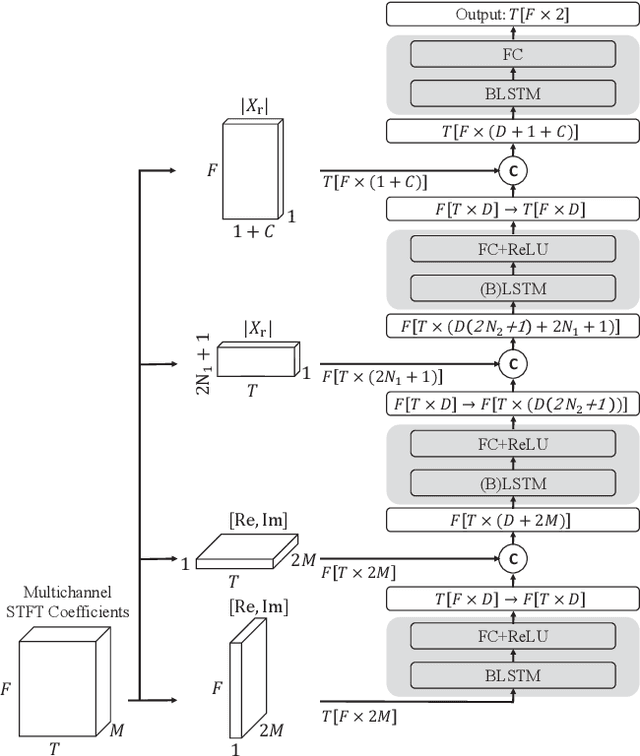

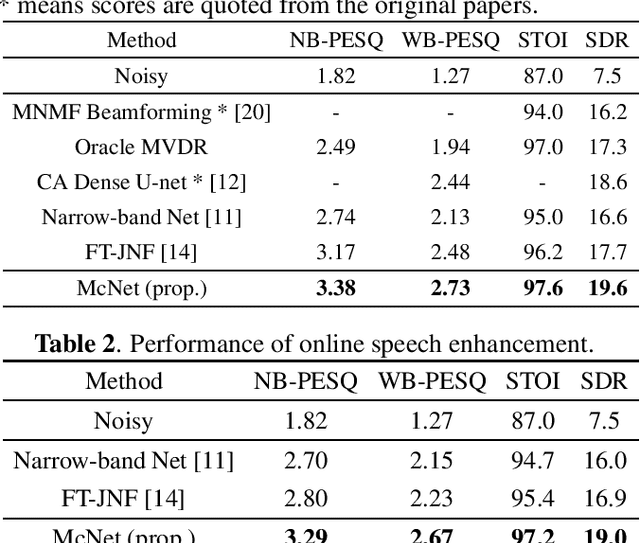

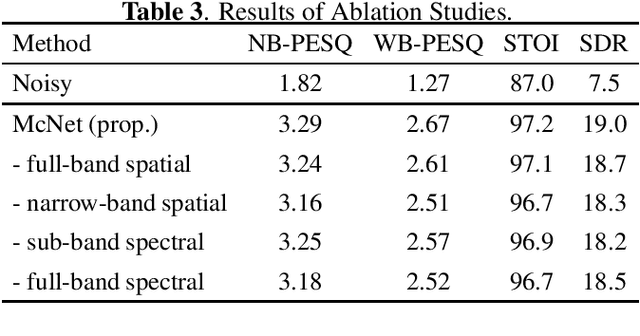

In multichannel speech enhancement, both spectral and spatial information are vital for discriminating between speech and noise. How to fully exploit these two types of information and their temporal dynamics remains an interesting research problem. As a solution to this problem, this paper proposes a multi-cue fusion network named McNet, which cascades four modules to respectively exploit the full-band spatial, narrow-band spatial, sub-band spectral, and full-band spectral information. Experiments show that each module in the proposed network has its unique contribution and, as a whole, notably outperforms other state-of-the-art methods.

HuBERT-TR: Reviving Turkish Automatic Speech Recognition with Self-supervised Speech Representation Learning

Oct 13, 2022

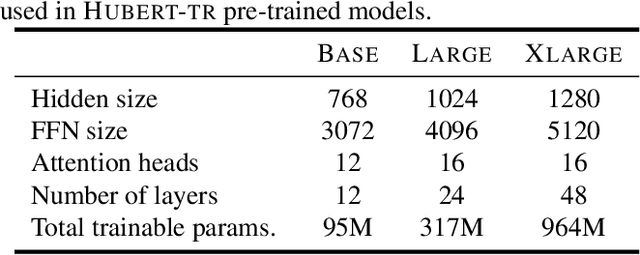

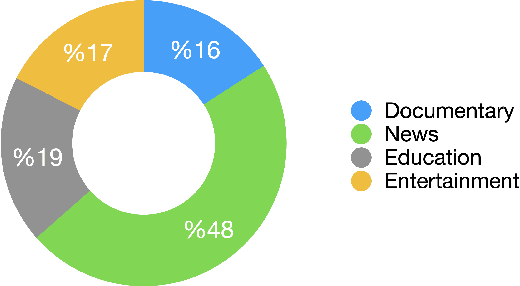

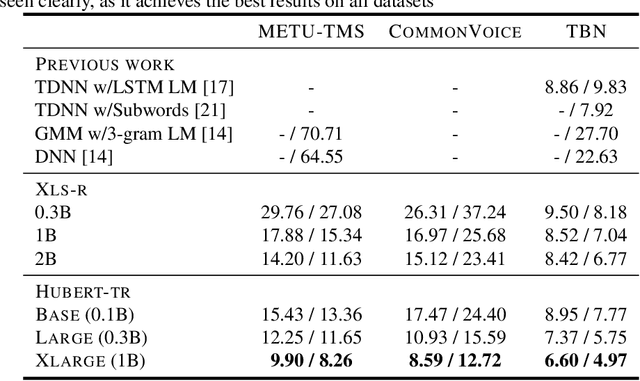

While the Turkish language is listed among low-resource languages, literature on Turkish automatic speech recognition (ASR) is relatively old. In this paper, we present HuBERT-TR, a speech representation model for Turkish based on HuBERT. HuBERT-TR achieves state-of-the-art results on several Turkish ASR datasets. We investigate pre-training HuBERT for Turkish with large-scale data curated from online resources. We pre-train HuBERT-TR using over 6,500 hours of speech data curated from YouTube that includes extensive variability in terms of quality and genre. We show that pre-trained models within a multi-lingual setup are inferior to language-specific models: our Turkish model HuBERT-TR base performs better than its 10 times larger multi-lingual counterpart XLS-R-1B. Moreover, we study the effect of scaling on ASR performance by scaling our models up to 1B parameters. Our best model yields a state-of-the-art word error rate of 4.97% on the Turkish Broadcast News dataset. Models are available at huggingface.co/asafaya.
