"facial recognition": models, code, and papers

Gradient Attention Balance Network: Mitigating Face Recognition Racial Bias via Gradient Attention

Apr 05, 2023

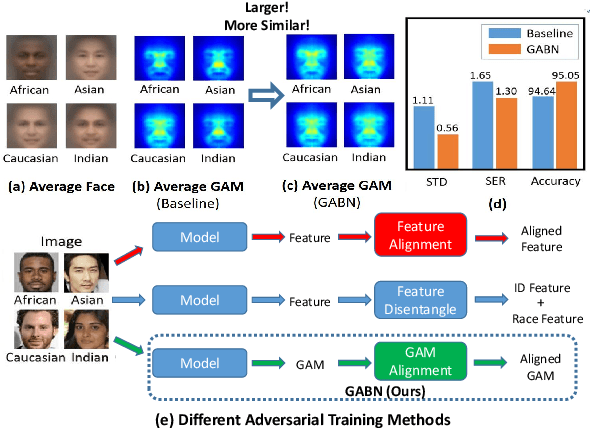

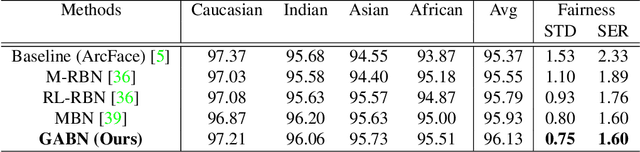

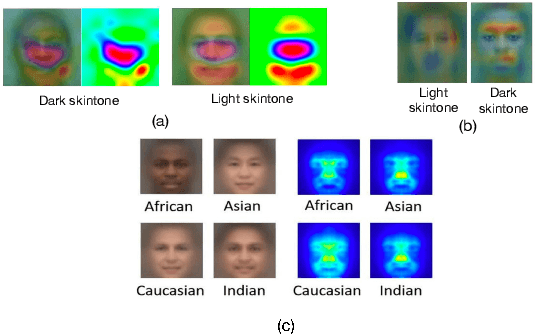

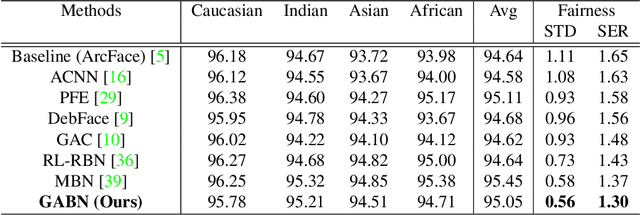

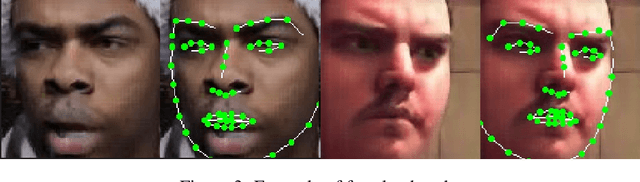

Although face recognition has made impressive progress in recent years, we ignore the racial bias of the recognition system when we pursue a high level of accuracy. Previous work found that for different races, face recognition networks focus on different facial regions, and the sensitive regions of darker-skinned people are much smaller. Based on this discovery, we propose a new de-bias method based on gradient attention, called Gradient Attention Balance Network (GABN). Specifically, we use the gradient attention map (GAM) of the face recognition network to track the sensitive facial regions and make the GAMs of different races tend to be consistent through adversarial learning. This method mitigates the bias by making the network focus on similar facial regions. In addition, we also use masks to erase the Top-N sensitive facial regions, forcing the network to allocate its attention to a larger facial region. This method expands the sensitive region of darker-skinned people and further reduces the gap between GAM of darker-skinned people and GAM of Caucasians. Extensive experiments show that GABN successfully mitigates racial bias in face recognition and learns more balanced performance for people of different races.

Learn-to-Decompose: Cascaded Decomposition Network for Cross-Domain Few-Shot Facial Expression Recognition

Jul 16, 2022

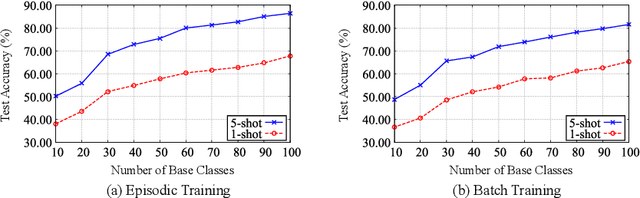

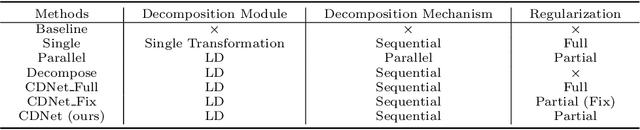

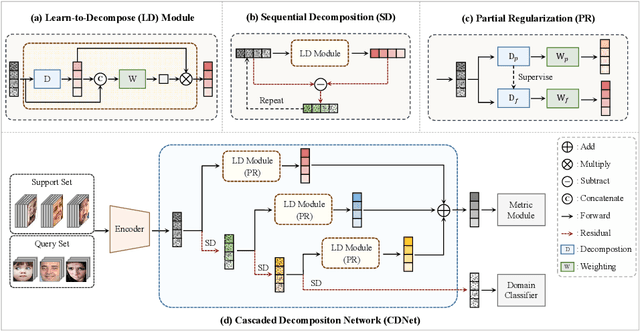

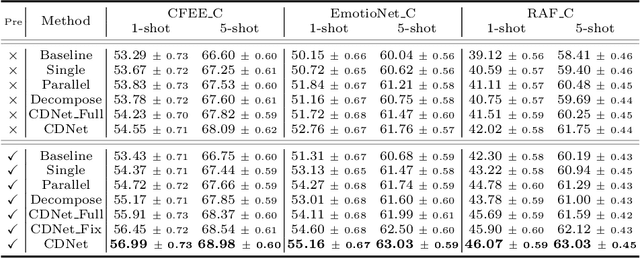

Most existing compound facial expression recognition (FER) methods rely on large-scale labeled compound expression data for training. However, collecting such data is labor-intensive and time-consuming. In this paper, we address the compound FER task in the cross-domain few-shot learning (FSL) setting, which requires only a few samples of compound expressions in the target domain. Specifically, we propose a novel cascaded decomposition network (CDNet), which cascades several learn-to-decompose modules with shared parameters based on a sequential decomposition mechanism, to obtain a transferable feature space. To alleviate the overfitting problem caused by limited base classes in our task, a partial regularization strategy is designed to effectively exploit the best of both episodic training and batch training. By training across similar tasks on multiple basic expression datasets, CDNet learns the ability of learn-to-decompose that can be easily adapted to identify unseen compound expressions. Extensive experiments on both in-the-lab and in-the-wild compound expression datasets demonstrate the superiority of our proposed CDNet against several state-of-the-art FSL methods. Code is available at: https://github.com/zouxinyi0625/CDNet.

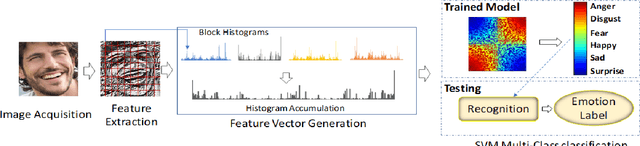

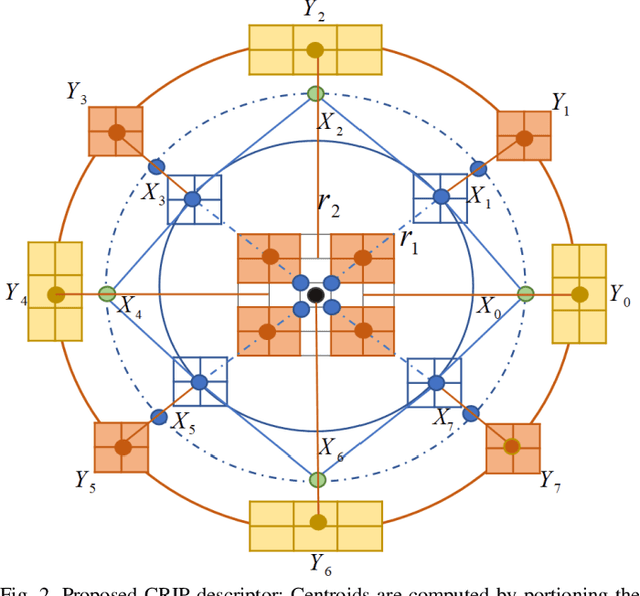

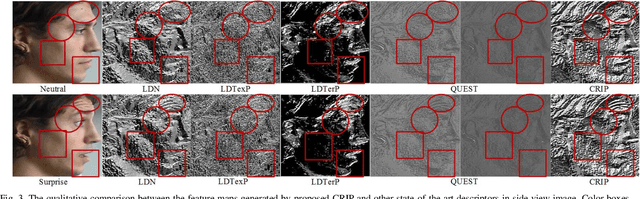

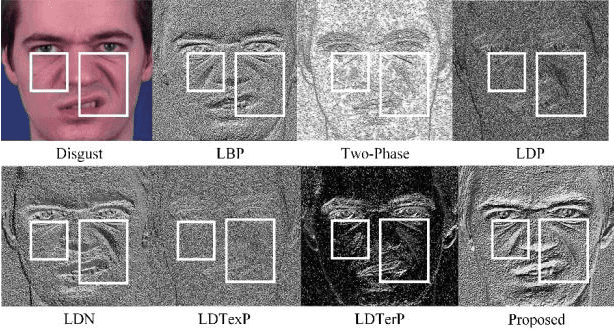

Cross-Centroid Ripple Pattern for Facial Expression Recognition

Jan 16, 2022

In this paper, we propose a new feature descriptor Cross-Centroid Ripple Pattern (CRIP) for facial expression recognition. CRIP encodes the transitional pattern of a facial expression by incorporating cross-centroid relationship between two ripples located at radius r1 and r2 respectively. These ripples are generated by dividing the local neighborhood region into subregions. Thus, CRIP has ability to preserve macro and micro structural variations in an extensive region, which enables it to deal with side views and spontaneous expressions. Furthermore, gradient information between cross centroid ripples provides strenght to captures prominent edge features in active patches: eyes, nose and mouth, that define the disparities between different facial expressions. Cross centroid information also provides robustness to irregular illumination. Moreover, CRIP utilizes the averaging behavior of pixels at subregions that yields robustness to deal with noisy conditions. The performance of proposed descriptor is evaluated on seven comprehensive expression datasets consisting of challenging conditions such as age, pose, ethnicity and illumination variations. The experimental results show that our descriptor consistently achieved better accuracy rate as compared to existing state-of-art approaches.

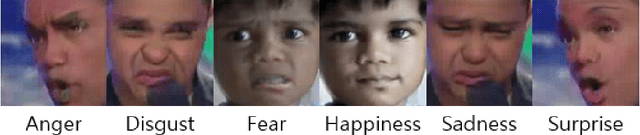

GANonymization: A GAN-based Face Anonymization Framework for Preserving Emotional Expressions

May 03, 2023

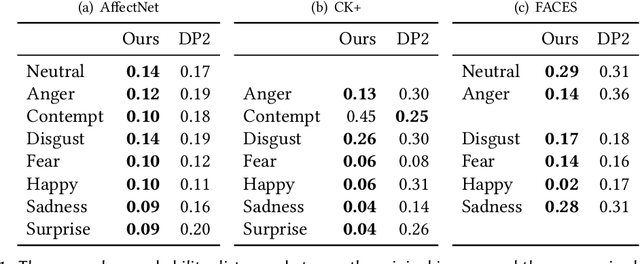

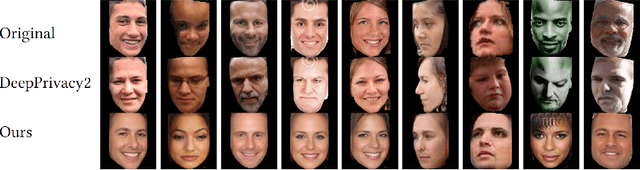

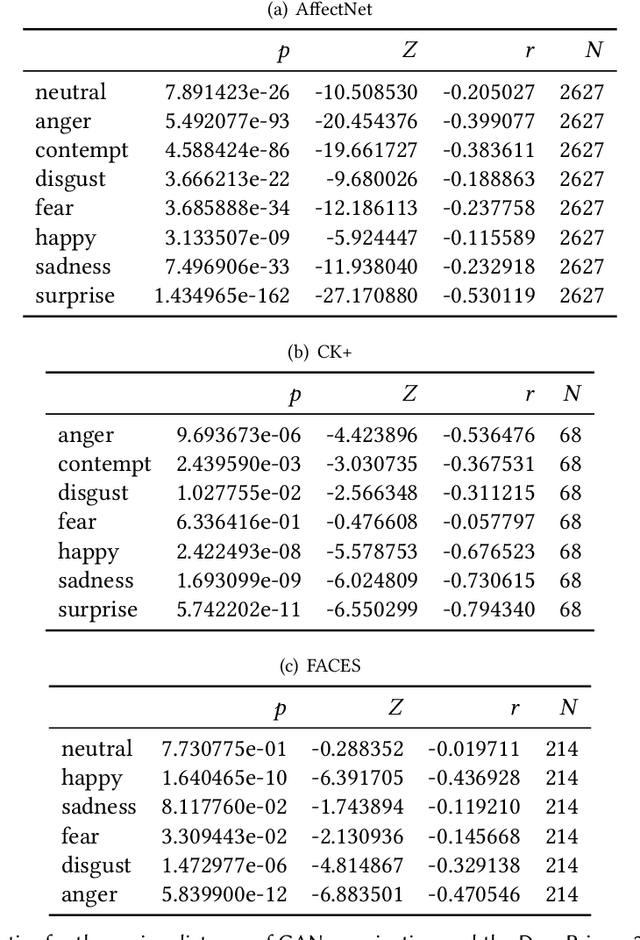

In recent years, the increasing availability of personal data has raised concerns regarding privacy and security. One of the critical processes to address these concerns is data anonymization, which aims to protect individual privacy and prevent the release of sensitive information. This research focuses on the importance of face anonymization. Therefore, we introduce GANonymization, a novel face anonymization framework with facial expression-preserving abilities. Our approach is based on a high-level representation of a face which is synthesized into an anonymized version based on a generative adversarial network (GAN). The effectiveness of the approach was assessed by evaluating its performance in removing identifiable facial attributes to increase the anonymity of the given individual face. Additionally, the performance of preserving facial expressions was evaluated on several affect recognition datasets and outperformed the state-of-the-art method in most categories. Finally, our approach was analyzed for its ability to remove various facial traits, such as jewelry, hair color, and multiple others. Here, it demonstrated reliable performance in removing these attributes. Our results suggest that GANonymization is a promising approach for anonymizing faces while preserving facial expressions.

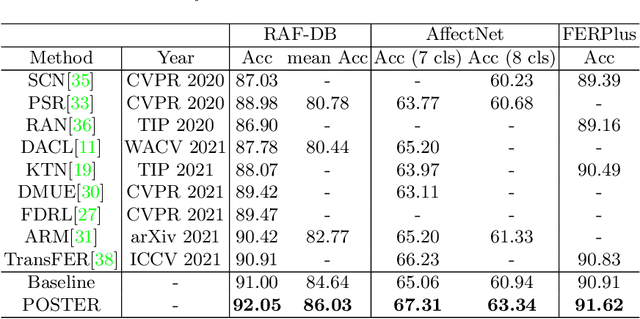

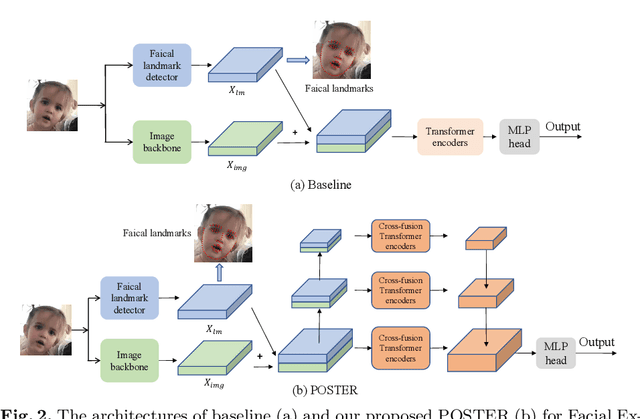

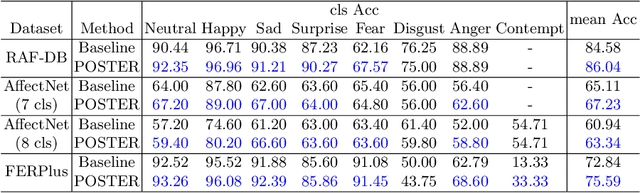

POSTER: A Pyramid Cross-Fusion Transformer Network for Facial Expression Recognition

Apr 08, 2022

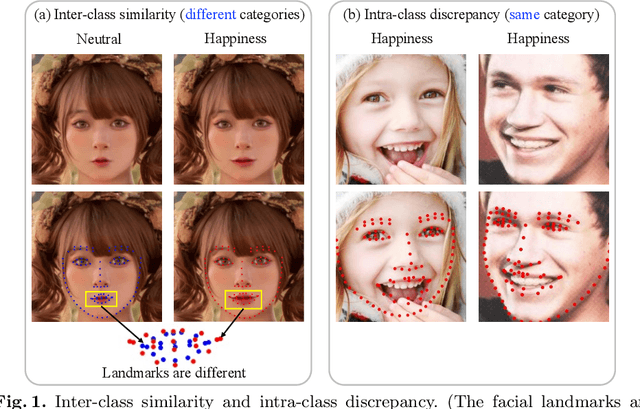

Facial Expression Recognition (FER) has received increasing interest in the computer vision community. As a challenging task, there are three key issues especially prevalent in FER: inter-class similarity, intra-class discrepancy, and scale sensitivity. Existing methods typically address some of these issues, but do not tackle them all in a unified framework. Therefore, in this paper, we propose a two-stream Pyramid crOss-fuSion TransformER network (POSTER) that aims to holistically solve these issues. Specifically, we design a transformer-based cross-fusion paradigm that enables effective collaboration of facial landmark and direct image features to maximize proper attention to salient facial regions. Furthermore, POSTER employs a pyramid structure to promote scale invariance. Extensive experimental results demonstrate that our POSTER outperforms SOTA methods on RAF-DB with 92.05%, FERPlus with 91.62%, AffectNet (7 cls) with 67.31%, and AffectNet (8 cls) with 63.34%, respectively.

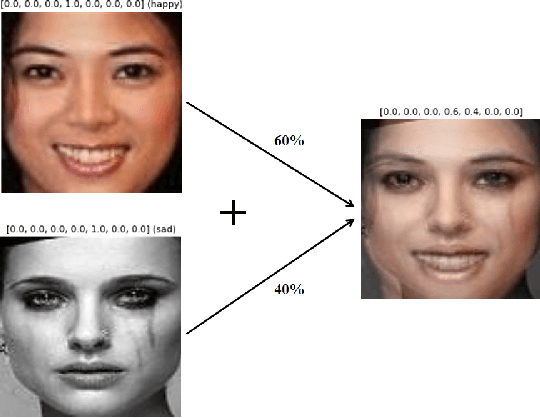

MixAugment & Mixup: Augmentation Methods for Facial Expression Recognition

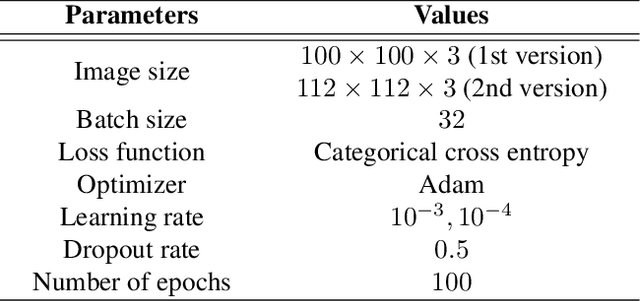

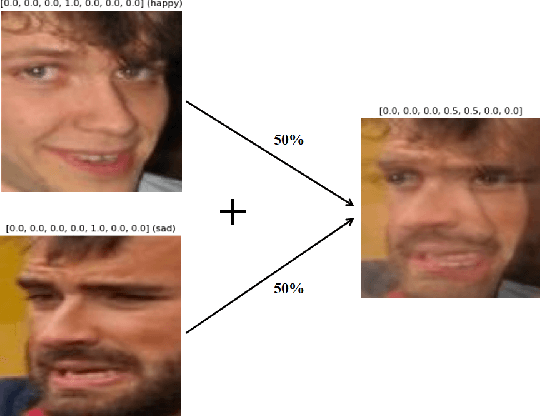

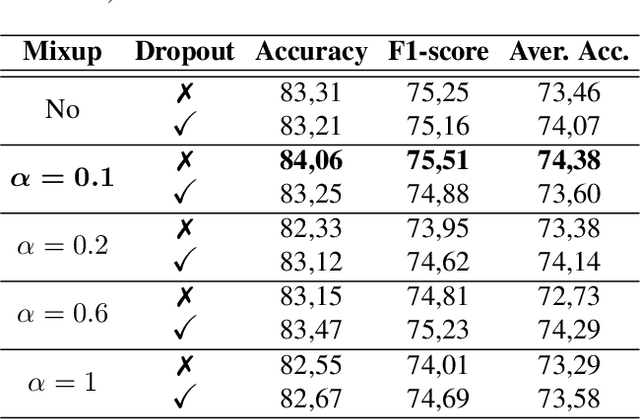

May 09, 2022

Automatic Facial Expression Recognition (FER) has attracted increasing attention in the last 20 years since facial expressions play a central role in human communication. Most FER methodologies utilize Deep Neural Networks (DNNs) that are powerful tools when it comes to data analysis. However, despite their power, these networks are prone to overfitting, as they often tend to memorize the training data. What is more, there are not currently a lot of in-the-wild (i.e. in unconstrained environment) large databases for FER. To alleviate this issue, a number of data augmentation techniques have been proposed. Data augmentation is a way to increase the diversity of available data by applying constrained transformations on the original data. One such technique, which has positively contributed to various classification tasks, is Mixup. According to this, a DNN is trained on convex combinations of pairs of examples and their corresponding labels. In this paper, we examine the effectiveness of Mixup for in-the-wild FER in which data have large variations in head poses, illumination conditions, backgrounds and contexts. We then propose a new data augmentation strategy which is based on Mixup, called MixAugment. According to this, the network is trained concurrently on a combination of virtual examples and real examples; all these examples contribute to the overall loss function. We conduct an extensive experimental study that proves the effectiveness of MixAugment over Mixup and various state-of-the-art methods. We further investigate the combination of dropout with Mixup and MixAugment, as well as the combination of other data augmentation techniques with MixAugment.

Fuzzy and entropy facial recognition

Aug 24, 2014This paper suggests an effective method for facial recognition using fuzzy theory and Shannon entropy. Combination of fuzzy theory and Shannon entropy eliminates the complication of other methods. Shannon entropy calculates the ratio of an element between faces, and fuzzy theory calculates the member ship of the entropy with 1. More details will be mentioned in Section 3. The learning performance is better than others as it is very simple, and only need two data per learning. By using factors that don't usually change during the life, the method will have a high accuracy.

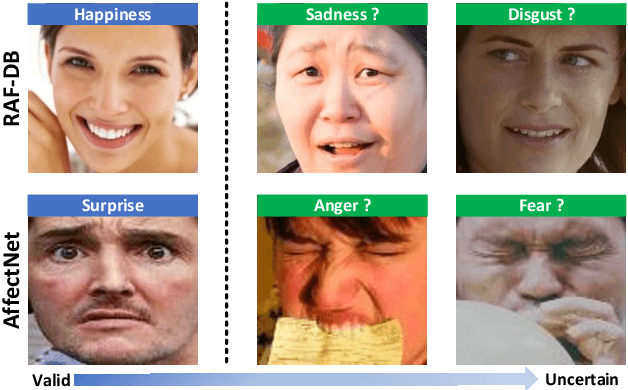

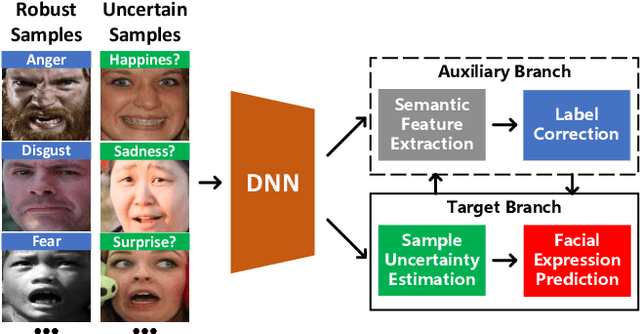

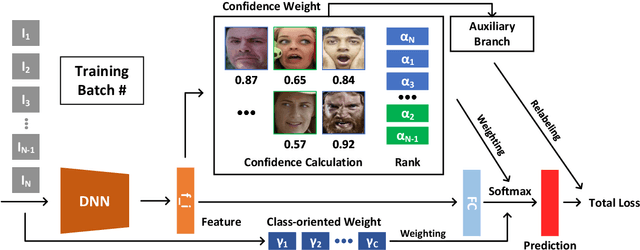

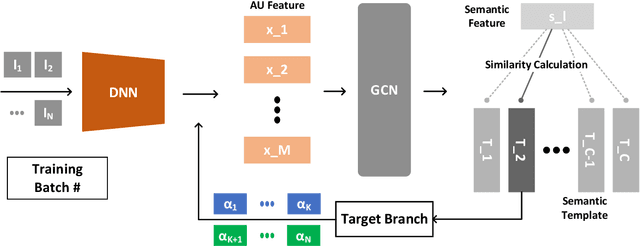

Uncertain Label Correction via Auxiliary Action Unit Graphs for Facial Expression Recognition

Apr 23, 2022

High-quality annotated images are significant to deep facial expression recognition (FER) methods. However, uncertain labels, mostly existing in large-scale public datasets, often mislead the training process. In this paper, we achieve uncertain label correction of facial expressions using auxiliary action unit (AU) graphs, called ULC-AG. Specifically, a weighted regularization module is introduced to highlight valid samples and suppress category imbalance in every batch. Based on the latent dependency between emotions and AUs, an auxiliary branch using graph convolutional layers is added to extract the semantic information from graph topologies. Finally, a re-labeling strategy corrects the ambiguous annotations by comparing their feature similarities with semantic templates. Experiments show that our ULC-AG achieves 89.31% and 61.57% accuracy on RAF-DB and AffectNet datasets, respectively, outperforming the baseline and state-of-the-art methods.

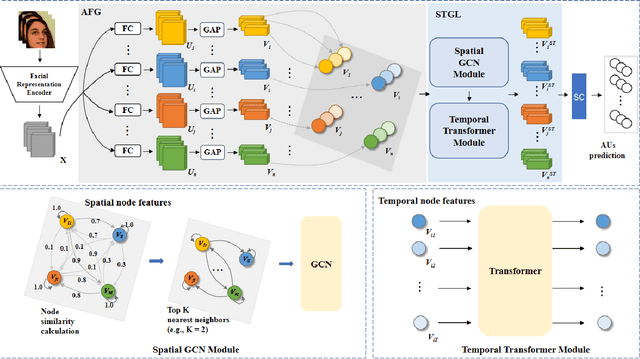

Spatio-Temporal AU Relational Graph Representation Learning For Facial Action Units Detection

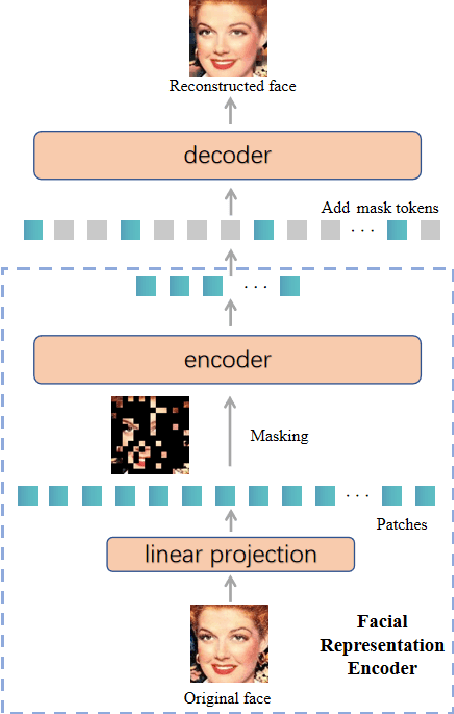

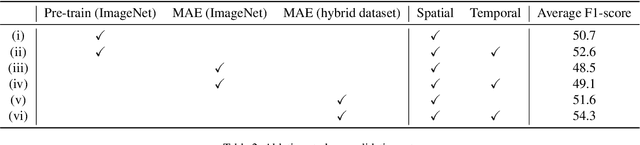

Mar 27, 2023

This paper presents our Facial Action Units (AUs) recognition submission to the fifth Affective Behavior Analysis in-the-wild Competition (ABAW). Our approach consists of three main modules: (i) a pre-trained facial representation encoder which produce a strong facial representation from each input face image in the input sequence; (ii) an AU-specific feature generator that specifically learns a set of AU features from each facial representation; and (iii) a spatio-temporal graph learning module that constructs a spatio-temporal graph representation. This graph representation describes AUs contained in all frames and predicts the occurrence of each AU based on both the modeled spatial information within the corresponding face and the learned temporal dynamics among frames. The experimental results show that our approach outperformed the baseline and the spatio-temporal graph representation learning allows our model to generate the best results among all ablated systems. Our model ranks at the 4th place in the AU recognition track at the 5th ABAW Competition.

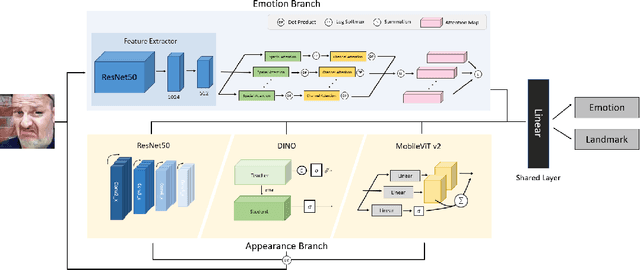

Learning from Synthetic Data: Facial Expression Classification based on Ensemble of Multi-task Networks

Jul 21, 2022

Facial expression in-the-wild is essential for various interactive computing domains. Especially, "Learning from Synthetic Data" (LSD) is an important topic in the facial expression recognition task. In this paper, we propose a multi-task learning-based facial expression recognition approach which consists of emotion and appearance learning branches that can share all face information, and present preliminary results for the LSD challenge introduced in the 4th affective behavior analysis in-the-wild (ABAW) competition. Our method achieved the mean F1 score of 0.71.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge