"Topic Modeling": models, code, and papers

Open vs Closed-ended questions in attitudinal surveys -- comparing, combining, and interpreting using natural language processing

May 03, 2022

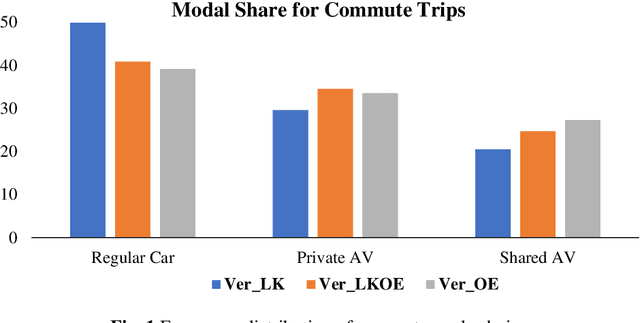

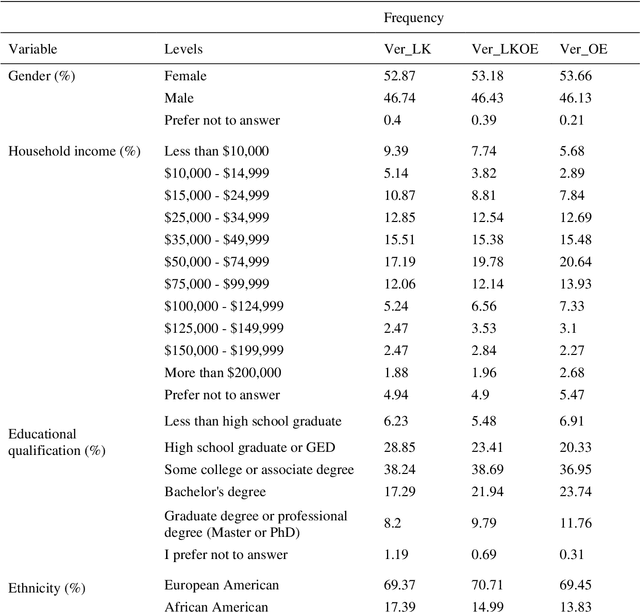

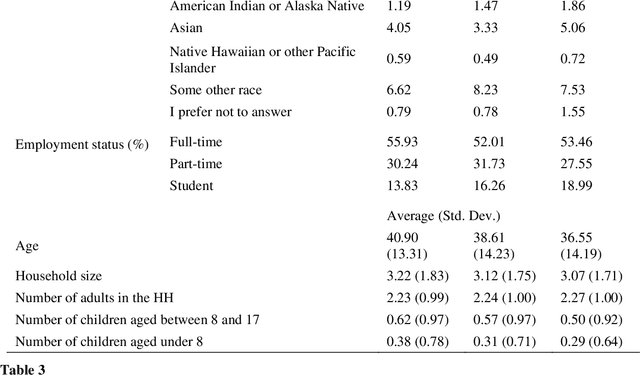

To improve the traveling experience, researchers have been analyzing the role of attitudes in travel behavior modeling. Although most researchers use closed-ended surveys, the appropriate method to measure attitudes is debatable. Topic Modeling could significantly reduce the time needed to extract information from open-ended responses and eliminate subjective bias, thereby alleviating analyst concerns. Our research uses Topic Modeling to extract information from open-ended questions and compares its performance with that of closed-ended responses. Furthermore, some respondents might prefer answering questions using their preferred questionnaire type. We therefore propose a modeling framework that allows respondents to answer the survey using their preferred questionnaire type and enables analysts to use the modeling frameworks of their choice to predict behavior. We demonstrate this using a dataset collected in the USA that measures the intention to use Autonomous Vehicles for commute trips. Respondents were presented with alternative questionnaire versions (open- and closed-ended). Since our objective was also to compare the performance of the alternative questionnaire versions, the survey was designed to eliminate influences resulting from statements, behavioral framework, and the choice experiment. Results indicate that Topic Modeling is suitable for extracting information from open-ended responses; however, the models estimated using the closed-ended questions perform better than those estimated from open-ended responses. In addition, the proposed model performs better than the models currently in use. Furthermore, our proposed framework will allow respondents to choose which questionnaire type to answer, which could be particularly beneficial to them when using voice-based surveys.

Short Text Topic Modeling: Application to tweets about Bitcoin

Mar 17, 2022

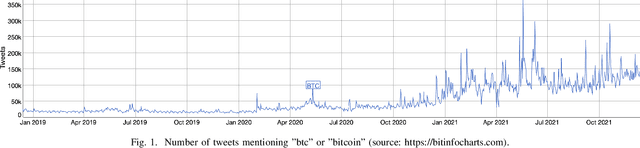

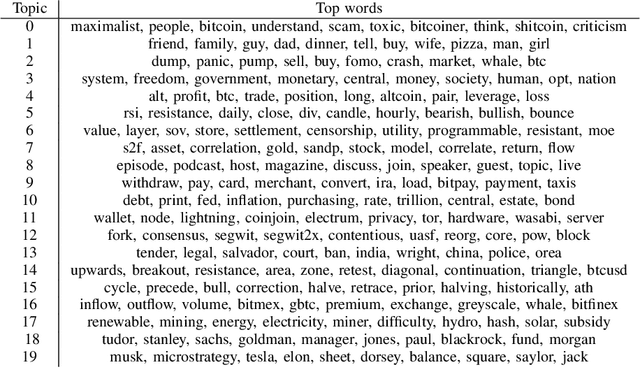

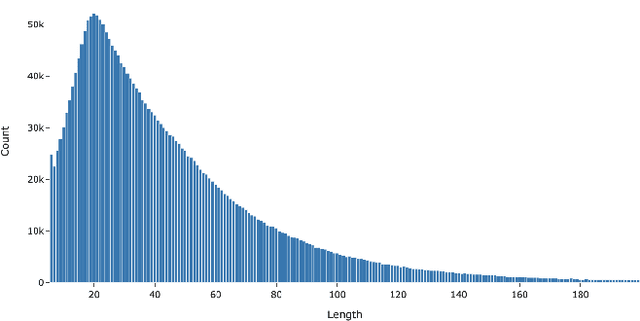

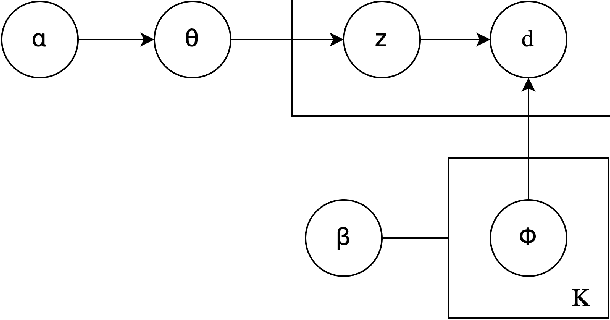

Understanding the semantics of a collection of texts is a challenging task. Topic models are probabilistic models that aim to extract "topics" from a corpus of documents. This task is particularly difficult when the corpus is composed of short texts, such as posts on social networks. Following several previous research papers, we explore in this paper a set of collected tweets about Bitcoin. In this work, we train three topic models and evaluate their output with several scores. We also propose a concrete application of the extracted topics.

Capturing Topic Framing via Masked Language Modeling

Feb 07, 2023

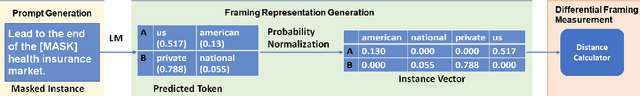

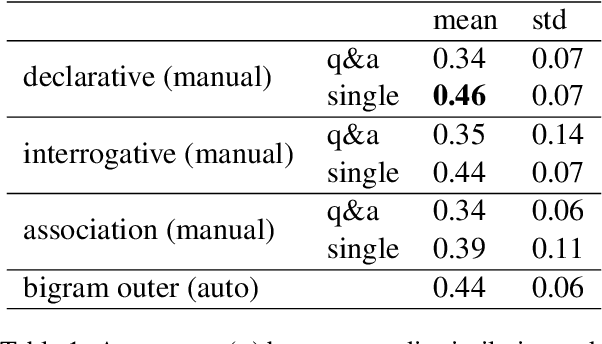

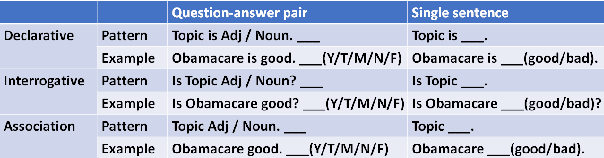

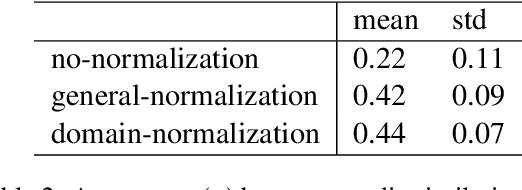

Differential framing of issues can lead to divergent world views on important issues. This is especially true in domains where the information presented can reach a large audience, such as traditional and social media. Scalable and reliable measurement of such differential framing is an important first step in addressing it. In this work, based on the intuition that framing affects the tone and word choices in written language, we propose a framework for modeling the differential framing of issues through masked token prediction via large-scale fine-tuned language models (LMs). Specifically, we explore three key factors for our framework: 1) prompt generation methods for the masked token prediction; 2) methods for normalizing the output of fine-tuned LMs; 3) robustness to the choice of pre-trained LMs used for fine-tuning. Through experiments on a dataset of articles from traditional media outlets covering five diverse and politically polarized topics, we show that our framework can capture differential framing of these topics with high reliability.

* In Findings of EMNLP 2022

Twitter Topic Classification

Sep 20, 2022

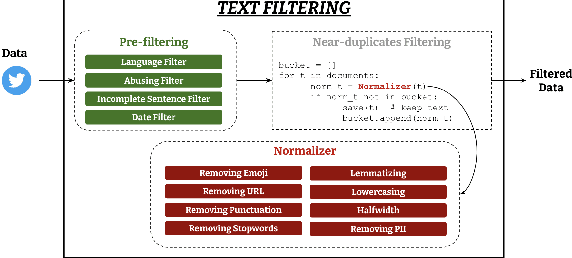

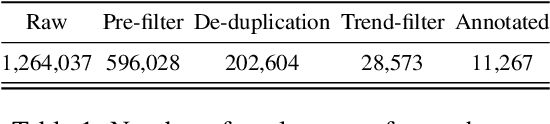

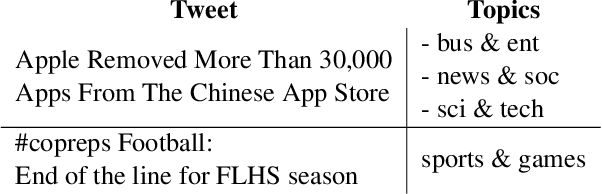

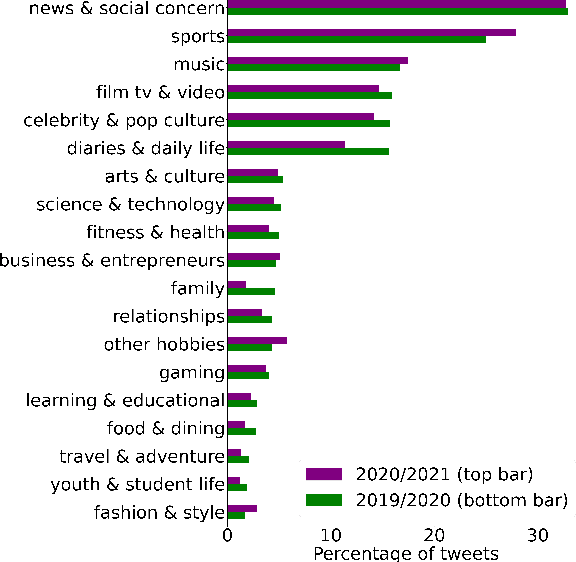

Social media platforms host discussions about a wide variety of topics that arise every day. Making sense of all the content and organising it into categories is an arduous task. A common way to deal with this issue is to rely on topic modeling, but topics discovered using this technique are difficult to interpret and can differ from corpus to corpus. In this paper, we present a new task based on tweet topic classification and release two associated datasets. Given a wide range of topics covering the most important discussion points in social media, we provide training and testing data from recent time periods that can be used to evaluate tweet classification models. Moreover, we perform a quantitative evaluation and analysis of current general- and domain-specific language models on the task, which provides more insight into the challenges and nature of the task.

Topic Modeling of Hierarchical Corpora

Apr 13, 2015

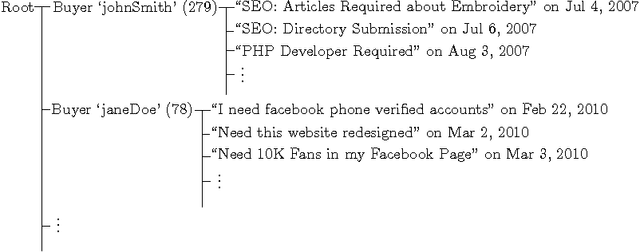

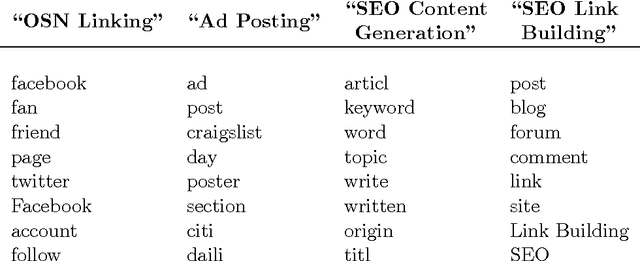

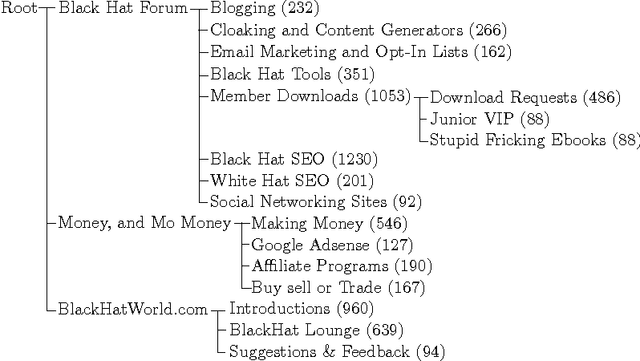

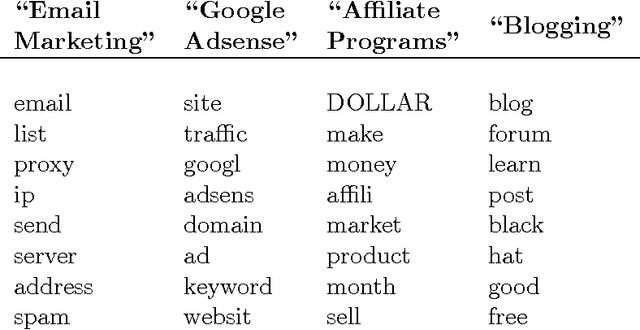

We study the problem of topic modeling in corpora whose documents are organized in a multi-level hierarchy. We explore a parametric approach to this problem, assuming that the number of topics is known or can be estimated by cross-validation. The models we consider can be viewed as special (finite-dimensional) instances of hierarchical Dirichlet processes (HDPs). For these models we show that there exists a simple variational approximation for probabilistic inference. The approximation relies on a previously unexploited inequality that handles the conditional dependence between Dirichlet latent variables in adjacent levels of the model's hierarchy. We compare our approach to existing implementations of nonparametric HDPs. On several benchmarks we find that our approach is faster than Gibbs sampling and able to learn more predictive models than existing variational methods. Finally, we demonstrate the large-scale viability of our approach on two newly available corpora from researchers in computer security: one with 350,000 documents and over 6,000 internal subcategories, the other with a five-level deep hierarchy.

Recommendation System based on Semantic Scholar Mining and Topic modeling: A behavioral analysis of researchers from six conferences

Dec 20, 2018

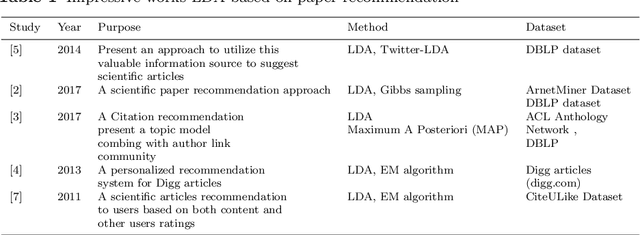

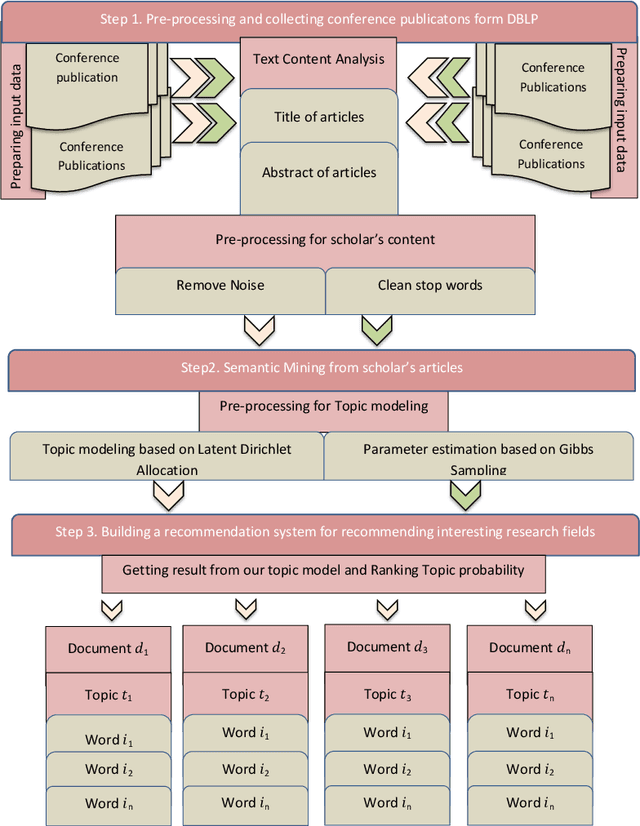

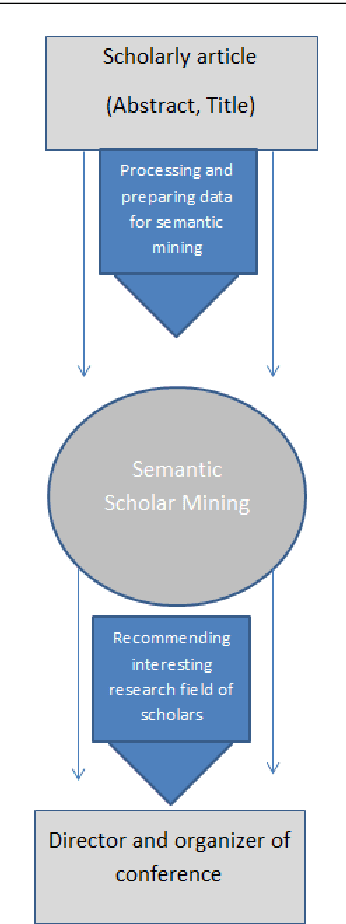

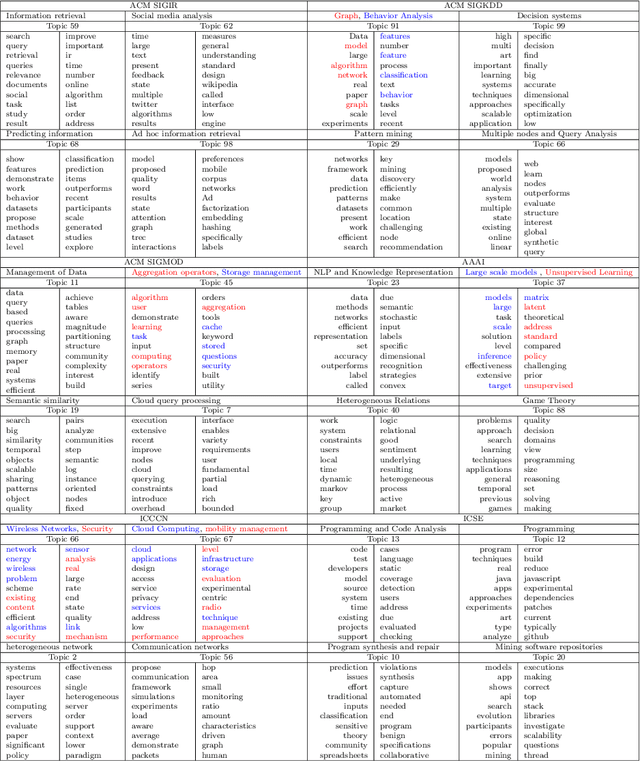

Recommendation systems play an important role in helping online users. They are of practical use across many Internet portals, such as social networks and library websites. There are several approaches to implementing recommendation systems; Latent Dirichlet Allocation (LDA) is one of the popular techniques in topic modeling. Recently, researchers have proposed many approaches based on recommendation systems and LDA. Given the importance of the subject, in this paper we discover trends in the topics and find relationships between LDA topics and Scholar-Context-documents. Specifically, we apply probabilistic topic modeling based on Gibbs sampling algorithms for semantic mining of publications from six computer science conferences in the DBLP dataset. According to our experimental results, our semantic framework can help organizations better organize these conferences and cover future research topics.
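To make the phrase "topic modeling based on Gibbs sampling" concrete, the following is a minimal sketch of a collapsed Gibbs sampler for LDA in plain Python. It is not the authors' implementation: the function name `lda_gibbs`, the hyperparameter defaults, and the toy documents are all assumptions chosen for illustration.

```python
import random

def lda_gibbs(docs, num_topics, alpha=0.1, beta=0.01, iterations=200, seed=0):
    """Collapsed Gibbs sampling for LDA on tokenised documents (illustrative sketch)."""
    rng = random.Random(seed)
    vocab = sorted({w for doc in docs for w in doc})
    word_id = {w: i for i, w in enumerate(vocab)}
    vocab_size = len(vocab)

    doc_topic = [[0] * num_topics for _ in docs]                 # n(d, k)
    topic_word = [[0] * vocab_size for _ in range(num_topics)]   # n(k, w)
    topic_total = [0] * num_topics                               # n(k)
    assignments = []

    # Start from a random topic assignment for every token.
    for d, doc in enumerate(docs):
        z_d = []
        for w in doc:
            k = rng.randrange(num_topics)
            z_d.append(k)
            doc_topic[d][k] += 1
            topic_word[k][word_id[w]] += 1
            topic_total[k] += 1
        assignments.append(z_d)

    for _ in range(iterations):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                v = word_id[w]
                k_old = assignments[d][i]
                # Remove the current token from all counts.
                doc_topic[d][k_old] -= 1
                topic_word[k_old][v] -= 1
                topic_total[k_old] -= 1
                # Unnormalised conditional p(z = k | all other assignments).
                weights = [
                    (doc_topic[d][k] + alpha)
                    * (topic_word[k][v] + beta)
                    / (topic_total[k] + vocab_size * beta)
                    for k in range(num_topics)
                ]
                k_new = rng.choices(range(num_topics), weights=weights)[0]
                # Add the token back under its newly sampled topic.
                assignments[d][i] = k_new
                doc_topic[d][k_new] += 1
                topic_word[k_new][v] += 1
                topic_total[k_new] += 1

    # Smoothed per-document topic proportions.
    theta = [
        [(count + alpha) / (len(doc) + num_topics * alpha) for count in row]
        for row, doc in zip(doc_topic, docs)
    ]
    return theta, topic_word, vocab

# Hypothetical toy corpus, not taken from the DBLP data.
docs = [
    "neural network training deep learning".split(),
    "deep learning network image".split(),
    "database query index transaction".split(),
    "query index database storage".split(),
]
theta, topic_word, vocab = lda_gibbs(docs, num_topics=2)
for d, row in enumerate(theta):
    print(d, [round(p, 2) for p in row])
```

Each row of `theta` is a document's estimated topic mixture; a recommender could, for example, compare these rows to find documents with similar topic profiles, although the abstract does not say how its framework uses them.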

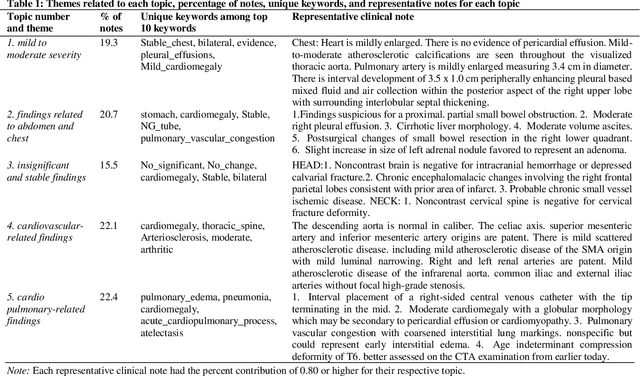

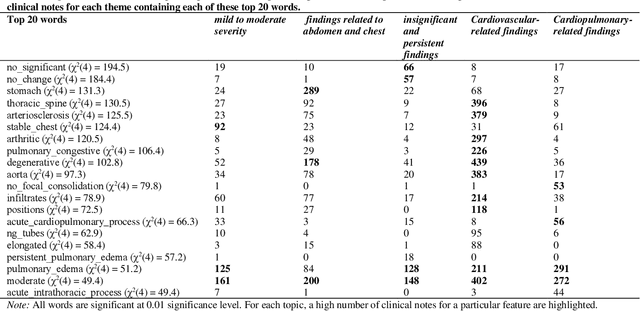

Leveraging Natural Learning Processing to Uncover Themes in Clinical Notes of Patients Admitted for Heart Failure

Apr 14, 2022

Heart failure occurs when the heart is not able to pump blood and oxygen to support other organs in the body as it should. Treatments include medications and sometimes hospitalization. Patients with heart failure can have both cardiovascular and non-cardiovascular comorbidities. Clinical notes of patients with heart failure can be analyzed to gain insight into the topics discussed in these notes and the major comorbidities in these patients. In this regard, we apply machine learning techniques, such as topic modeling, to identify the major themes found in the clinical notes specific to the procedures performed on 1,200 patients admitted for heart failure at the University of Illinois Hospital and Health Sciences System (UI Health). Topic modeling revealed five hidden themes in these clinical notes, including one related to heart disease comorbidities.

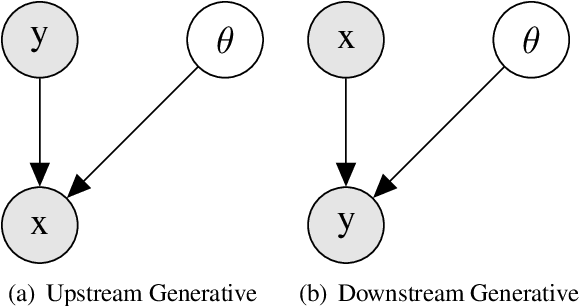

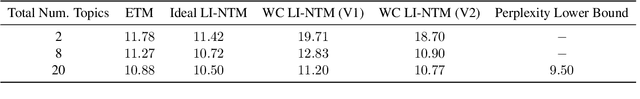

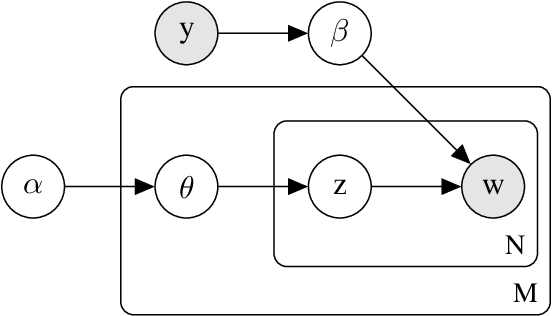

A Joint Learning Approach for Semi-supervised Neural Topic Modeling

Apr 07, 2022

Topic models are some of the most popular ways to represent textual data in an interpretable manner. Recently, advances in deep generative models, specifically auto-encoding variational Bayes (AEVB), have led to the introduction of unsupervised neural topic models, which leverage deep generative models as opposed to traditional statistics-based topic models. We extend these neural topic models by introducing the Label-Indexed Neural Topic Model (LI-NTM), which is, to the best of our knowledge, the first effective upstream semi-supervised neural topic model. We find that LI-NTM outperforms existing neural topic models in document reconstruction benchmarks, with the most notable results in low labeled data regimes and for datasets with informative labels; furthermore, our jointly learned classifier outperforms baseline classifiers in ablation studies.

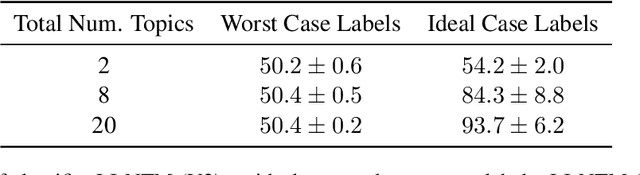

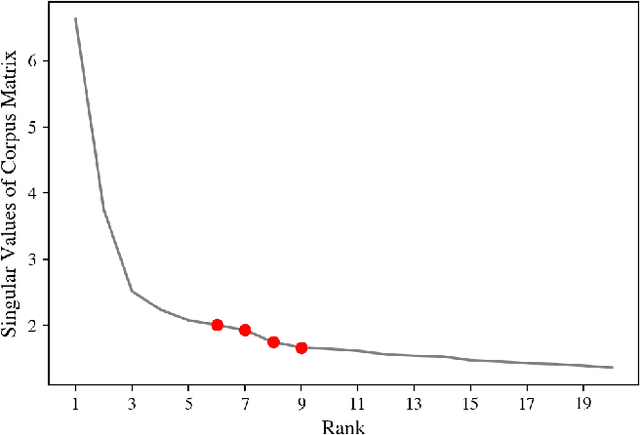

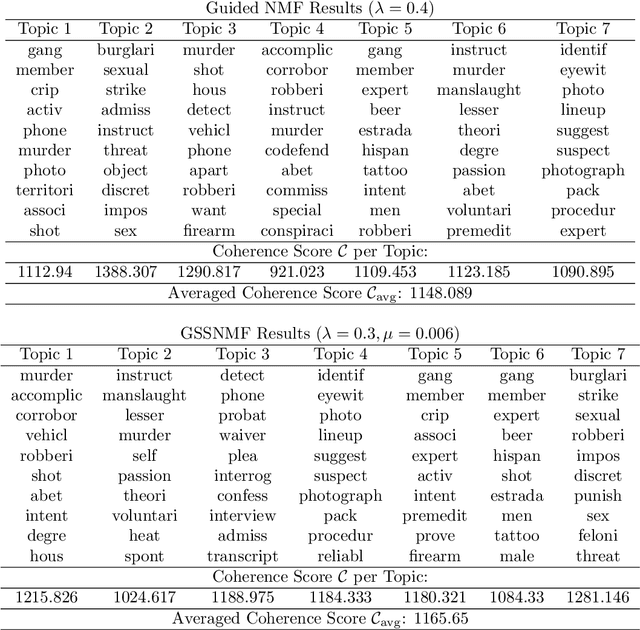

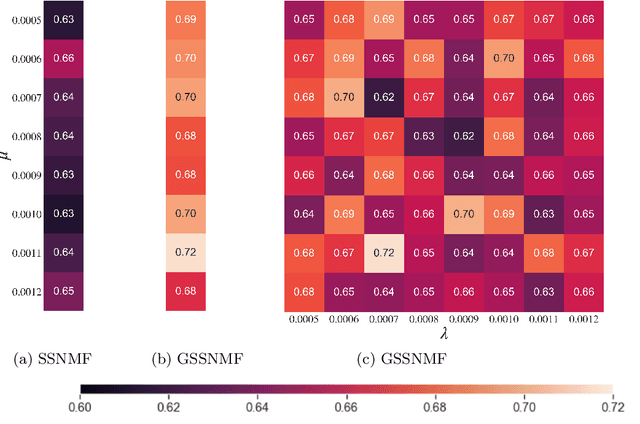

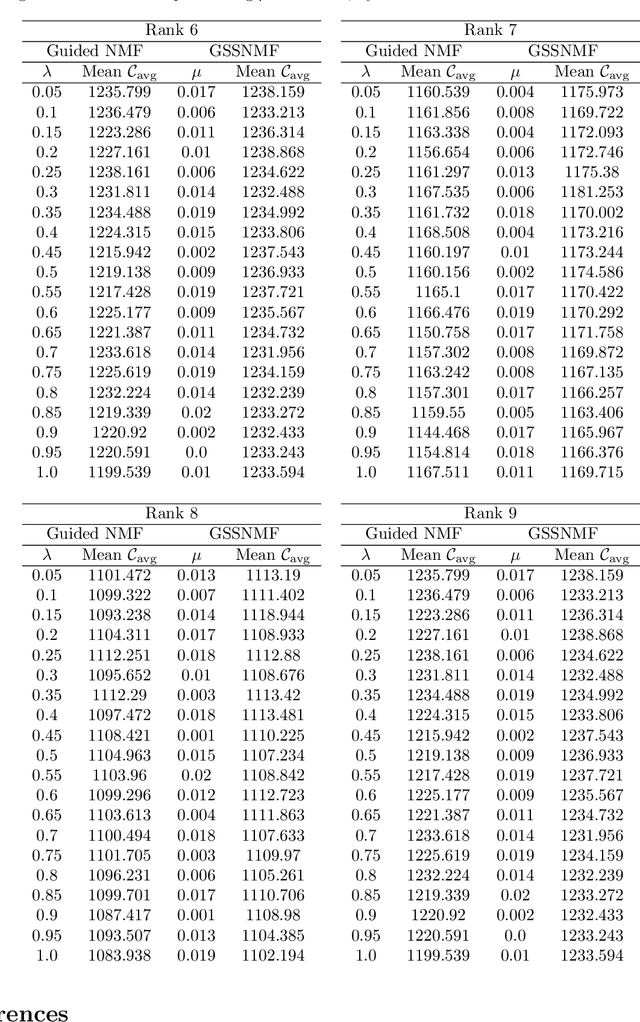

Guided Semi-Supervised Non-negative Matrix Factorization on Legal Documents

Jan 31, 2022

Classification and topic modeling are popular techniques in machine learning that extract information from large-scale datasets. By incorporating a priori information such as labels or important features, methods have been developed to perform classification and topic modeling tasks; however, most methods that can perform both do not allow for guidance of the topics or features. In this paper, we propose a method, namely Guided Semi-Supervised Non-negative Matrix Factorization (GSSNMF), that performs both classification and topic modeling by incorporating supervision from both pre-assigned document class labels and user-designed seed words. We test the performance of this method through its application to legal documents provided by the California Innocence Project, a nonprofit that works to free innocent convicted persons and reform the justice system. The results show that our proposed method improves both classification accuracy and topic coherence in comparison to past methods like Semi-Supervised Non-negative Matrix Factorization (SSNMF) and Guided Non-negative Matrix Factorization (Guided NMF).
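As background for the methods named above, here is a minimal sketch of plain, unsupervised NMF using the standard Lee-Seung multiplicative updates for the Frobenius objective. It is not GSSNMF: it uses neither class labels nor seed words. The helper names, the toy matrix, and the convention that rows are documents and columns are terms are assumptions made for illustration.

```python
import random

def matmul(A, B):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def transpose(A):
    return [list(col) for col in zip(*A)]

def frobenius_error(X, W, H):
    """Frobenius norm of X - WH."""
    WH = matmul(W, H)
    return sum((x - y) ** 2 for rx, ry in zip(X, WH) for x, y in zip(rx, ry)) ** 0.5

def nmf(X, rank, iterations=200, seed=0, eps=1e-9):
    """Factorise a non-negative matrix X (docs x terms) as W (docs x topics) @ H (topics x terms)."""
    rng = random.Random(seed)
    n, m = len(X), len(X[0])
    W = [[rng.random() + eps for _ in range(rank)] for _ in range(n)]
    H = [[rng.random() + eps for _ in range(m)] for _ in range(rank)]
    for _ in range(iterations):
        # H <- H * (W^T X) / (W^T W H), element-wise.
        Wt = transpose(W)
        num = matmul(Wt, X)
        den = matmul(matmul(Wt, W), H)
        H = [[h * a / (b + eps) for h, a, b in zip(rh, ra, rb)]
             for rh, ra, rb in zip(H, num, den)]
        # W <- W * (X H^T) / (W H H^T), element-wise.
        Ht = transpose(H)
        num = matmul(X, Ht)
        den = matmul(W, matmul(H, Ht))
        W = [[w * a / (b + eps) for w, a, b in zip(rw, ra, rb)]
             for rw, ra, rb in zip(W, num, den)]
    return W, H

# Hypothetical term-count matrix: 4 documents x 6 terms with two rough blocks.
X = [
    [3, 2, 1, 0, 0, 0],
    [2, 3, 1, 0, 0, 0],
    [0, 0, 0, 2, 3, 1],
    [0, 0, 1, 1, 2, 3],
]
W, H = nmf(X, rank=2)
print("reconstruction error:", round(frobenius_error(X, W, H), 3))
```

Each row of `H` can be read as a topic's weights over terms and each row of `W` as a document's weights over topics. Semi-supervised and guided variants such as those in the abstract add further terms to the objective to incorporate labels and seed words.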

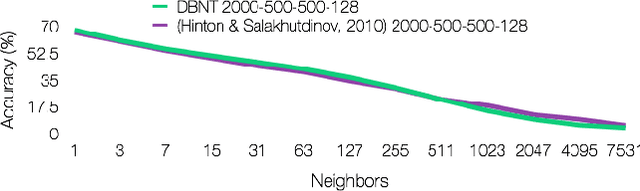

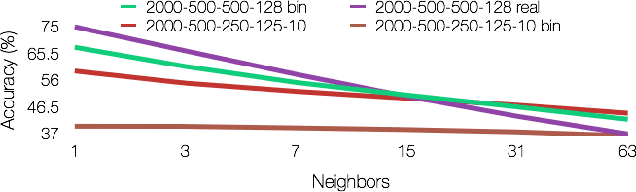

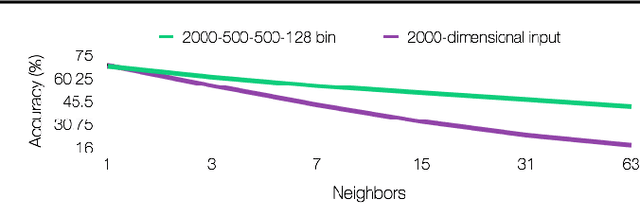

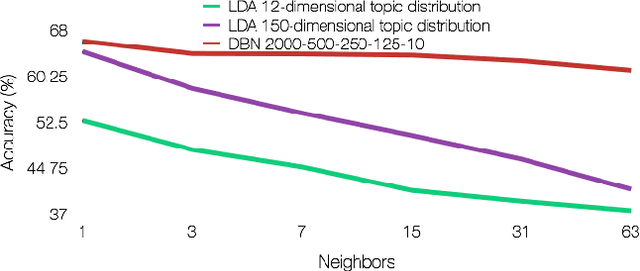

Deep Belief Nets for Topic Modeling

Jan 18, 2015

Applying traditional collaborative filtering to digital publishing is challenging because user data is very sparse due to the high volume of documents relative to the number of users. Content-based approaches, on the other hand, are attractive because textual content is often very informative. In this paper we describe large-scale content-based collaborative filtering for digital publishing. To solve the digital publishing recommender problem we compare two approaches, latent Dirichlet allocation (LDA) and deep belief nets (DBN), both of which find low-dimensional latent representations for documents. Efficient retrieval can be carried out in the latent representation. We work both on public benchmarks and on digital media content provided by Issuu, an online publishing platform. This article also comes with a newly developed deep belief nets toolbox for topic modeling, tailored towards performance evaluation of the DBN model and comparisons to the LDA model.
