"Time Series Analysis": models, code, and papers

TFAD: A Decomposition Time Series Anomaly Detection Architecture with Time-Frequency Analysis

Oct 18, 2022

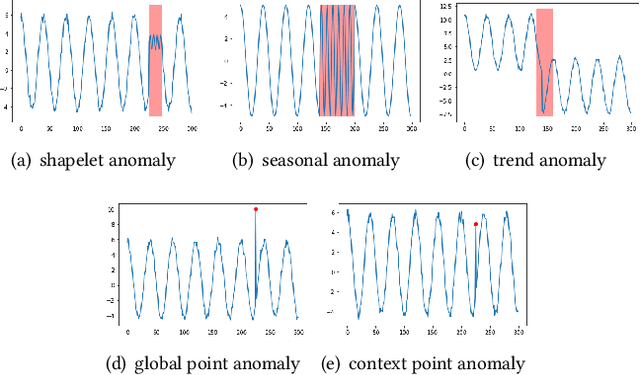

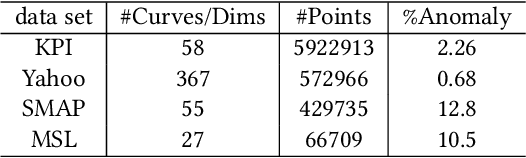

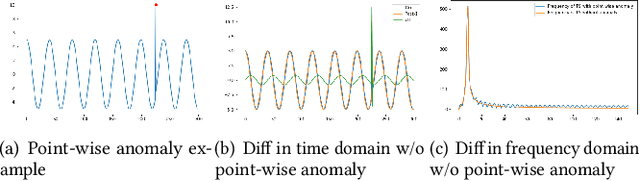

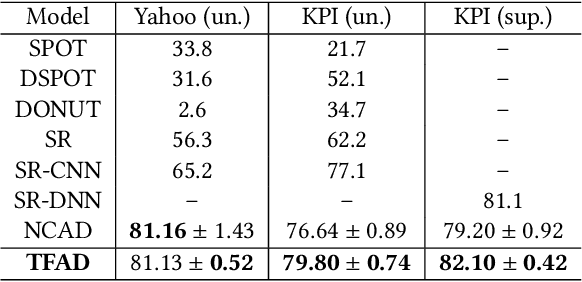

Time series anomaly detection is a challenging problem due to the complex temporal dependencies and the limited label data. Although some algorithms including both traditional and deep models have been proposed, most of them mainly focus on time-domain modeling, and do not fully utilize the information in the frequency domain of the time series data. In this paper, we propose a Time-Frequency analysis based time series Anomaly Detection model, or TFAD for short, to exploit both time and frequency domains for performance improvement. Besides, we incorporate time series decomposition and data augmentation mechanisms in the designed time-frequency architecture to further boost the abilities of performance and interpretability. Empirical studies on widely used benchmark datasets show that our approach obtains state-of-the-art performance in univariate and multivariate time series anomaly detection tasks. Code is provided at https://github.com/DAMO-DI-ML/CIKM22-TFAD.
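As a rough illustration of what "exploiting both time and frequency domains" can mean for a single window, the sketch below pairs a mean-centered window with the magnitudes of its discrete Fourier transform. It is not the TFAD architecture; the function names `dft_magnitudes` and `time_frequency_features` and the toy sine window are assumptions made for this example only.

```python
import cmath
import math
from typing import Dict, List, Sequence


def dft_magnitudes(window: Sequence[float]) -> List[float]:
    """Magnitudes of the discrete Fourier transform for frequency bins 0..n//2."""
    n = len(window)
    return [
        abs(sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(window)))
        for k in range(n // 2 + 1)
    ]


def time_frequency_features(window: Sequence[float]) -> Dict[str, List[float]]:
    """Return a time-domain view (centered values) and a frequency-domain view."""
    mean = sum(window) / len(window)
    centered = [x - mean for x in window]
    return {"time": centered, "frequency": dft_magnitudes(centered)}


# Toy window (an assumption): a sine wave with 2 full cycles over 16 samples.
window = [math.sin(2 * math.pi * 2 * t / 16) for t in range(16)]
features = time_frequency_features(window)

# Index of the frequency bin that carries the most energy.
dominant_bin = max(range(len(features["frequency"])), key=features["frequency"].__getitem__)
```

A periodic pattern that is spread over many samples in the time view concentrates in one bin of the frequency view, which is the kind of complementary information a time-frequency model can draw on.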

Twitter's Agenda-Setting Role: A Study of Twitter Strategy for Political Diversion

Dec 16, 2022

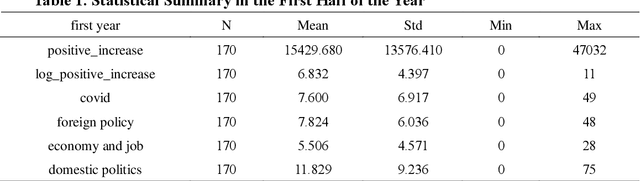

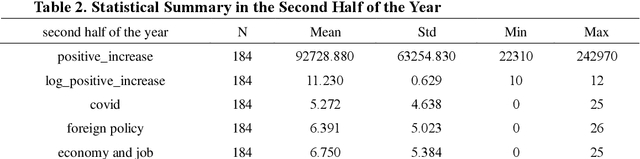

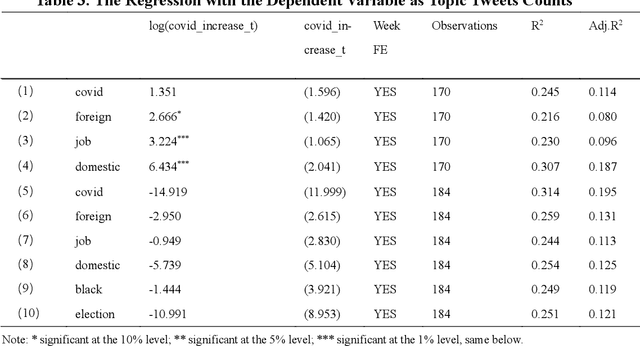

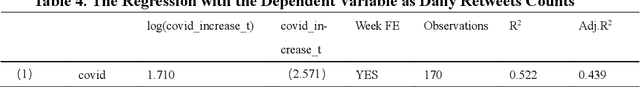

This study verifies the effectiveness of Donald Trump's Twitter campaign in guiding agenda-setting and deflecting political risk, and examines Trump's Twitter communication strategy and the communication effects of his tweet content during the Covid-19 pandemic. We collected all tweets posted by Trump on the Twitter platform from January 1, 2020 to December 31, 2020. We used Ordinary Least Squares (OLS) regression analysis with a fixed effects model to analyze the existence of the Twitter strategy. The correlation between the number of confirmed daily Covid-19 diagnoses and the number of particular thematic tweets was investigated using time series analysis. Empirical analysis revealed that the Twitter strategy was used to divert public attention from negative Covid-19 reports during the epidemic, and that it had a powerful political communication effect on Twitter. However, the findings suggest that Trump did not use false claims to divert political risk and shape public opinion.

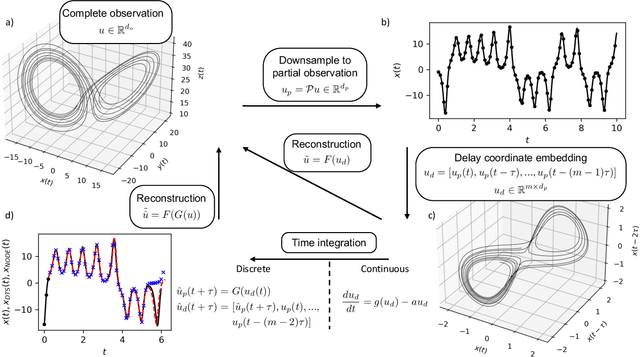

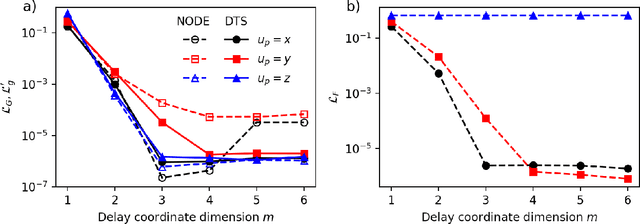

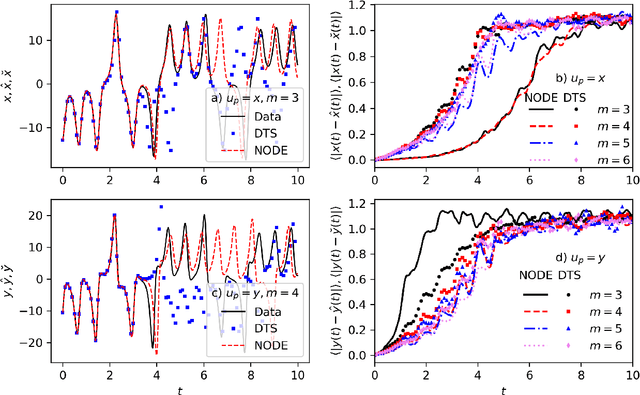

Deep learning delay coordinate dynamics for chaotic attractors from partial observable data

Nov 20, 2022

A common problem in time series analysis is to predict dynamics with only scalar or partial observations of the underlying dynamical system. For data on a smooth compact manifold, Takens' theorem proves a time delayed embedding of the partial state is diffeomorphic to the attractor, although for chaotic and highly nonlinear systems learning these delay coordinate mappings is challenging. We utilize deep artificial neural networks (ANNs) to learn discrete time maps and continuous time flows of the partial state. Given training data for the full state, we also learn a reconstruction map. Thus, predictions of a time series can be made from the current state and several previous observations with embedding parameters determined from time series analysis. The state space for time evolution is of comparable dimension to reduced order manifold models. These are advantages over recurrent neural network models, which require a high dimensional internal state or additional memory terms and hyperparameters. We demonstrate the capacity of deep ANNs to predict chaotic behavior from a scalar observation on a manifold of dimension three via the Lorenz system. We also consider multivariate observations on the Kuramoto-Sivashinsky equation, where the observation dimension required for accurately reproducing dynamics increases with the manifold dimension via the spatial extent of the system.
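The delay coordinate construction behind this abstract can be sketched in a few lines: each state vector is built from the current observation and several earlier ones. This is only the embedding step, not the neural network models from the paper; the function name `delay_embed`, the parameter names `dim` and `tau`, and the toy series are assumptions for illustration.

```python
from typing import List, Sequence


def delay_embed(series: Sequence[float], dim: int, tau: int) -> List[List[float]]:
    """Return delay vectors [x[t], x[t - tau], ..., x[t - (dim - 1) * tau]]."""
    if dim < 1 or tau < 1:
        raise ValueError("dim and tau must be positive integers")
    start = (dim - 1) * tau  # first index that has a full history behind it
    return [
        [series[t - k * tau] for k in range(dim)]
        for t in range(start, len(series))
    ]


# Toy scalar observation (an assumption); in practice this would be one
# measured coordinate of the dynamical system.
observed = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
vectors = delay_embed(observed, dim=3, tau=2)
```

A model that maps each delay vector to the next one then plays the role of the discrete time map described above, with `dim` and `tau` chosen by standard embedding diagnostics.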

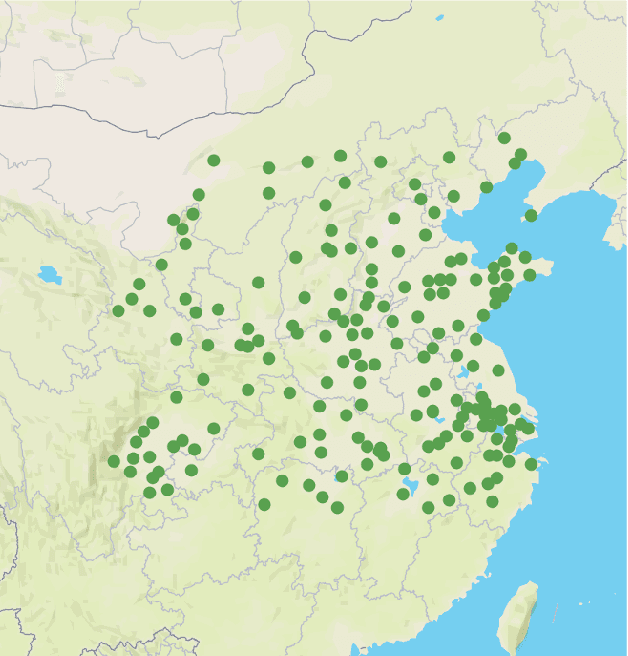

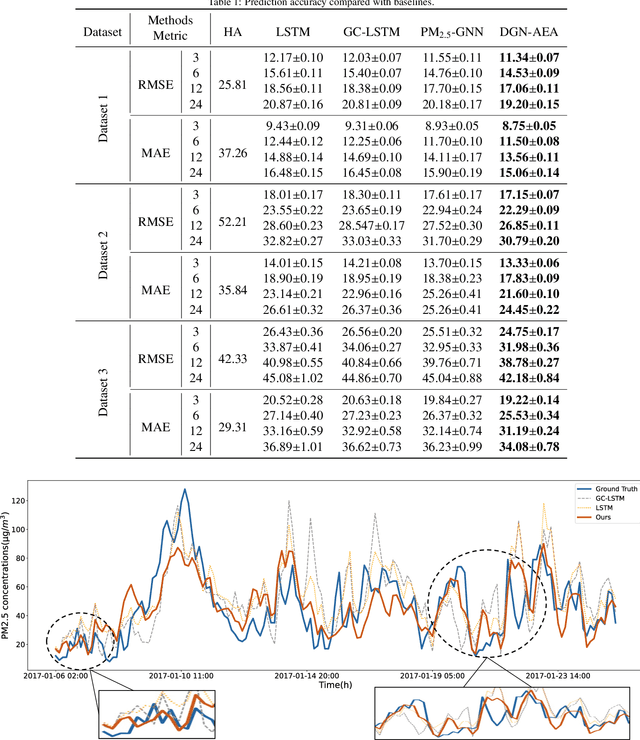

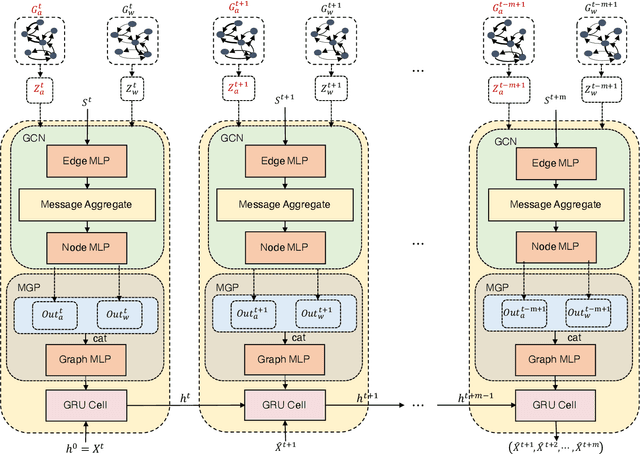

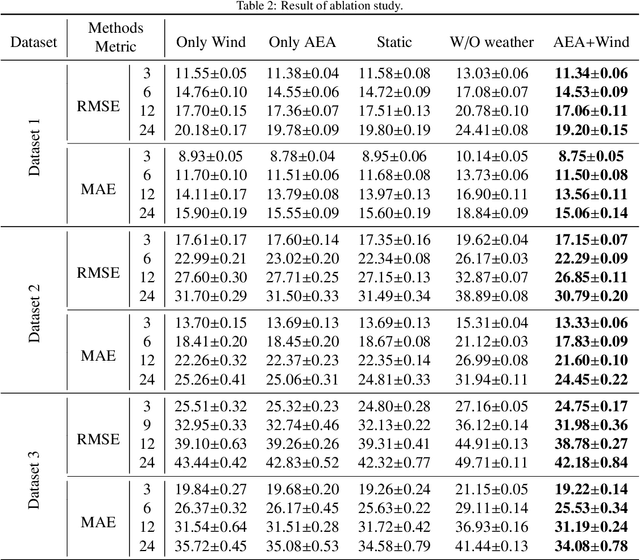

Dynamic Graph Neural Network with Adaptive Edge Attributes for Air Quality Predictions

Feb 20, 2023

Air quality prediction is a typical spatio-temporal modeling problem, which usually uses different components to handle spatial and temporal dependencies in complex systems separately. Previous models based on time series analysis and Recurrent Neural Network (RNN) methods have only modeled time series while ignoring spatial information. Previous GCN-based methods usually require the spatial correlation graph structure of the observation sites to be provided in advance. The correlations among these sites and their strengths are usually calculated using prior information. However, due to the limitations of human cognition, limited prior information cannot reflect the real station-related structure or bring more effective information for accurate prediction. To this end, we propose a novel Dynamic Graph Neural Network with Adaptive Edge Attributes (DGN-AEA) on the message passing network, which generates the adaptive bidirected dynamic graph by learning the edge attributes as model parameters. Unlike methods that use prior information to establish edges, our method can obtain adaptive edge information through end-to-end training without any prior information, which reduces the complexity of the problem. Besides, the hidden structural information between the stations can be obtained as a model by-product, which can help with subsequent decision-making analyses. Experimental results show that our model achieves state-of-the-art performance compared with other baselines.

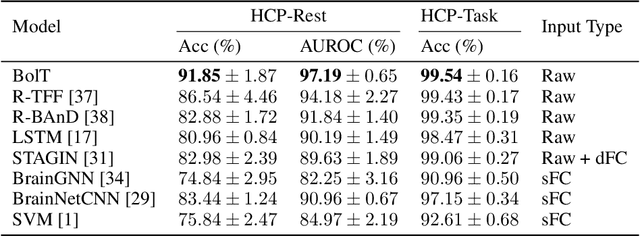

BolT: Fused Window Transformers for fMRI Time Series Analysis

May 23, 2022

Functional magnetic resonance imaging (fMRI) enables examination of inter-regional interactions in the brain via functional connectivity (FC) analyses that measure the synchrony between the temporal activations of separate regions. Given their exceptional sensitivity, deep-learning methods have received growing interest for FC analyses of high-dimensional fMRI data. In this domain, models that operate directly on raw time series as opposed to pre-computed FC features have the potential benefit of leveraging the full scale of information present in fMRI data. However, previous models are based on architectures suboptimal for temporal integration of representations across multiple time scales. Here, we present BolT, blood-oxygen-level-dependent transformer, for analyzing multi-variate fMRI time series. BolT leverages a cascade of transformer encoders equipped with a novel fused window attention mechanism. Transformer encoding is performed on temporally-overlapped time windows within the fMRI time series to capture short time-scale representations. To integrate information across windows, cross-window attention is computed between base tokens in each time window and fringe tokens from neighboring time windows. To transition from local to global representations, the extent of window overlap and thereby number of fringe tokens is progressively increased across the cascade. Finally, a novel cross-window regularization is enforced to align the high-level representations of global $CLS$ features across time windows. Comprehensive experiments on public fMRI datasets clearly illustrate the superior performance of BolT against state-of-the-art methods. Posthoc explanatory analyses to identify landmark time points and regions that contribute most significantly to model decisions corroborate prominent neuroscientific findings from recent fMRI studies.

Deep recurrent spiking neural networks capture both static and dynamic representations of the visual cortex under movie stimuli

Jun 02, 2023

In the real world, visual stimuli received by the biological visual system are predominantly dynamic rather than static. A better understanding of how the visual cortex represents movie stimuli could provide deeper insight into the information processing mechanisms of the visual system. Although some progress has been made in modeling neural responses to natural movies with deep neural networks, the visual representations of static and dynamic information under such time-series visual stimuli remain to be further explored. In this work, considering abundant recurrent connections in the mouse visual system, we design a recurrent module based on the hierarchy of the mouse cortex and add it into Deep Spiking Neural Networks, which have been demonstrated to be a more compelling computational model for the visual cortex. Using Time-Series Representational Similarity Analysis, we measure the representational similarity between networks and mouse cortical regions under natural movie stimuli. Subsequently, we conduct a comparison of the representational similarity across recurrent/feedforward networks and image/video training tasks. Trained on the video action recognition task, recurrent SNN achieves the highest representational similarity and significantly outperforms feedforward SNN trained on the same task by 15% and the recurrent SNN trained on the image classification task by 8%. We investigate how static and dynamic representations of SNNs influence the similarity, as a way to explain the importance of these two forms of representations in biological neural coding. Taken together, our work is the first to apply deep recurrent SNNs to model the mouse visual cortex under movie stimuli and we establish that these networks are competent to capture both static and dynamic representations and make contributions to understanding the movie information processing mechanisms of the visual cortex.

Geometric-Based Pruning Rules For Change Point Detection in Multiple Independent Time Series

Jun 15, 2023

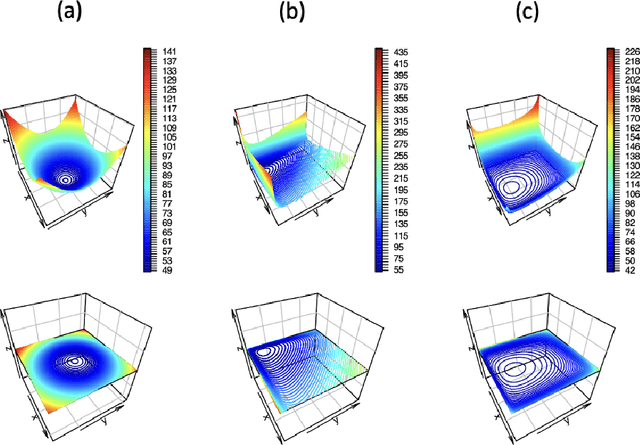

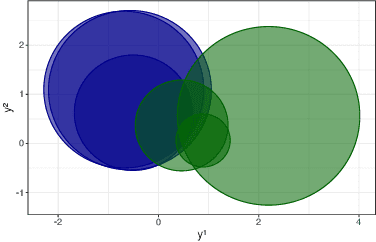

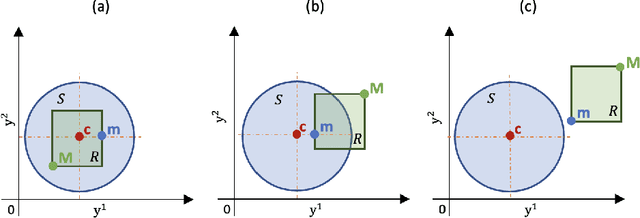

We consider the problem of detecting multiple changes in multiple independent time series. The search for the best segmentation can be expressed as a minimization problem over a given cost function. We focus on dynamic programming algorithms that solve this problem exactly. When the number of changes is proportional to data length, an inequality-based pruning rule encoded in the PELT algorithm leads to a linear time complexity. Another type of pruning, called functional pruning, gives a close-to-linear time complexity whatever the number of changes, but only for the analysis of univariate time series. We propose a few extensions of functional pruning for multiple independent time series based on the use of simple geometric shapes (balls and hyperrectangles). We focus on the Gaussian case, but some of our rules can be easily extended to the exponential family. In a simulation study we compare the computational efficiency of different geometric-based pruning rules. We show that for small dimensions (2, 3, 4) some of them ran significantly faster than inequality-based approaches in particular when the underlying number of changes is small compared to the data length.
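For context, the inequality-based pruning that the abstract attributes to PELT can be sketched for a single series with a Gaussian change-in-mean cost. This is not the geometric functional pruning proposed in the paper; it is a minimal univariate sketch, and the function name `pelt_mean_shift`, the `penalty` parameter, and the toy signal are assumptions.

```python
from typing import List, Sequence


def pelt_mean_shift(y: Sequence[float], penalty: float) -> List[int]:
    """Penalized least-squares segmentation with PELT-style pruning.

    Returns sorted change points; each value is the index where a new segment starts.
    """
    n = len(y)
    # Prefix sums of values and squared values give O(1) segment costs.
    s1 = [0.0] * (n + 1)
    s2 = [0.0] * (n + 1)
    for i, v in enumerate(y):
        s1[i + 1] = s1[i] + v
        s2[i + 1] = s2[i] + v * v

    def cost(a: int, b: int) -> float:
        """Sum of squared deviations from the segment mean on y[a:b]."""
        total = s1[b] - s1[a]
        return (s2[b] - s2[a]) - total * total / (b - a)

    best = [0.0] * (n + 1)   # best[t]: optimal penalized cost of y[:t]
    best[0] = -penalty       # so that the first segment pays one penalty in total
    last = [0] * (n + 1)     # last[t]: start of the final segment in that optimum
    candidates = [0]
    for t in range(1, n + 1):
        values = [(best[s] + cost(s, t) + penalty, s) for s in candidates]
        best[t], last[t] = min(values)
        # Inequality-based pruning: keep s only if best[s] + cost(s, t) <= best[t].
        candidates = [s for v, s in values if v - penalty <= best[t]]
        candidates.append(t)

    # Backtrack through the stored segment starts.
    changes = []
    t = n
    while last[t] > 0:
        changes.append(last[t])
        t = last[t]
    return sorted(changes)


# Toy signal (an assumption): the mean jumps from 0 to 5 at index 10.
signal = [0.0] * 10 + [5.0] * 10
changes = pelt_mean_shift(signal, penalty=1.0)
```

The pruning step discards candidate segment starts that can never become optimal again, which is what yields the linear running time when changes are frequent.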

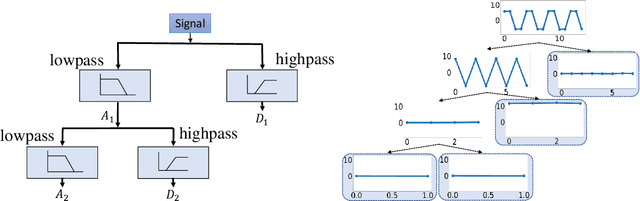

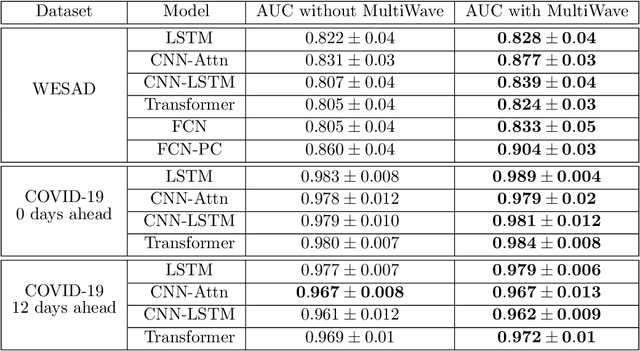

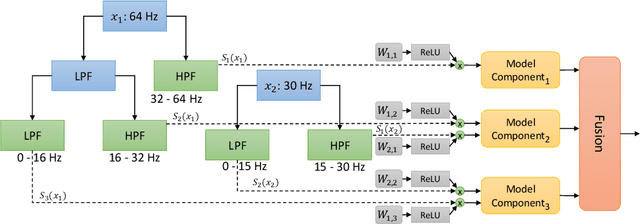

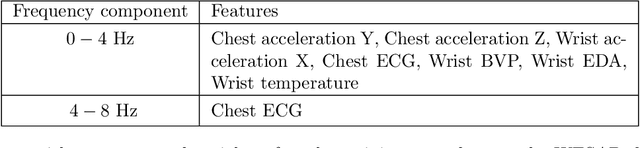

MultiWave: Multiresolution Deep Architectures through Wavelet Decomposition for Multivariate Time Series Prediction

Jun 16, 2023

The analysis of multivariate time series data is challenging due to the various frequencies of signal changes that can occur over both short and long terms. Furthermore, standard deep learning models are often unsuitable for such datasets, as signals are typically sampled at different rates. To address these issues, we introduce MultiWave, a novel framework that enhances deep learning time series models by incorporating components that operate at the intrinsic frequencies of signals. MultiWave uses wavelets to decompose each signal into subsignals of varying frequencies and groups them into frequency bands. Each frequency band is handled by a different component of our model. A gating mechanism combines the output of the components to produce sparse models that use only specific signals at specific frequencies. Our experiments demonstrate that MultiWave accurately identifies informative frequency bands and improves the performance of various deep learning models, including LSTM, Transformer, and CNN-based models, for a wide range of applications. It attains top performance in stress and affect detection from wearables. It also increases the AUC of the best-performing model by 5% for in-hospital COVID-19 mortality prediction from patient blood samples and for human activity recognition from accelerometer and gyroscope data. We show that MultiWave consistently identifies critical features and their frequency components, thus providing valuable insights into the applications studied.
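The wavelet step described above, splitting a signal into subsignals at different frequencies, can be illustrated with a plain orthonormal Haar decomposition. The abstract does not state which wavelet family MultiWave uses, so the Haar choice, the function names `haar_step` and `haar_decompose`, and the toy signal are assumptions; the gating and deep model components are not shown.

```python
import math
from typing import List, Sequence, Tuple


def haar_step(signal: Sequence[float]) -> Tuple[List[float], List[float]]:
    """One Haar level: scaled pairwise sums (low band) and differences (high band)."""
    scale = math.sqrt(2.0)
    pairs = range(0, len(signal) - 1, 2)
    approx = [(signal[i] + signal[i + 1]) / scale for i in pairs]
    detail = [(signal[i] - signal[i + 1]) / scale for i in pairs]
    return approx, detail


def haar_decompose(signal: Sequence[float], levels: int) -> List[List[float]]:
    """Return [detail_1, ..., detail_L, approx_L], highest frequency band first."""
    bands: List[List[float]] = []
    current = list(signal)
    for _ in range(levels):
        if len(current) < 2:
            break  # nothing left to split
        current, detail = haar_step(current)
        bands.append(detail)
    bands.append(current)
    return bands


# Toy signal (an assumption) whose length is a power of two.
signal = [4.0, 2.0, 5.0, 5.0, 1.0, 3.0, 0.0, 2.0]
bands = haar_decompose(signal, levels=2)
```

Each entry of `bands` covers a different frequency range, so a separate model component could be attached to each one, in the spirit of the per-band components the abstract describes.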

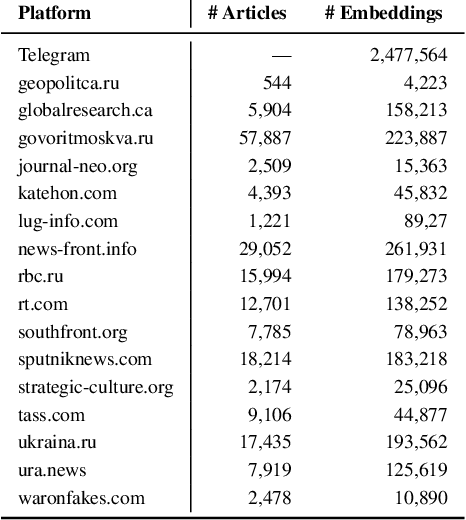

Partial Mobilization: Tracking Multilingual Information Flows Amongst Russian Media Outlets and Telegram

Jan 25, 2023

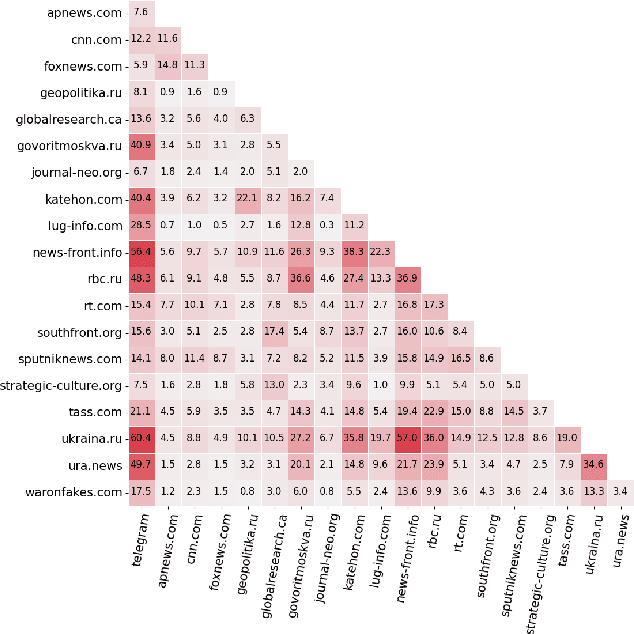

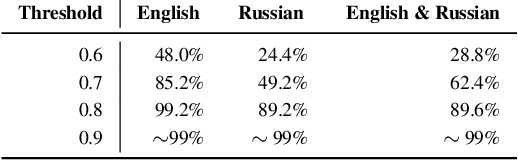

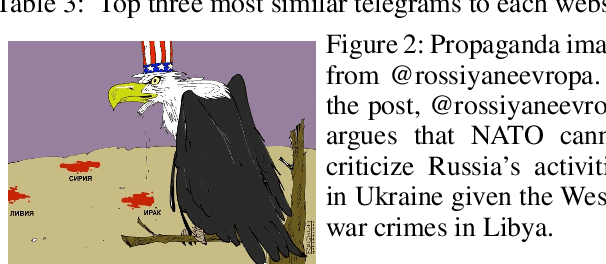

In response to disinformation and propaganda from Russian online media following the Russian invasion of Ukraine, Russian outlets including Russia Today and Sputnik News were banned throughout Europe. Many of these Russian outlets, in order to reach their audiences, began to heavily promote their content on messaging services like Telegram. In this work, to understand this phenomenon, we study how 16 Russian media outlets have interacted with and utilized 732 Telegram channels throughout 2022. To do this, we utilize a multilingual version of the foundational model MPNet to embed articles and Telegram messages in a shared embedding space and semantically compare content. Leveraging a parallelized version of DP-Means clustering, we perform paragraph-level topic/narrative extraction and time-series analysis with Hawkes Processes. With this approach, across our websites, we find between 2.3% (ura.news) and 26.7% (ukraina.ru) of their content originated/resulted from activity on Telegram. Finally, tracking the spread of individual narratives, we measure the rate at which these websites and channels disseminate content within the Russian media ecosystem.

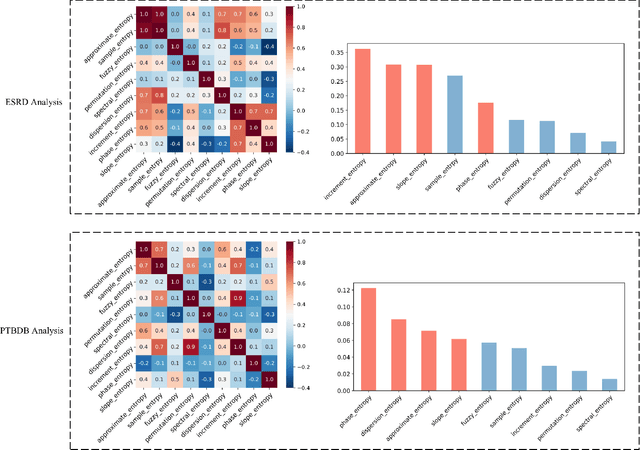

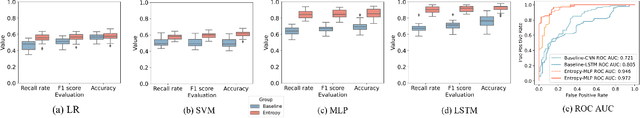

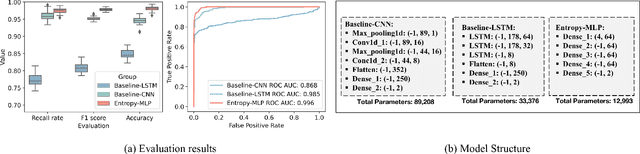

Information Theory Inspired Pattern Analysis for Time-series Data

Feb 22, 2023

Current methods for pattern analysis in time series mainly rely on statistical features or probabilistic learning and inference methods to identify patterns and trends in the data. Such methods do not generalize well when applied to multivariate, multi-source, state-varying, and noisy time-series data. To address these issues, we propose a highly generalizable method that uses information theory-based features to identify and learn from patterns in multivariate time-series data. To demonstrate the proposed approach, we analyze pattern changes in human activity data. For applications with stochastic state transitions, features are developed based on Shannon's entropy of Markov chains, entropy rates of Markov chains, entropy production of Markov chains, and von Neumann entropy of Markov chains. For applications where state modeling is not applicable, we utilize five entropy variants, including approximate entropy, increment entropy, dispersion entropy, phase entropy, and slope entropy. The results show the proposed information theory-based features improve the recall rate, F1 score, and accuracy on average by up to 23.01% compared with the baseline models, while using a simpler model structure that reduces the number of model parameters by a factor of 18.75 on average.
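One of the features named above, the entropy rate of a Markov chain, can be estimated from an observed state sequence as sketched below. The estimator uses empirical transition frequencies and empirical state occupancy in place of the true stationary distribution, which is an assumption of this sketch rather than something the abstract specifies; the function names and toy sequences are likewise illustrative.

```python
import math
from collections import Counter, defaultdict
from typing import Dict, Hashable, Sequence


def transition_probabilities(states: Sequence[Hashable]) -> Dict[Hashable, Dict[Hashable, float]]:
    """Estimate first-order transition probabilities from consecutive state pairs."""
    counts: Dict[Hashable, Counter] = defaultdict(Counter)
    for a, b in zip(states, states[1:]):
        counts[a][b] += 1
    return {
        a: {b: c / sum(row.values()) for b, c in row.items()}
        for a, row in counts.items()
    }


def entropy_rate(states: Sequence[Hashable]) -> float:
    """Entropy rate in bits per step: -sum_i w_i * sum_j P_ij * log2(P_ij)."""
    probs = transition_probabilities(states)
    n_transitions = len(states) - 1
    visits = Counter(states[:-1])  # how often each state is a transition source
    rate = 0.0
    for a, row in probs.items():
        weight = visits[a] / n_transitions  # empirical occupancy of state a
        rate -= weight * sum(p * math.log2(p) for p in row.values())
    return rate


# Toy sequences (assumptions): one fully predictable, one with coin-flip transitions.
predictable = list("ABABABAB")
noisy = [0, 0, 1, 1, 0, 0, 1, 1, 0]
rate_predictable = entropy_rate(predictable)
rate_noisy = entropy_rate(noisy)
```

A deterministic alternation gives an entropy rate of zero, while a chain whose next state is equally likely to stay or switch gives one bit per step, so the feature separates regular from irregular activity patterns.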
