"Information Extraction": models, code, and papers

Perceptual Musical Features for Interpretable Audio Tagging

Jan 04, 2024

In the age of music streaming platforms, the task of automatically tagging music audio has garnered significant attention, driving researchers to devise methods aimed at enhancing performance metrics on standard datasets. Most recent approaches rely on deep neural networks, which, despite their impressive performance, possess opacity, making it challenging to elucidate their output for a given input. While the issue of interpretability has been emphasized in other fields like medicine, it has not received attention in music-related tasks. In this study, we explored the relevance of interpretability in the context of automatic music tagging. We constructed a workflow that incorporates three different information extraction techniques: a) leveraging symbolic knowledge, b) utilizing auxiliary deep neural networks, and c) employing signal processing to extract perceptual features from audio files. These features were subsequently used to train an interpretable machine-learning model for tag prediction. We conducted experiments on two datasets, namely the MTG-Jamendo dataset and the GTZAN dataset. Our method surpassed the performance of baseline models in both tasks and, in certain instances, demonstrated competitiveness with the current state-of-the-art. We conclude that there are use cases where the deterioration in performance is outweighed by the value of interpretability.

Fine-tuning and Utilization Methods of Domain-specific LLMs

Jan 01, 2024

Recent releases of pre-trained Large Language Models (LLMs) have gained considerable traction, yet research on fine-tuning and employing domain-specific LLMs remains scarce. This study investigates approaches for fine-tuning and leveraging domain-specific LLMs, highlighting trends in LLMs, foundational models, and methods for domain-specific pre-training. Focusing on the financial sector, it details dataset selection, preprocessing, model choice, and considerations crucial for LLM fine-tuning in finance. Addressing the unique characteristics of financial data, the study explores the construction of domain-specific vocabularies and considerations for security and regulatory compliance. In the practical application of LLM fine-tuning, the study outlines the procedure and implementation for generating domain-specific LLMs in finance. Various financial cases, including stock price prediction, sentiment analysis of financial news, automated document processing, research, information extraction, and customer service enhancement, are exemplified. The study explores the potential of LLMs in the financial domain, identifies limitations, and proposes directions for improvement, contributing valuable insights for future research. Ultimately, it advances natural language processing technology in business, suggesting proactive LLM utilization in financial services across industries.

The Impact of Snippet Reliability on Misinformation in Online Health Search

Jan 28, 2024

Search result snippets are crucial in modern search engines, providing users with a quick overview of a website's content. Snippets help users determine the relevance of a document to their information needs, and in certain scenarios even enable them to satisfy those needs without visiting web documents. Hence, it is crucial for snippets to reliably represent the content of their corresponding documents. While this may be a straightforward requirement for some queries, it can become challenging in the complex domain of healthcare, and can lead to misinformation. This paper aims to examine snippets' reliability in representing their corresponding documents, specifically in the health domain. To achieve this, we conduct a series of user studies using Google's search results, where participants are asked to infer viewpoints of search results pertaining to queries about the effectiveness of a medical intervention for a medical condition, based solely on their titles and snippets. Our findings reveal that a considerable portion of Google's snippets (28%) failed to present any viewpoint on the intervention's effectiveness, and that 35% were interpreted by participants as having a different viewpoint compared to their corresponding documents. To address this issue, we propose a snippet extraction solution tailored directly to users' information needs, i.e., extracting snippets that summarize documents' viewpoints regarding the intervention and condition that appear in the query. A user study demonstrates that our information need-focused solution outperforms the mainstream query-based approach, with only 19.67% of snippets generated by our solution reported as not presenting a viewpoint and a mere 20.33% misinterpreted by participants. These results strongly suggest that an information need-focused approach can significantly improve the reliability of extracted snippets in online health search.

L3Cube-MahaSocialNER: A Social Media based Marathi NER Dataset and BERT models

Dec 30, 2023

This work introduces the L3Cube-MahaSocialNER dataset, the first and largest social media dataset specifically designed for Named Entity Recognition (NER) in the Marathi language. The dataset comprises 18,000 manually labeled sentences covering eight entity classes, addressing challenges posed by social media data, including non-standard language and informal idioms. Deep learning models, including CNN, LSTM, BiLSTM, and Transformer models, are evaluated on the individual dataset with IOB and non-IOB notations. The results demonstrate the effectiveness of these models in accurately recognizing named entities in Marathi informal text. The L3Cube-MahaSocialNER dataset offers user-centric information extraction and supports real-time applications, providing a valuable resource for public opinion analysis, news, and marketing on social media platforms. We also show that the zero-shot results of the regular NER model are poor on the social NER test set thus highlighting the need for more social NER datasets. The datasets and models are publicly available at https://github.com/l3cube-pune/MarathiNLP
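The difference between the IOB and non-IOB notations mentioned in this abstract can be illustrated with a small sketch. It is not taken from the L3Cube code base: the function `spans_to_tags`, the example sentence, and the labels `PER` and `LOC` are hypothetical, and the dataset's actual eight entity classes are not listed in the abstract.

```python
from typing import List, Tuple


def spans_to_tags(
    tokens: List[str],
    spans: List[Tuple[int, int, str]],
    iob: bool = True,
) -> List[str]:
    """Convert (start, end_exclusive, label) entity spans to per-token tags.

    With iob=True the first token of an entity gets a "B-" prefix and the
    remaining tokens an "I-" prefix; with iob=False every entity token
    simply carries the bare label. Tokens outside any span are tagged "O".
    """
    tags = ["O"] * len(tokens)
    for start, end, label in spans:
        for i in range(start, end):
            if iob:
                prefix = "B-" if i == start else "I-"
                tags[i] = prefix + label
            else:
                tags[i] = label
    return tags


# Hypothetical example sentence and labels (assumptions for illustration).
tokens = ["Sachin", "Tendulkar", "visited", "Pune"]
spans = [(0, 2, "PER"), (3, 4, "LOC")]

iob_tags = spans_to_tags(tokens, spans, iob=True)
plain_tags = spans_to_tags(tokens, spans, iob=False)
```

In the IOB variant, adjacent entities of the same class stay distinguishable because each one starts with its own `B-` tag; the non-IOB variant gives up that boundary information in exchange for a smaller label set.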

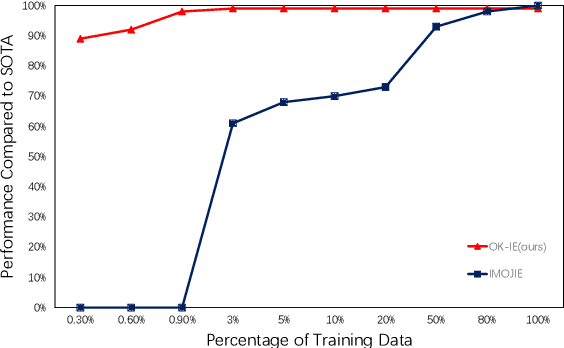

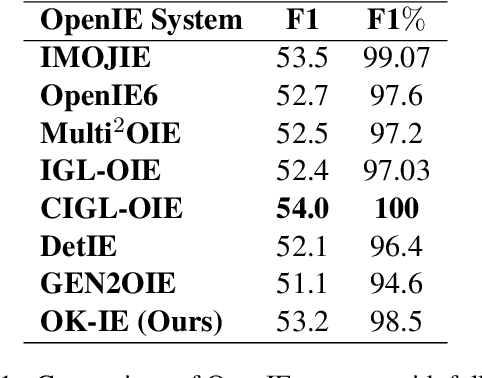

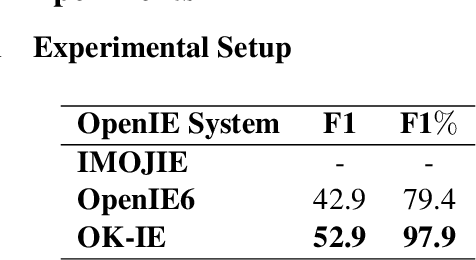

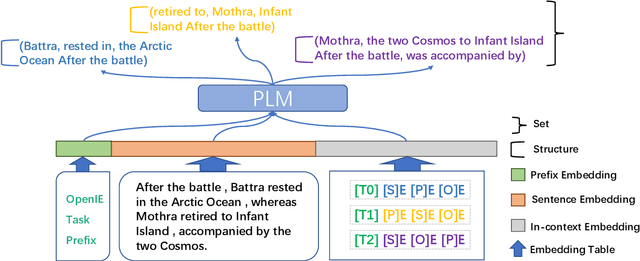

Efficient Data Learning for Open Information Extraction with Pre-trained Language Models

Oct 23, 2023

Open Information Extraction (OpenIE) is a fundamental yet challenging task in Natural Language Processing, which involves extracting all triples (subject, predicate, object) from a given sentence. While labeling-based methods have their merits, generation-based techniques offer unique advantages, such as the ability to generate tokens not present in the original sentence. However, these generation-based methods often require a significant amount of training data to learn the task form of OpenIE and substantial training time to overcome slow model convergence due to the order penalty. In this paper, we introduce a novel framework, OK-IE, that ingeniously transforms the task form of OpenIE into the pre-training task form of the T5 model, thereby reducing the need for extensive training data. Furthermore, we introduce an innovative concept of Anchor to control the sequence of model outputs, effectively eliminating the impact of order penalty on model convergence and significantly reducing training time. Experimental results indicate that, compared to previous SOTA methods, OK-IE requires only 1/100 of the training data (900 instances) and 1/120 of the training time (3 minutes) to achieve comparable results.

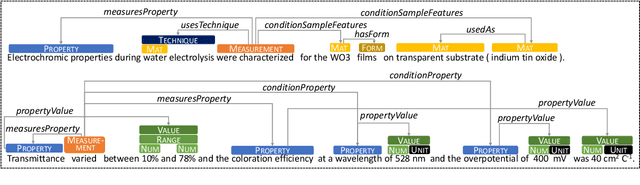

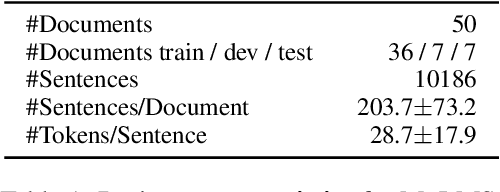

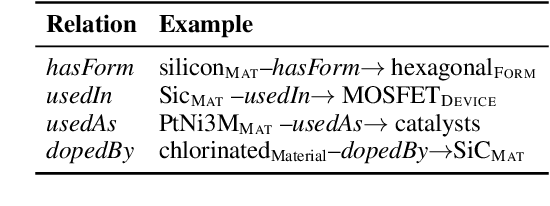

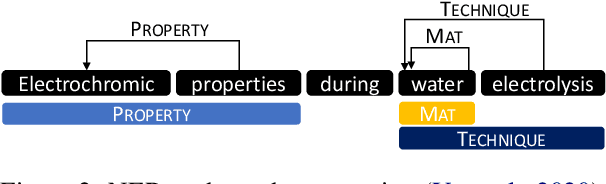

MuLMS: A Multi-Layer Annotated Text Corpus for Information Extraction in the Materials Science Domain

Oct 24, 2023

Keeping track of all relevant recent publications and experimental results for a research area is a challenging task. Prior work has demonstrated the efficacy of information extraction models in various scientific areas. Recently, several datasets have been released for the yet understudied materials science domain. However, these datasets focus on sub-problems such as parsing synthesis procedures or on sub-domains, e.g., solid oxide fuel cells. In this resource paper, we present MuLMS, a new dataset of 50 open-access articles, spanning seven sub-domains of materials science. The corpus has been annotated by domain experts with several layers ranging from named entities over relations to frame structures. We present competitive neural models for all tasks and demonstrate that multi-task training with existing related resources leads to benefits.

CIMIL-CRC: a clinically-informed multiple instance learning framework for patient-level colorectal cancer molecular subtypes classification from H&E stained images

Jan 29, 2024

Treatment approaches for colorectal cancer (CRC) are highly dependent on the molecular subtype, as immunotherapy has shown efficacy in cases with microsatellite instability (MSI) but is ineffective for the microsatellite stable (MSS) subtype. There is promising potential in utilizing deep neural networks (DNNs) to automate the differentiation of CRC subtypes by analyzing Hematoxylin and Eosin (H&E) stained whole-slide images (WSIs). Due to the extensive size of WSIs, Multiple Instance Learning (MIL) techniques are typically explored. However, existing MIL methods focus on identifying the most representative image patches for classification, which may result in the loss of critical information. Additionally, these methods often overlook clinically relevant information, like the tendency for MSI class tumors to predominantly occur on the proximal (right side) colon. We introduce 'CIMIL-CRC', a DNN framework that: 1) solves the MSI/MSS MIL problem by efficiently combining a pre-trained feature extraction model with principal component analysis (PCA) to aggregate information from all patches, and 2) integrates clinical priors, particularly the tumor location within the colon, into the model to enhance patient-level classification accuracy. We assessed our CIMIL-CRC method using the average area under the curve (AUC) from a 5-fold cross-validation experimental setup for model development on the TCGA-CRC-DX cohort, contrasting it with a baseline patch-level classification, MIL-only approach, and Clinically-informed patch-level classification approach. Our CIMIL-CRC outperformed all methods (AUROC: $0.92\pm0.002$ (95% CI 0.91-0.92), vs. $0.79\pm0.02$ (95% CI 0.76-0.82), $0.86\pm0.01$ (95% CI 0.85-0.88), and $0.87\pm0.01$ (95% CI 0.86-0.88), respectively). The improvement was statistically significant.

Detect-Order-Construct: A Tree Construction based Approach for Hierarchical Document Structure Analysis

Jan 22, 2024

Document structure analysis (aka document layout analysis) is crucial for understanding the physical layout and logical structure of documents, with applications in information retrieval, document summarization, knowledge extraction, etc. In this paper, we concentrate on Hierarchical Document Structure Analysis (HDSA) to explore hierarchical relationships within structured documents created using authoring software employing hierarchical schemas, such as LaTeX, Microsoft Word, and HTML. To comprehensively analyze hierarchical document structures, we propose a tree construction based approach that addresses multiple subtasks concurrently, including page object detection (Detect), reading order prediction of identified objects (Order), and the construction of intended hierarchical structure (Construct). We present an effective end-to-end solution based on this framework to demonstrate its performance. To assess our approach, we develop a comprehensive benchmark called Comp-HRDoc, which evaluates the above subtasks simultaneously. Our end-to-end system achieves state-of-the-art performance on two large-scale document layout analysis datasets (PubLayNet and DocLayNet), a high-quality hierarchical document structure reconstruction dataset (HRDoc), and our Comp-HRDoc benchmark. The Comp-HRDoc benchmark will be released to facilitate further research in this field.
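To make the final "Construct" idea concrete, here is a minimal sketch of turning an already ordered list of page objects into a tree. It is not the paper's method, which is learned end to end and not specified in the abstract; it assumes that detection and reading order are done and that each object comes with a known integer nesting level. The names `Node` and `build_tree` and the sample headings are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Node:
    text: str
    level: int
    children: List["Node"] = field(default_factory=list)


def build_tree(items: List[Tuple[int, str]]) -> Node:
    """Build a hierarchy from (level, text) pairs given in reading order.

    Levels must be >= 1; a smaller level means closer to the root.
    A stack holds the current path from the root to the last inserted node.
    """
    root = Node("ROOT", 0)
    stack = [root]
    for level, text in items:
        node = Node(text, level)
        # Pop until the top of the stack is a strictly shallower node,
        # which becomes the parent of the new node.
        while stack[-1].level >= level:
            stack.pop()
        stack[-1].children.append(node)
        stack.append(node)
    return root


# Hypothetical reading-order output with assumed nesting levels.
items = [
    (1, "1 Introduction"),
    (2, "1.1 Motivation"),
    (2, "1.2 Scope"),
    (1, "2 Method"),
]
tree = build_tree(items)
top_level = [child.text for child in tree.children]
intro_children = [child.text for child in tree.children[0].children]
```

The stack-based pass runs in linear time in the number of objects, which is why a correct reading order matters: the parent of each object is always found among the objects that precede it.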

I2SRM: Intra- and Inter-Sample Relationship Modeling for Multimodal Information Extraction

Oct 10, 2023

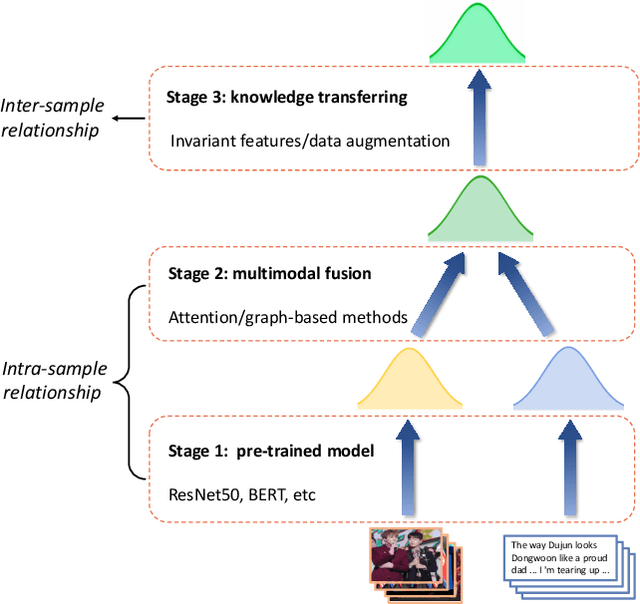

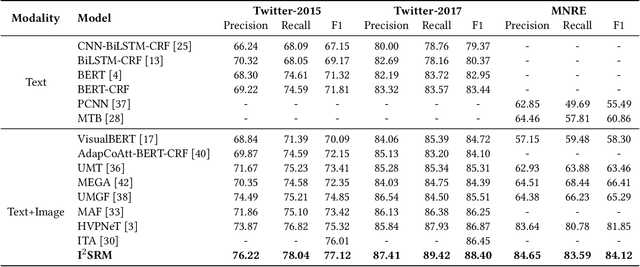

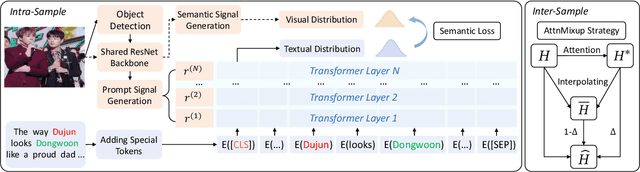

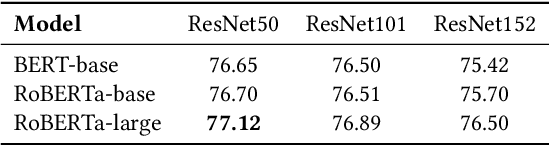

Multimodal information extraction is attracting research attention nowadays, which requires aggregating representations from different modalities. In this paper, we present the Intra- and Inter-Sample Relationship Modeling (I2SRM) method for this task, which contains two modules. Firstly, the intra-sample relationship modeling module operates on a single sample and aims to learn effective representations. Embeddings from textual and visual modalities are shifted to bridge the modality gap caused by distinct pre-trained language and image models. Secondly, the inter-sample relationship modeling module considers relationships among multiple samples and focuses on capturing the interactions. An AttnMixup strategy is proposed, which not only enables collaboration among samples but also augments data to improve generalization. We conduct extensive experiments on the multimodal named entity recognition datasets Twitter-2015 and Twitter-2017, and the multimodal relation extraction dataset MNRE. Our proposed method I2SRM achieves competitive results, 77.12% F1-score on Twitter-2015, 88.40% F1-score on Twitter-2017, and 84.12% F1-score on MNRE.
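For readers unfamiliar with mixup-style augmentation, the sketch below shows plain (vanilla) mixup, which linearly interpolates two samples and their labels. It is not the AttnMixup strategy itself, whose details are not given in the abstract; the function name `mixup`, the toy vectors, and the fixed mixing weight `lam` are assumptions for illustration.

```python
from typing import List, Tuple


def mixup(
    x_a: List[float],
    x_b: List[float],
    y_a: List[float],
    y_b: List[float],
    lam: float,
) -> Tuple[List[float], List[float]]:
    """Vanilla mixup: convex combination of two samples and their labels.

    lam is the weight of sample A and must lie in [0, 1]; in practice it is
    usually sampled from a Beta distribution, here it is passed explicitly.
    """
    if not 0.0 <= lam <= 1.0:
        raise ValueError("lam must be in [0, 1]")
    x_mix = [lam * a + (1.0 - lam) * b for a, b in zip(x_a, x_b)]
    y_mix = [lam * a + (1.0 - lam) * b for a, b in zip(y_a, y_b)]
    return x_mix, y_mix


# Toy embeddings and one-hot labels (hypothetical values).
x_mix, y_mix = mixup(
    x_a=[1.0, 0.0], x_b=[0.0, 1.0],
    y_a=[1.0, 0.0], y_b=[0.0, 1.0],
    lam=0.75,
)
```

The mixed label is soft rather than one-hot, which is how this family of methods lets one training example carry information about two samples at once.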

Rethinking Cross-Attention for Infrared and Visible Image Fusion

Jan 22, 2024

The salient information of an infrared image and the abundant texture of a visible image can be fused to obtain a comprehensive image. As can be known, the current fusion methods based on Transformer techniques for infrared and visible (IV) images have exhibited promising performance. However, the attention mechanism of the previous Transformer-based methods was prone to extract common information from source images without considering the discrepancy information, which limited fusion performance. In this paper, by reevaluating the cross-attention mechanism, we propose an alternate Transformer fusion network (ATFuse) to fuse IV images. Our ATFuse consists of one discrepancy information injection module (DIIM) and two alternate common information injection modules (ACIIM). The DIIM is designed by modifying the vanilla cross-attention mechanism, which can promote the extraction of the discrepancy information of the source images. Meanwhile, the ACIIM is devised by alternately using the vanilla cross-attention mechanism, which can fully mine common information and integrate long dependencies. Moreover, the successful training of ATFuse is facilitated by a proposed segmented pixel loss function, which provides a good trade-off for texture detail and salient structure preservation. The qualitative and quantitative results on public datasets indicate our ATFuse is effective and superior compared to other state-of-the-art methods.
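As background for the "vanilla cross-attention mechanism" that this abstract builds on, the following sketch computes scaled dot-product cross-attention, softmax(QK^T / sqrt(d)) V, in plain Python. It omits the learned query, key, and value projections and does not reproduce the DIIM or ACIIM modules, which the abstract does not specify. The names `cross_attention`, `ir_tokens`, and `vis_tokens` and all numeric values are assumptions for illustration.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(row: List[float]) -> List[float]:
    """Numerically stable softmax over one row of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries: Matrix, keys: Matrix, values: Matrix) -> Matrix:
    """Scaled dot-product cross-attention without learned projections.

    Queries come from one source and keys/values from the other, so each
    query token is rewritten as a weighted average of the other source's
    value tokens.
    """
    d = len(keys[0])
    out = []
    for q in queries:
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys
        ]
        weights = softmax(scores)
        out.append([
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return out


# Hypothetical token features: infrared tokens query the visible tokens.
ir_tokens = [[1.0, 0.0], [0.0, 1.0]]
vis_tokens = [[0.5, 0.5], [1.0, -1.0], [0.0, 2.0]]
fused = cross_attention(ir_tokens, vis_tokens, vis_tokens)
```

Because the softmax weights favor keys that resemble the query, this vanilla form emphasizes what the two sources have in common, which is consistent with the limitation described in the abstract.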
