"Image": models, code, and papers

DETR with Additional Global Aggregation for Cross-domain Weakly Supervised Object Detection

Apr 14, 2023

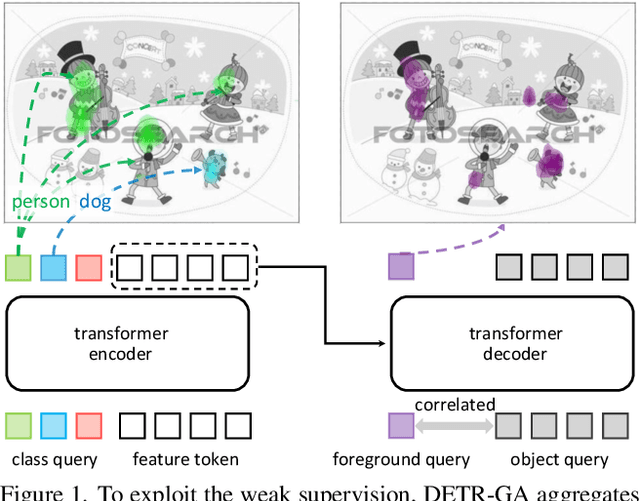

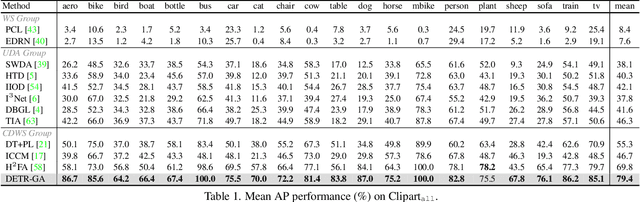

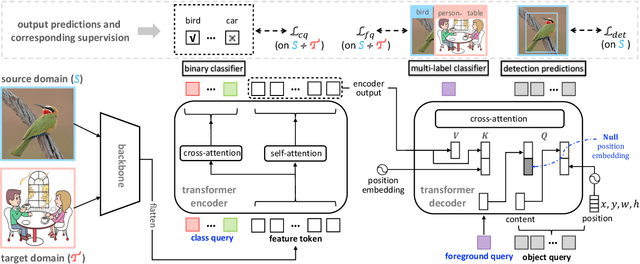

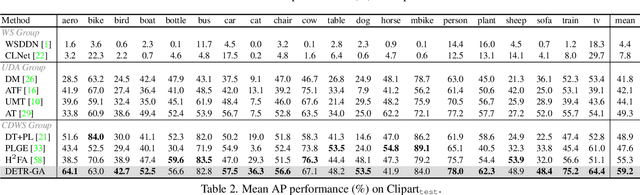

This paper presents a DETR-based method for cross-domain weakly supervised object detection (CDWSOD), which aims to adapt a detector from the source domain to the target domain through weak supervision. We think DETR has strong potential for CDWSOD because of one insight: the encoder and the decoder in DETR are both based on the attention mechanism and are thus capable of aggregating semantics across the entire image. The aggregation results, i.e., image-level predictions, can naturally exploit the weak supervision for domain alignment. Motivated by this, we propose DETR with additional Global Aggregation (DETR-GA), a CDWSOD detector that simultaneously makes "instance-level + image-level" predictions and utilizes "strong + weak" supervision. The key idea of DETR-GA is simple: we add multiple class queries to the encoder and a foreground query to the decoder to aggregate the semantics into image-level predictions. Our query-based aggregation has two advantages. First, in the encoder, the weakly supervised class queries can roughly locate the corresponding positions and exclude distraction from irrelevant regions. Second, through our design, the object queries and the foreground query in the decoder share consensus on the class semantics, so the strong and weak supervision benefit each other for domain alignment. Extensive experiments on four popular cross-domain benchmarks show that DETR-GA significantly improves CDWSOD and advances the state of the art (e.g., 29.0% --> 79.4% mAP on the PASCAL VOC --> Clipart_all dataset).

BiTrackGAN: Cascaded CycleGANs to Constraint Face Aging

Apr 22, 2023

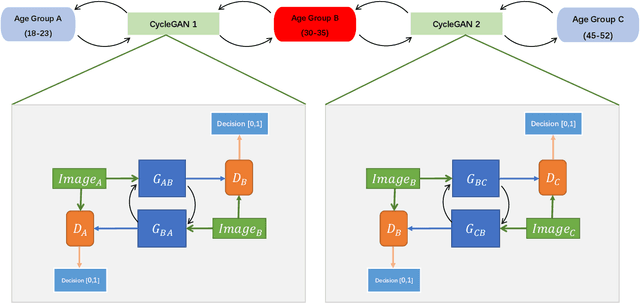

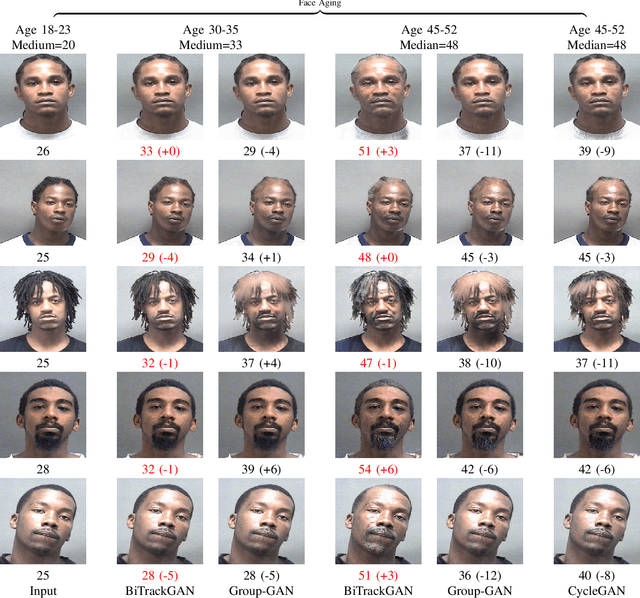

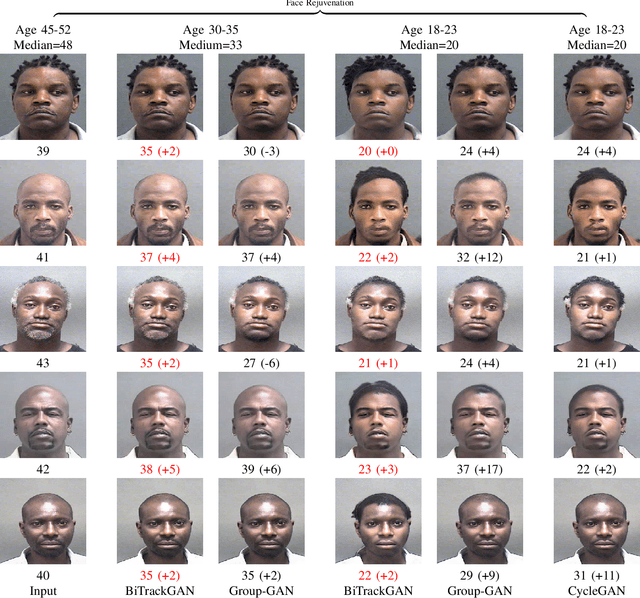

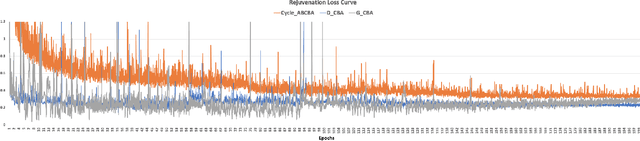

With the increased accuracy of modern computer vision technology, many access control systems are equipped with face recognition functions for faster identification. To maintain high recognition accuracy, the face database must be kept up to date. However, it is impractical to collect the latest facial pictures of the system's users through human effort. To address this challenge, we propose a network together with a bottom-up training method for it. The network, called BiTrackGAN, is a translation pipeline that cascades two CycleGAN blocks (CycleGAN is a widely used generative adversarial network for unpaired image-to-image translation). Bottom-up training induces an ideal intermediate state between these two CycleGAN blocks, which serves as the constraint mechanism. Experimental results show that BiTrackGAN achieves more reasonable and diverse cross-age facial synthesis than other CycleGAN-related methods. As far as we know, it is a novel and effective constraint mechanism for more reasonable and accurate aging synthesis through the CycleGAN approach.

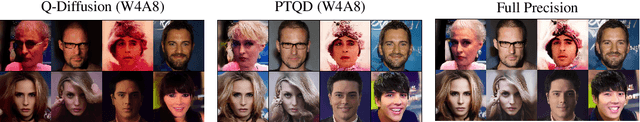

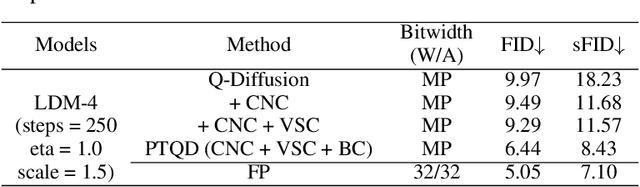

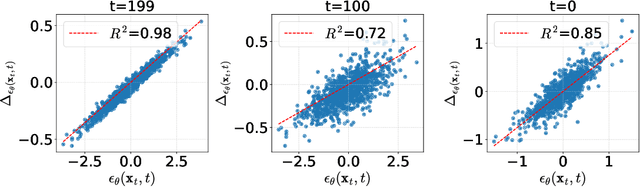

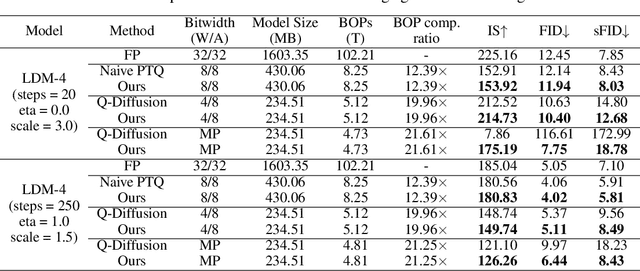

PTQD: Accurate Post-Training Quantization for Diffusion Models

May 18, 2023

Diffusion models have recently dominated image synthesis and other related generative tasks. However, the iterative denoising process is expensive in computations at inference time, making diffusion models less practical for low-latency and scalable real-world applications. Post-training quantization of diffusion models can significantly reduce the model size and accelerate the sampling process without requiring any re-training. Nonetheless, applying existing post-training quantization methods directly to low-bit diffusion models can significantly impair the quality of generated samples. Specifically, for each denoising step, quantization noise leads to deviations in the estimated mean and mismatches with the predetermined variance schedule. Moreover, as the sampling process proceeds, the quantization noise may accumulate, resulting in a low signal-to-noise ratio (SNR) in late denoising steps. To address these challenges, we propose a unified formulation for the quantization noise and diffusion perturbed noise in the quantized denoising process. We first disentangle the quantization noise into its correlated and residual uncorrelated parts regarding its full-precision counterpart. The correlated part can be easily corrected by estimating the correlation coefficient. For the uncorrelated part, we calibrate the denoising variance schedule to absorb the excess variance resulting from quantization. Moreover, we propose a mixed-precision scheme to choose the optimal bitwidth for each denoising step, which prefers low bits to accelerate the early denoising steps while high bits maintain the high SNR for the late steps. Extensive experiments demonstrate that our method outperforms previous post-training quantized diffusion models in generating high-quality samples, with only a 0.06 increase in FID score compared to full-precision LDM-4 on ImageNet 256x256, while saving 19.9x bit operations.
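
The split of quantization noise into a correlated and an uncorrelated part can be illustrated with a small numerical sketch. The code below is not the PTQD implementation: it assumes a linear model in which the noise equals `k` times the full-precision output plus a residual, estimates `k` by least squares on synthetic data, and removes the correlated part by dividing by `1 + k`. The function names, the 5% gain error, and the noise level are all hypothetical choices made for illustration.

```python
import random


def split_quantization_noise(full_precision, quantized):
    """Least-squares split: (quantized - full_precision) = k * full_precision + residual."""
    noise = [q - x for x, q in zip(full_precision, quantized)]
    k = sum(n * x for n, x in zip(noise, full_precision)) / sum(
        x * x for x in full_precision
    )
    residual = [n - k * x for n, x in zip(noise, full_precision)]
    return k, residual


def mean_squared_error(a, b):
    return sum((u - v) ** 2 for u, v in zip(a, b)) / len(a)


rng = random.Random(0)
full_precision = [rng.gauss(0.0, 1.0) for _ in range(10000)]
# Synthetic stand-in for a quantized network output (an assumption, not real data):
# a 5% correlated gain error plus small independent noise.
quantized = [1.05 * x + rng.gauss(0.0, 0.02) for x in full_precision]

k, residual = split_quantization_noise(full_precision, quantized)
# Remove the correlated part; only the uncorrelated residual remains.
corrected = [q / (1.0 + k) for q in quantized]

print("estimated k:", round(k, 3))
print("MSE before correction:", mean_squared_error(quantized, full_precision))
print("MSE after correction:", mean_squared_error(corrected, full_precision))
```

In this toy setting the remaining error after correction comes only from the residual term, which is the part the abstract proposes to absorb into the calibrated variance schedule.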

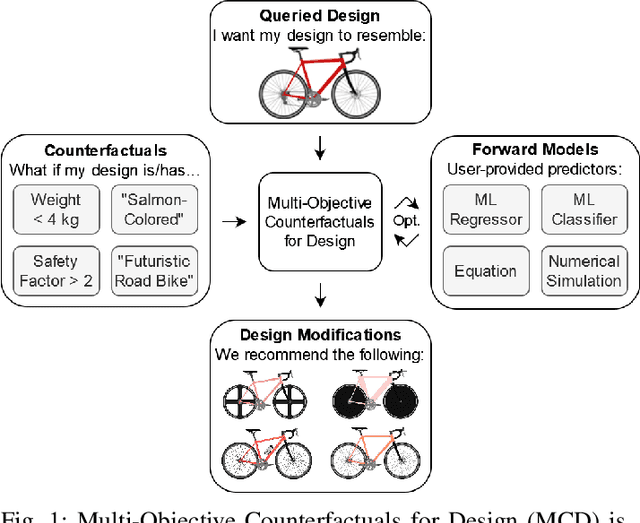

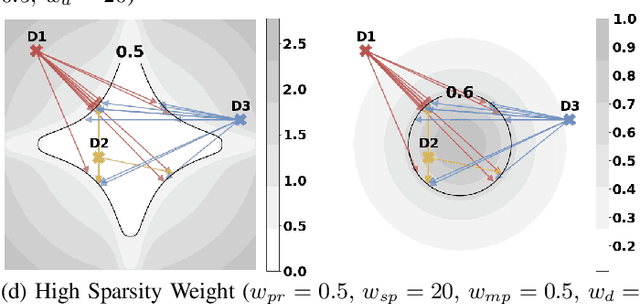

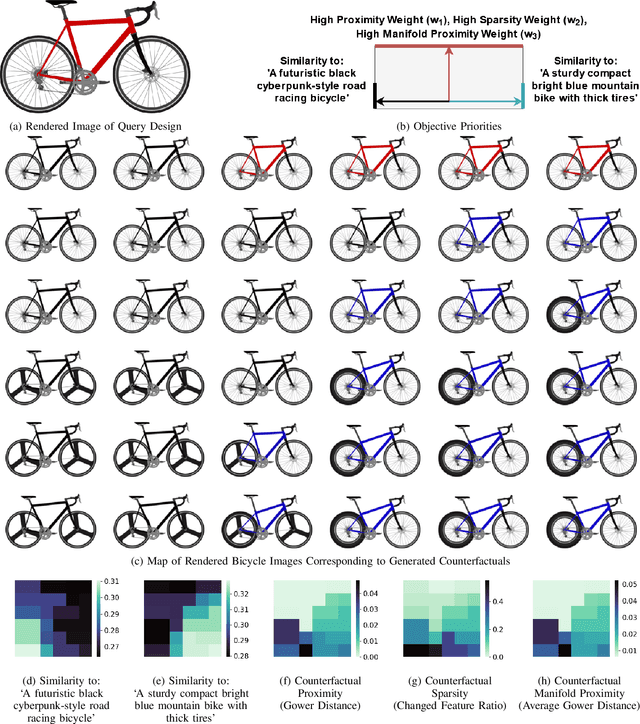

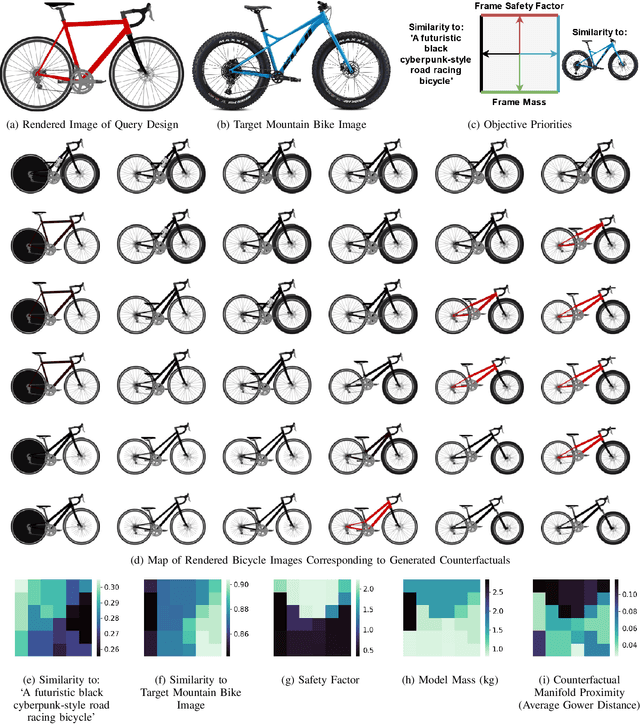

Counterfactuals for Design: A Model-Agnostic Method For Design Recommendations

May 18, 2023

We introduce Multi-Objective Counterfactuals for Design (MCD), a novel method for counterfactual optimization in design problems. Counterfactuals are hypothetical situations that can lead to a different decision or choice. In this paper, the authors frame the counterfactual search problem as a design recommendation tool that can help identify modifications to a design, leading to better functional performance. MCD improves upon existing counterfactual search methods by supporting multi-objective queries, which are crucial in design problems, and by decoupling the counterfactual search and sampling processes, thus enhancing efficiency and facilitating objective tradeoff visualization. The paper demonstrates MCD's core functionality using a two-dimensional test case, followed by three case studies of bicycle design that showcase MCD's effectiveness in real-world design problems. In the first case study, MCD excels at recommending modifications to query designs that can significantly enhance functional performance, such as weight savings and improvements to the structural safety factor. The second case study demonstrates that MCD can work with a pre-trained language model to suggest design changes based on a subjective text prompt effectively. Lastly, the authors task MCD with increasing a query design's similarity to a target image and text prompt while simultaneously reducing weight and improving structural performance, demonstrating MCD's performance on a complex multimodal query. Overall, MCD has the potential to provide valuable recommendations for practitioners and design automation researchers looking for answers to their "What if" questions by exploring hypothetical design modifications and their impact on multiple design objectives. The code, test problems, and datasets used in the paper are available to the public at decode.mit.edu/projects/counterfactuals/.

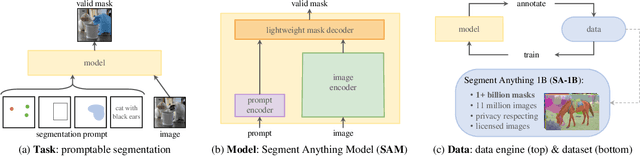

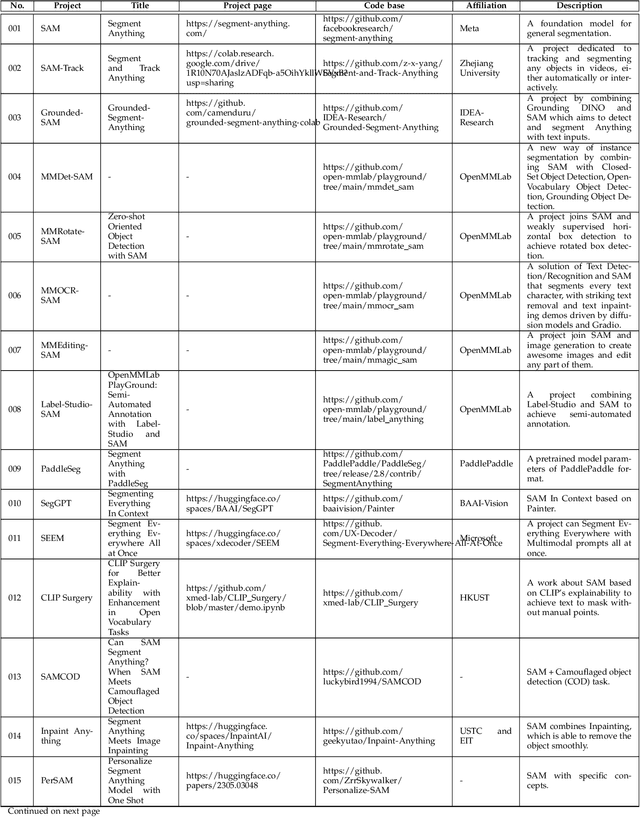

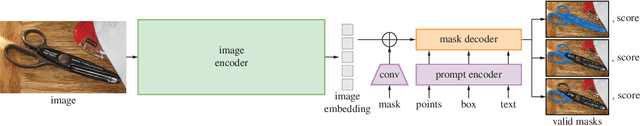

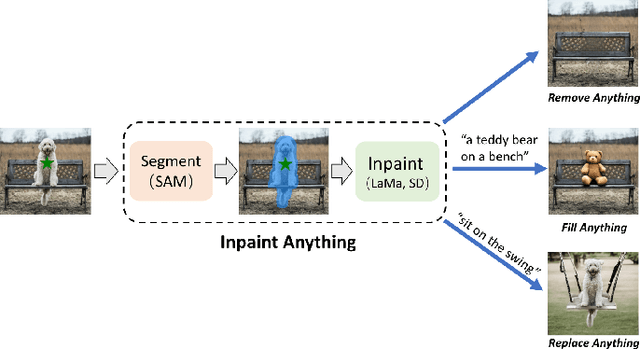

A Comprehensive Survey on Segment Anything Model for Vision and Beyond

May 19, 2023

Artificial intelligence (AI) is evolving towards artificial general intelligence, which refers to the ability of an AI system to perform a wide range of tasks and exhibit a level of intelligence similar to that of a human being. This is in contrast to narrow or specialized AI, which is designed to perform specific tasks with a high degree of efficiency. There is therefore an urgent need to design a general class of models, which we term foundation models, trained on broad data that can be adapted to various downstream tasks. The recently proposed segment anything model (SAM) has made significant progress in breaking the boundaries of segmentation, greatly promoting the development of foundation models for computer vision. To fully comprehend SAM, we conduct a survey study. As the first work to comprehensively review the progress of the segment anything task for vision and beyond based on the SAM foundation model, this survey focuses on its applications to various tasks and data types by discussing its historical development, recent progress, and profound impact on broad applications. We first introduce the background and terminology for foundation models including SAM, as well as state-of-the-art methods contemporaneous with SAM that are significant for the segment anything task. Then, we analyze and summarize the advantages and limitations of SAM across various image processing applications, including software scenes, real-world scenes, and complex scenes. Importantly, many insights are drawn to guide future research to develop more versatile foundation models and improve the architecture of SAM. We also summarize numerous other applications of SAM in vision and beyond. Finally, we maintain a continuously updated paper list and an open-source project summary for the SAM foundation model at https://github.com/liliu-avril/Awesome-Segment-Anything.

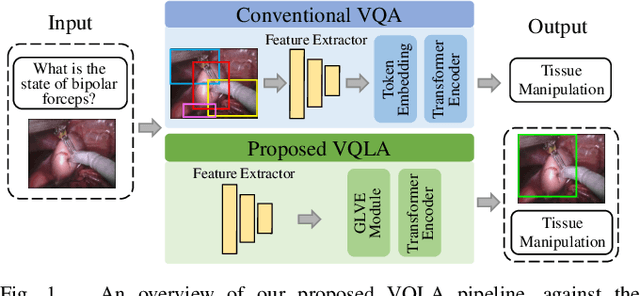

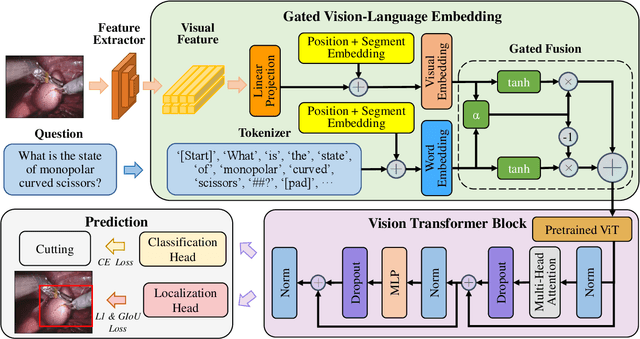

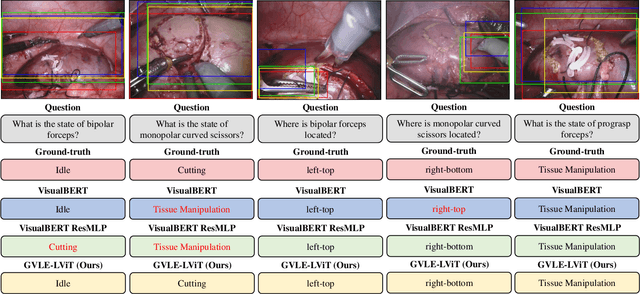

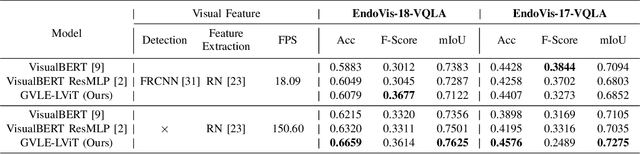

Surgical-VQLA: Transformer with Gated Vision-Language Embedding for Visual Question Localized-Answering in Robotic Surgery

May 19, 2023

Despite the availability of computer-aided simulators and recorded videos of surgical procedures, junior residents still rely heavily on experts to answer their queries. However, expert surgeons are often overloaded with clinical and academic workloads and have limited time to answer. For this purpose, we develop a surgical question-answering system to facilitate robot-assisted surgical scene and activity understanding from recorded videos. Most existing VQA methods require an object detector and a region-based feature extractor to extract visual features and fuse them with the embedded text of the question for answer generation. However, (1) surgical object detection models are scarce due to smaller datasets and a lack of bounding box annotations; (2) current fusion strategies for heterogeneous modalities like text and image are naive; (3) localized answering is missing, which is crucial in complex surgical scenarios. In this paper, we propose Visual Question Localized-Answering in Robotic Surgery (Surgical-VQLA) to localize the specific surgical area during the answer prediction. To deal with the fusion of the heterogeneous modalities, we design gated vision-language embedding (GVLE) to build input patches for the Language Vision Transformer (LViT) to predict the answer. To obtain localization, we add a detection head in parallel with the prediction head of the LViT. We also integrate GIoU loss to boost localization performance while preserving the accuracy of the question-answering model. We annotate two VQLA datasets using publicly available surgical videos from the MICCAI challenges EndoVis-17 and 18. Our validation results suggest that Surgical-VQLA can better understand the surgical scene and localize the specific area related to the question-answering. GVLE presents an efficient language-vision embedding technique by showing superior performance over the existing benchmarks.
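
For readers unfamiliar with the GIoU loss mentioned above, the following is a minimal sketch of the standard generalized IoU formula for two axis-aligned boxes. It is not taken from the Surgical-VQLA code; the `(x1, y1, x2, y2)` box format and the function names are assumptions made for this example.

```python
def giou(box_a, box_b):
    """Generalized IoU of two boxes given as (x1, y1, x2, y2) with x1 < x2, y1 < y2."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b

    # Intersection area (zero if the boxes do not overlap).
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    intersection = inter_w * inter_h

    area_a = (ax2 - ax1) * (ay2 - ay1)
    area_b = (bx2 - bx1) * (by2 - by1)
    union = area_a + area_b - intersection
    iou = intersection / union

    # Area of the smallest axis-aligned box enclosing both inputs.
    enclosing = (max(ax2, bx2) - min(ax1, bx1)) * (max(ay2, by2) - min(ay1, by1))

    # Penalize the empty space inside the enclosing box.
    return iou - (enclosing - union) / enclosing


def giou_loss(predicted, target):
    """Loss in [0, 2]: 0 for identical boxes, larger for distant boxes."""
    return 1.0 - giou(predicted, target)


print(giou_loss((0, 0, 2, 2), (0, 0, 2, 2)))  # identical boxes
print(giou_loss((0, 0, 1, 1), (2, 0, 3, 1)))  # disjoint boxes
```

Unlike plain IoU, the loss still changes with distance when the boxes do not overlap, which is why it is commonly used for box regression.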

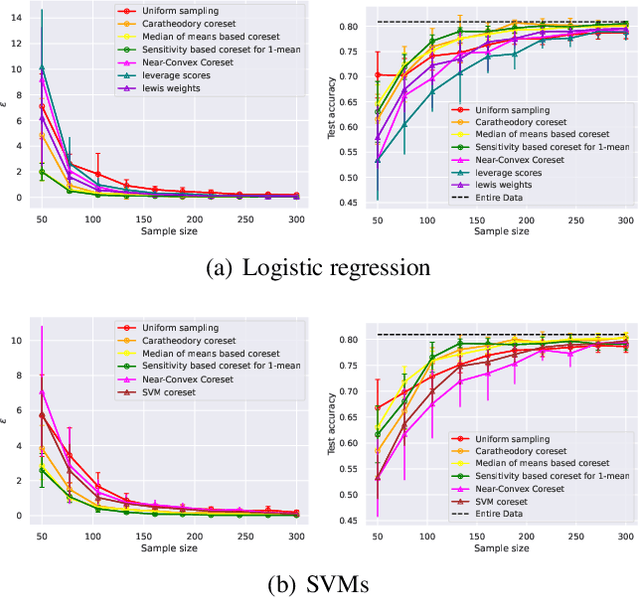

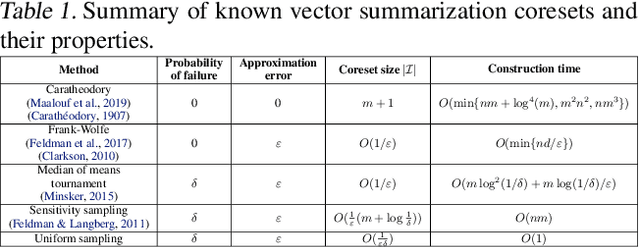

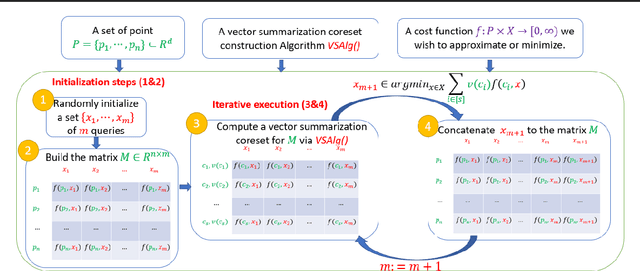

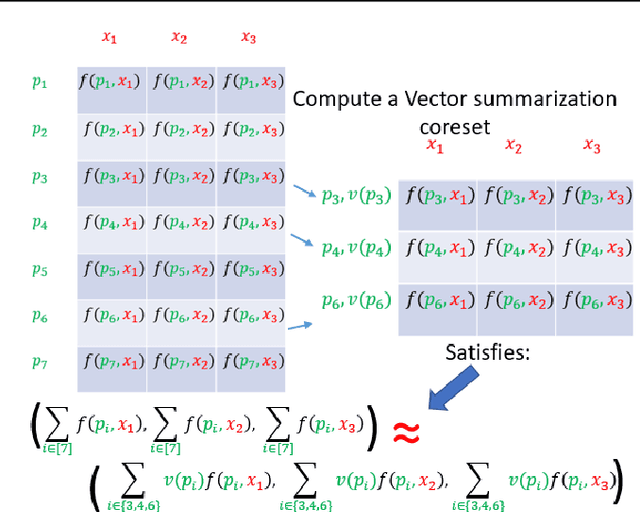

AutoCoreset: An Automatic Practical Coreset Construction Framework

May 19, 2023

A coreset is a tiny weighted subset of an input set that closely resembles the loss function with respect to a certain set of queries. Coresets have become prevalent in machine learning as they have been shown to be advantageous for many applications. While coreset research is an active area, coresets are unfortunately constructed in a problem-dependent manner: for each problem, a new coreset construction algorithm is usually suggested, a process that may take time or may be hard for new researchers in the field. Even the generic frameworks require additional (problem-dependent) computations or proofs to be done by the user. Besides, many problems do not have (provable) small coresets, limiting their applicability. To this end, we suggest an automatic practical framework for constructing coresets, which requires (only) the input data and the desired cost function from the user, without the need for any other task-related computation to be done by the user. To do so, we reduce the problem of approximating a loss function to an instance of vector summation approximation, where the vectors we aim to sum are loss vectors of a specific subset of the queries, such that we aim to approximate the image of the function on this subset. We show that while this set is limited, the coreset is quite general. An extensive experimental study on various machine learning applications is also conducted. Finally, we provide a "plug and play" style implementation, proposing a user-friendly system that can be easily used to apply coresets for many problems. Full open source code can be found at https://github.com/alaamaalouf/AutoCoreset. We believe that these contributions enable future research and easier use and applications of coresets.
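
The idea of a weighted subset whose weighted sum of loss vectors approximates the full sum can be shown with a toy sketch. The code below is not the AutoCoreset algorithm: it uses plain uniform sampling with equal weights as a naive baseline, and the one-dimensional data, the squared-distance loss, the three queries, and all function names are assumptions chosen for illustration.

```python
import random


def loss_vector(point, queries):
    """Squared-distance loss of one data point against every query."""
    return [(point - q) ** 2 for q in queries]


def weighted_loss_sum(points, weights, queries):
    """Weighted sum of the per-point loss vectors, one entry per query."""
    totals = [0.0] * len(queries)
    for point, weight in zip(points, weights):
        for i, value in enumerate(loss_vector(point, queries)):
            totals[i] += weight * value
    return totals


rng = random.Random(0)
data = [rng.random() for _ in range(2000)]
queries = [0.0, 0.5, 1.0]

# Exact loss on the full input: every point has weight 1.
full = weighted_loss_sum(data, [1.0] * len(data), queries)

# Naive baseline "coreset": a uniform sample, reweighted so the weights sum to n.
subset_size = 400
subset = rng.sample(data, subset_size)
weights = [len(data) / subset_size] * subset_size
approx = weighted_loss_sum(subset, weights, queries)

relative_errors = [abs(a - f) / f for a, f in zip(approx, full)]
print("relative error per query:", relative_errors)
```

A coreset construction aims to reach a small error on every query with far fewer points than uniform sampling needs, typically by choosing the points and weights non-uniformly.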

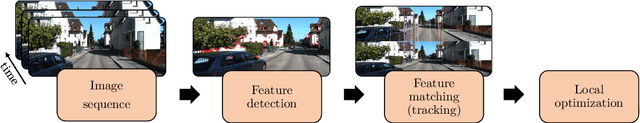

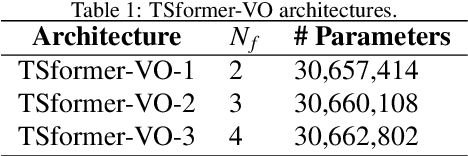

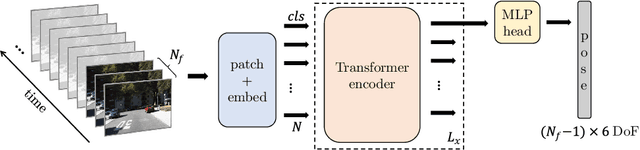

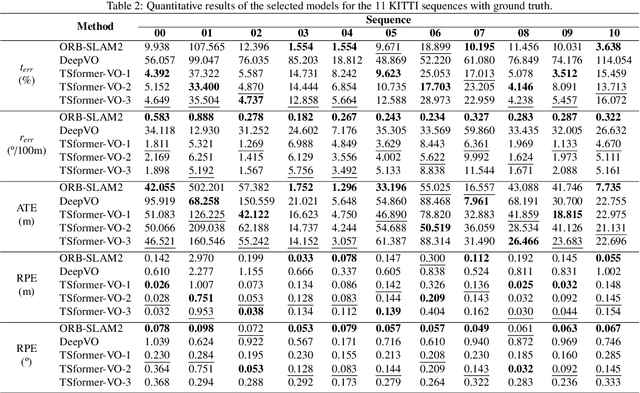

Transformer-based model for monocular visual odometry: a video understanding approach

May 10, 2023

Estimating the camera pose given images of a single camera is a traditional task in mobile robots and autonomous vehicles. This problem is called monocular visual odometry, and it often relies on geometric approaches that require engineering effort for a specific scenario. Deep learning methods have been shown to generalize well given proper training and a considerable amount of available data. Transformer-based architectures have dominated the state of the art in natural language processing and computer vision tasks, such as image and video understanding. In this work, we treat monocular visual odometry as a video understanding task to estimate the camera's 6-DoF pose. We contribute by presenting the TSformer-VO model, based on spatio-temporal self-attention mechanisms, to extract features from clips and estimate the motions in an end-to-end manner. Our approach achieved competitive state-of-the-art performance compared with geometry-based and deep learning-based methods on the KITTI visual odometry dataset, outperforming the DeepVO implementation widely accepted in the visual odometry community.

The Image of the Process Interpretation of Regular Expressions is Not Closed under Bisimulation Collapse

Mar 15, 2023

Axiomatization and expressibility problems for Milner's process semantics (1984) of regular expressions modulo bisimilarity have turned out to be difficult for the full class of expressions with deadlock 0 and empty step 1. We report on a phenomenon that arises from the added presence of 1 when 0 is available, and that brings a crucial reason for this difficulty into focus. To wit, while interpretations of 1-free regular expressions are closed under bisimulation collapse, this is not the case for the interpretations of arbitrary regular expressions. Process graph interpretations of 1-free regular expressions satisfy the loop existence and elimination property LEE, which is preserved under bisimulation collapse. These features of LEE were applied for showing that an equational proof system for 1-free regular expressions modulo bisimilarity is complete, and that it is decidable in polynomial time whether a process graph is bisimilar to the interpretation of a 1-free regular expression. While interpretations of regular expressions do not satisfy the property LEE in general, we show that LEE can be recovered by refined interpretations as graphs with 1-transitions (which are similar to silent steps for automata). This suggests that LEE can be expedient also for the general axiomatization and expressibility problems. But a new phenomenon emerges that needs to be addressed: the property of a process graph 'can be refined into a process graph with 1-transitions and with LEE' is not preserved under bisimulation collapse. We provide a 10-vertex graph with two 1-transitions that satisfies LEE, and in which a pair of bisimilar vertices cannot be collapsed onto each other while preserving the refinement property. This implies that the image of the process interpretation of regular expressions is not closed under bisimulation collapse.

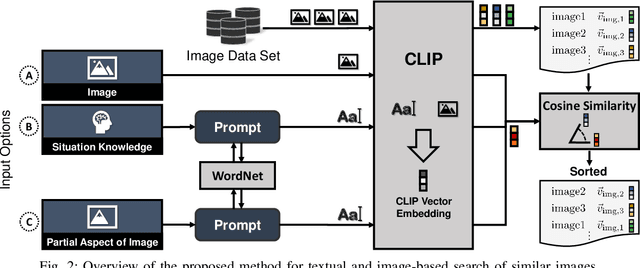

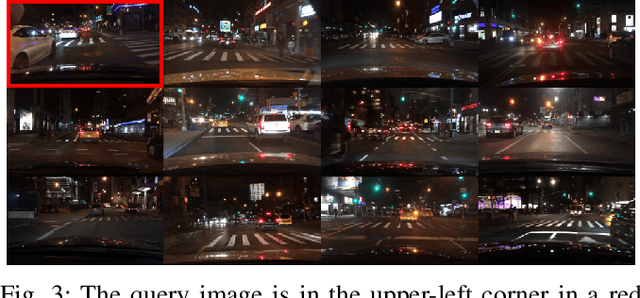

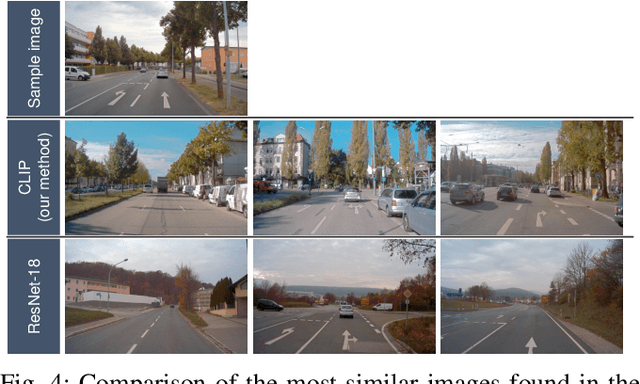

Focus on the Challenges: Analysis of a User-friendly Data Search Approach with CLIP in the Automotive Domain

Apr 21, 2023

Handling large amounts of data has become key to developing automated driving systems. Especially for developing highly automated driving functions, working with images has become increasingly challenging due to the sheer size of the required data. Such data has to satisfy different requirements to be usable in machine learning-based approaches. Thus, engineers need to fully understand their large image data sets for the development and testing of machine learning algorithms. However, current approaches lack automatability, are not generic, and are limited in their expressiveness. Hence, this paper analyzes a state-of-the-art text and image embedding neural network and guides the reader through its application in the automotive domain. This approach enables the search for similar images and the search based on a human-understandable text-based description. Our experiments show the automatability and generalizability of our proposed method for handling large data sets in the automotive domain.
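
Searching images by text or by a similar image in a shared embedding space usually reduces to ranking by cosine similarity. The sketch below illustrates only that ranking step and is not the paper's pipeline: the three-dimensional vectors stand in for embeddings that a model such as CLIP would produce, and the file names, values, and function names are hypothetical.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def search(query_embedding, image_embeddings, top_k=2):
    """Return the names of the top_k images most similar to the query."""
    scored = [
        (cosine_similarity(query_embedding, embedding), name)
        for name, embedding in image_embeddings.items()
    ]
    scored.sort(reverse=True)
    return [name for _, name in scored[:top_k]]


# Toy stand-ins for precomputed image embeddings (assumed, not real model output).
image_embeddings = {
    "highway_day.png": [0.9, 0.1, 0.0],
    "city_night.png": [0.1, 0.9, 0.2],
    "rain_intersection.png": [0.2, 0.6, 0.7],
}

# Toy stand-in for the embedding of a text query such as "rainy street at night".
text_query = [0.0, 0.7, 0.7]

results = search(text_query, image_embeddings)
print(results)  # ['rain_intersection.png', 'city_night.png']
```

Because text and images share one embedding space, the same `search` function serves both use cases: pass a text embedding for description-based search or an image embedding for similar-image search.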
