"Image To Image Translation": models, code, and papers

Multi-Modality Image Super-Resolution using Generative Adversarial Networks

Jun 22, 2022

Over the past few years, deep learning-based techniques such as Generative Adversarial Networks (GANs) have significantly improved solutions to image super-resolution and image-to-image translation problems. In this paper, we propose a solution to the joint problem of image super-resolution and multi-modality image-to-image translation. The problem can be stated as the recovery of a high-resolution image in one modality, given a low-resolution observation of the same image in an alternative modality. Our paper offers two models to address this problem; both are evaluated on the recovery of high-resolution day images given low-resolution night images of the same scene. Promising qualitative and quantitative results are presented for each model.

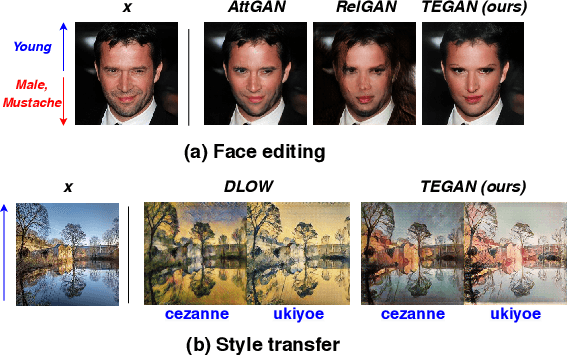

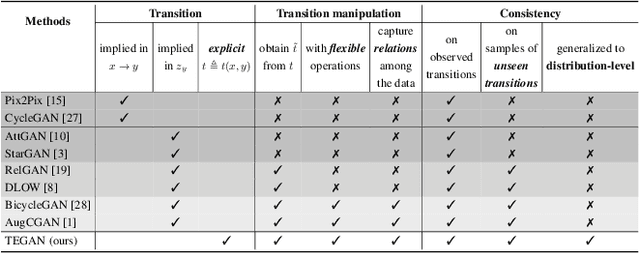

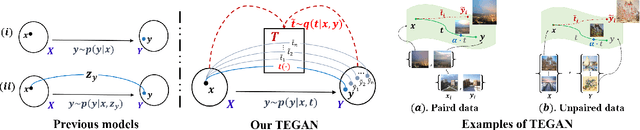

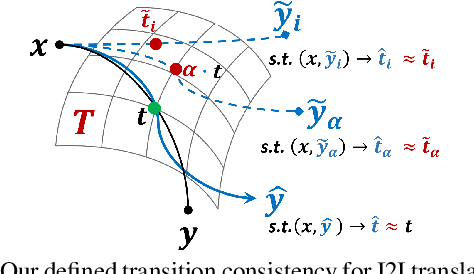

Generative Transition Mechanism to Image-to-Image Translation via Encoded Transformation

Mar 09, 2021

In this paper, we revisit the Image-to-Image (I2I) translation problem with transition consistency, namely the consistency defined on the conditional data mapping between each data pair. Explicitly parameterizing each data mapping with a transition variable $t$, i.e., $x \overset{t(x,y)}{\mapsto}y$, we discover that existing I2I translation models mainly focus on maintaining consistency on results, e.g., image reconstruction or attribute prediction, named result consistency in our paper. This restricts their ability to generalize and generate satisfactory results with unseen transitions in the test phase. Consequently, we propose to enforce both result consistency and transition consistency for I2I translation, so that the input and output are more closely consistent. To improve the generalization ability of the translation model, we propose transition encoding to facilitate explicit regularization of these two kinds of consistency on unseen transitions. We further generalize such explicitly regularized consistencies to the distribution level, thus facilitating a generalized overall consistency for I2I translation problems. With the above design, our proposed model, named Transition Encoding GAN (TEGAN), possesses strong generalization ability and generates realistic and semantically consistent translation results with unseen transitions in the test phase. It also provides a unified understanding of the existing GAN-based I2I transition models through our explicit modeling of the data mapping, i.e., the transition. Experiments on four different I2I translation tasks demonstrate the efficacy and generality of TEGAN.

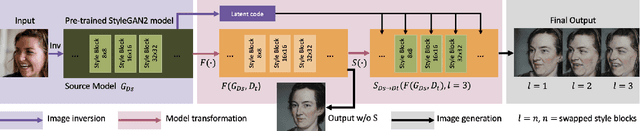

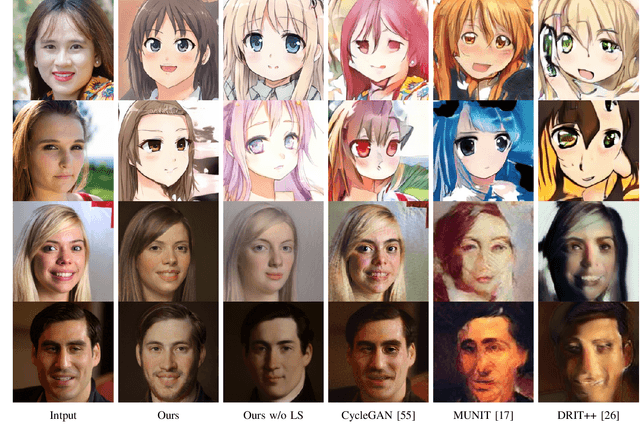

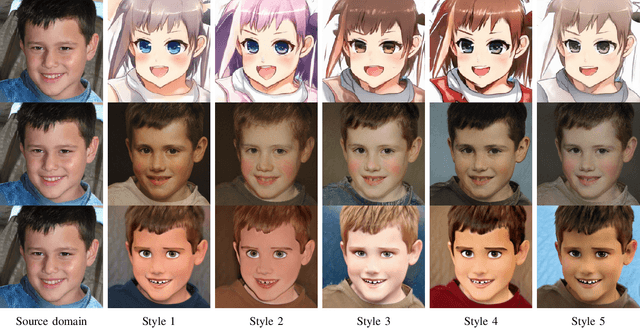

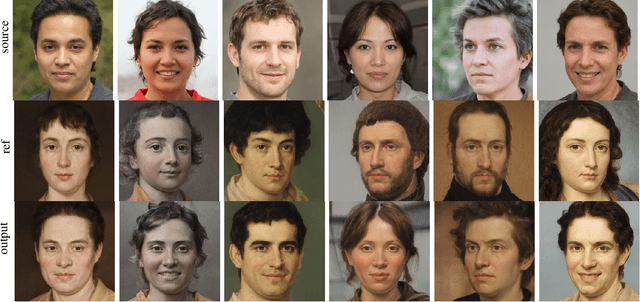

Unsupervised Image-to-Image Translation via Pre-trained StyleGAN2 Network

Oct 12, 2020

Image-to-Image (I2I) translation is a hot topic in academia, and it has also been applied in industry for tasks like image synthesis, super-resolution, and colorization. However, traditional I2I translation methods train on data from two or more domains together. This requires substantial computational resources. Moreover, the results are of lower quality and contain many more artifacts. The training process can be unstable when the data in different domains are not balanced, and mode collapse is more likely to happen. We propose a new I2I translation method that generates a new model in the target domain via a series of model transformations on a pre-trained StyleGAN2 model in the source domain. We then propose an inversion method to convert between an image and its latent vector. By feeding the latent vector into the generated model, we can perform I2I translation between the source domain and the target domain. Both qualitative and quantitative evaluations were conducted to show that the proposed method achieves outstanding performance in terms of image quality, diversity, and semantic similarity to the input and reference images compared to state-of-the-art works.

Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks

Aug 30, 2018

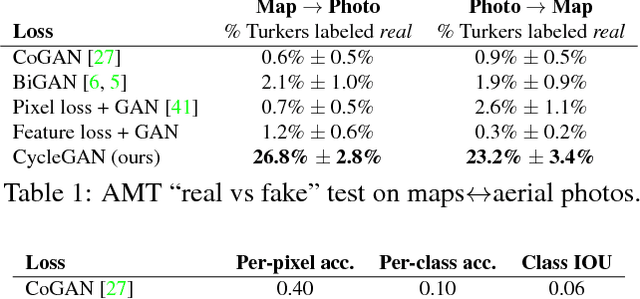

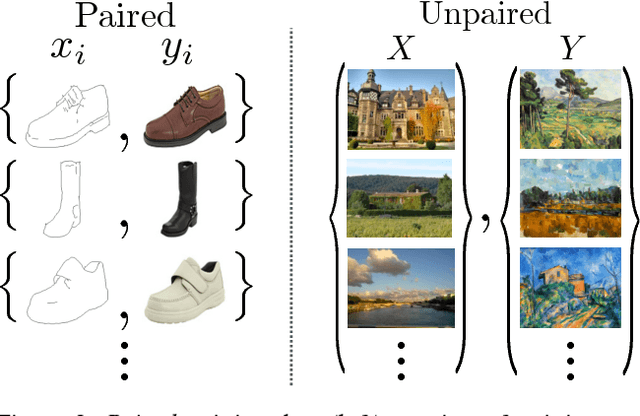

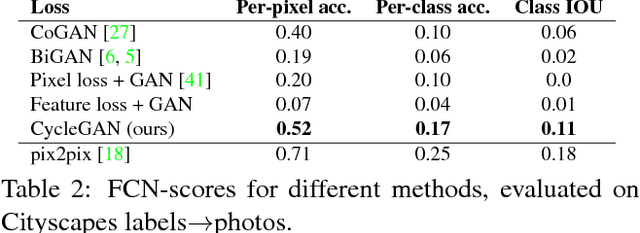

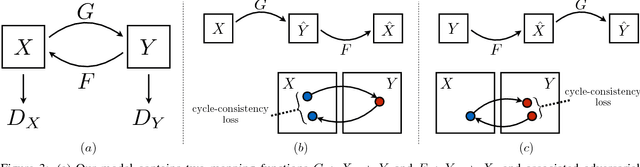

Image-to-image translation is a class of vision and graphics problems where the goal is to learn the mapping between an input image and an output image using a training set of aligned image pairs. However, for many tasks, paired training data will not be available. We present an approach for learning to translate an image from a source domain $X$ to a target domain $Y$ in the absence of paired examples. Our goal is to learn a mapping $G: X \rightarrow Y$ such that the distribution of images from $G(X)$ is indistinguishable from the distribution $Y$ using an adversarial loss. Because this mapping is highly under-constrained, we couple it with an inverse mapping $F: Y \rightarrow X$ and introduce a cycle consistency loss to push $F(G(X)) \approx X$ (and vice versa). Qualitative results are presented on several tasks where paired training data does not exist, including collection style transfer, object transfiguration, season transfer, photo enhancement, etc. Quantitative comparisons against several prior methods demonstrate the superiority of our approach.
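The cycle consistency term described in this abstract can be illustrated with a small NumPy sketch. It assumes the two mappings are given as plain Python callables `G` and `F` (toy stand-ins for the trained generators, not the authors' networks) and uses the mean absolute error as a normalized L1 distance.

```python
import numpy as np

def cycle_consistency_loss(G, F, x_batch, y_batch):
    """Mean absolute cycle error in both directions.

    G maps domain X to domain Y, F maps Y back to X.
    Both are hypothetical callables used only for illustration.
    """
    # Forward cycle: x -> G(x) -> F(G(x)) should return to x.
    forward = np.mean(np.abs(F(G(x_batch)) - x_batch))
    # Backward cycle: y -> F(y) -> G(F(y)) should return to y.
    backward = np.mean(np.abs(G(F(y_batch)) - y_batch))
    return float(forward + backward)

# Toy mappings: G doubles intensities, F halves them (exact inverses).
G = lambda x: 2.0 * x
F = lambda y: 0.5 * y

rng = np.random.default_rng(0)
x = rng.random((4, 8, 8))
y = rng.random((4, 8, 8))
print(cycle_consistency_loss(G, F, x, y))
```

In a full training setup this term would be added to the adversarial losses of both generators; the sketch only shows how the cycle term penalizes mappings that are not mutual inverses.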

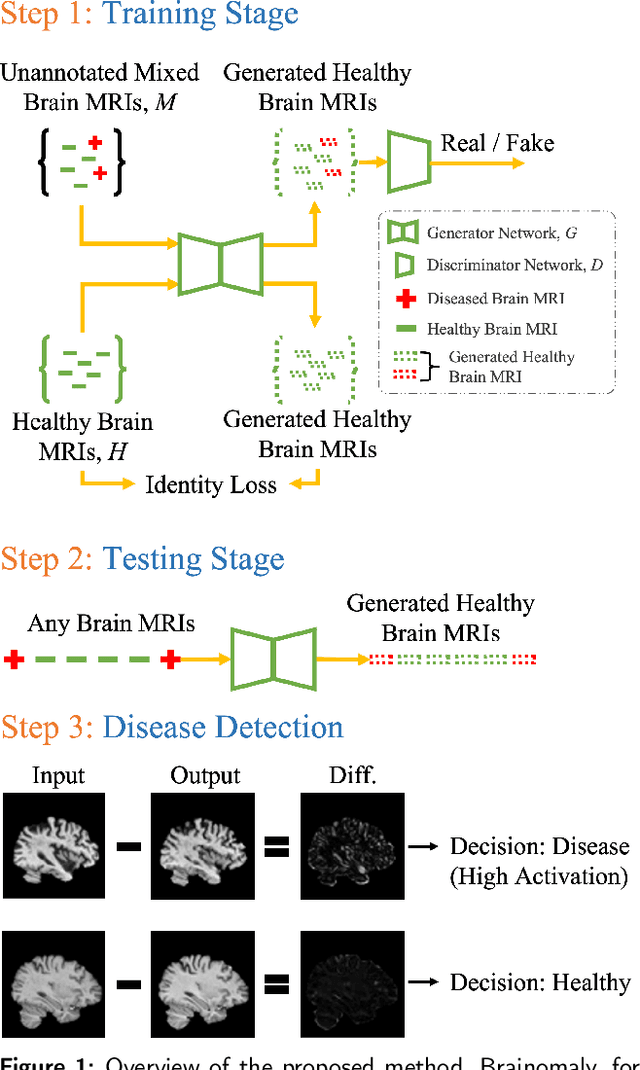

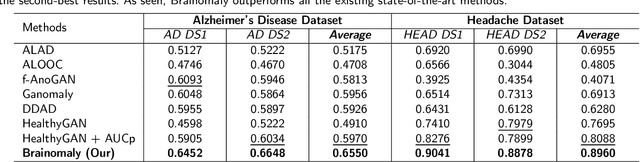

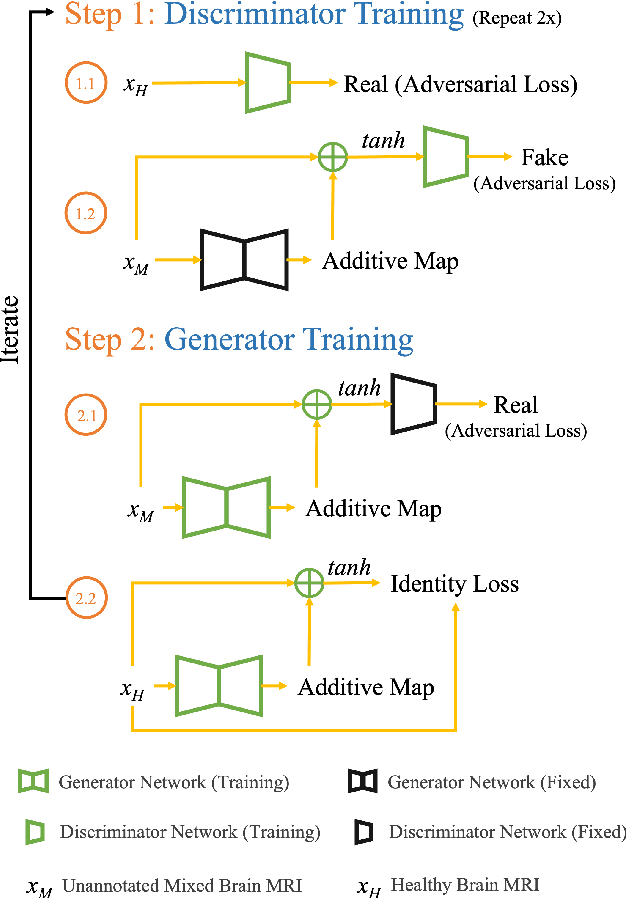

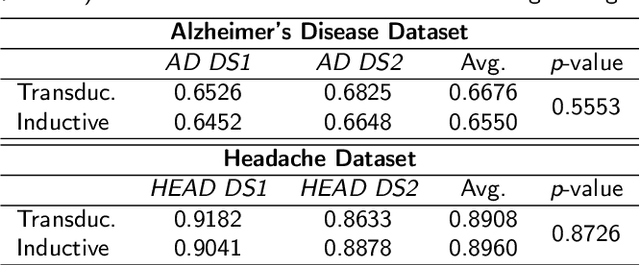

Brainomaly: Unsupervised Neurologic Disease Detection Utilizing Unannotated T1-weighted Brain MR Images

Feb 18, 2023

Deep neural networks have revolutionized the field of supervised learning by enabling accurate predictions through learning from large annotated datasets. However, acquiring large annotated medical imaging datasets is a challenging task, especially for rare diseases, due to the high cost, time, and effort required for annotation. In these scenarios, unsupervised disease detection methods, such as anomaly detection, can save significant human effort. A commonly used approach for anomaly detection is to learn from images of healthy subjects only, assuming the model will detect images from diseased subjects as outliers. However, in many real-world scenarios, unannotated datasets with a mix of healthy and diseased individuals are available. Recent studies have shown improvement in unsupervised disease/anomaly detection using such datasets of unannotated images from healthy and diseased individuals compared to datasets that only include images from healthy individuals. A major issue remains unaddressed in these studies: how to select the best model for inference from a set of trained models without annotated samples. To address this issue, we propose Brainomaly, a GAN-based image-to-image translation method for neurologic disease detection using unannotated T1-weighted brain MRIs of individuals with neurologic diseases and healthy subjects. Brainomaly is trained to remove the diseased regions from the input brain MRIs and generate MRIs of corresponding healthy brains. Instead of generating the healthy images directly, Brainomaly generates an additive map where each voxel indicates the amount of change required to make the input image look healthy. In addition, Brainomaly uses a pseudo-AUC metric for inference model selection, which further improves the detection performance. Brainomaly outperforms existing state-of-the-art methods by large margins.
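The additive-map idea can be sketched as follows. This is a minimal illustration, not the Brainomaly implementation: the intensity range `[0, 1]`, the function names, and the use of the mean absolute map value as an anomaly score are all assumptions made for the example.

```python
import numpy as np

def apply_additive_map(image, additive_map):
    """Add the predicted per-voxel change to the input image.

    Assumes intensities are normalized to [0, 1] (an assumption,
    not something stated in the abstract).
    """
    return np.clip(image + additive_map, 0.0, 1.0)

def anomaly_score(additive_map):
    """Hypothetical score: average magnitude of the required change.

    A map close to zero means the input already looks healthy.
    """
    return float(np.mean(np.abs(additive_map)))

# Toy 2D "scan" with one voxel that the map darkens.
scan = np.full((4, 4), 0.5)
change = np.zeros((4, 4))
change[1, 1] = -0.4

healthy = apply_additive_map(scan, change)
print(healthy[1, 1], anomaly_score(change))
```

Predicting a change map rather than the full image means that an all-zero output leaves healthy inputs untouched, which is what makes the map itself usable as a detection signal in this sketch.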

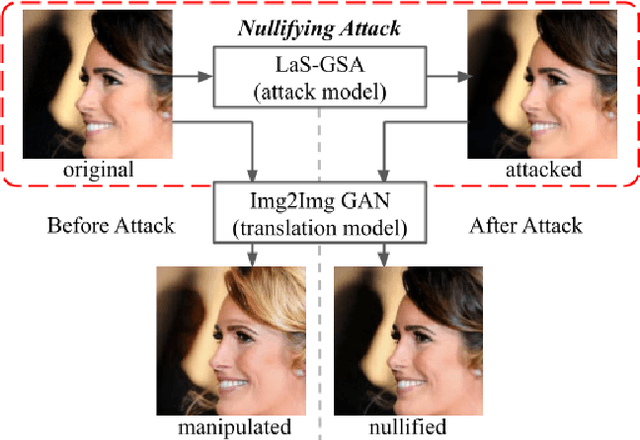

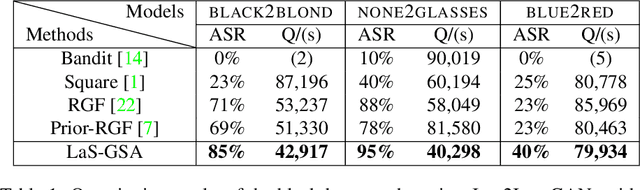

Attack as the Best Defense: Nullifying Image-to-image Translation GANs via Limit-aware Adversarial Attack

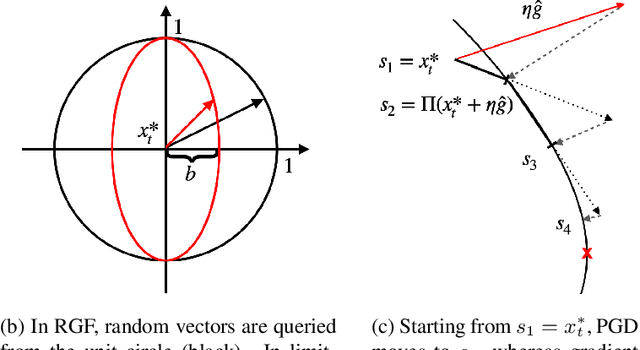

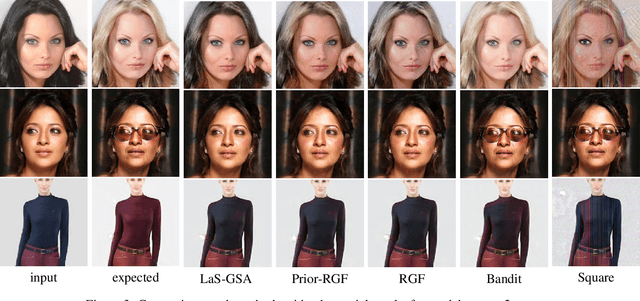

Oct 06, 2021

With the successful creation of high-quality image-to-image (img2img) translation GANs come unethical applications such as DeepFake and DeepNude. Such misuses of img2img techniques present a challenging problem for society. In this work, we tackle the problem by introducing the Limit-Aware Self-Guiding Gradient Sliding Attack (LaS-GSA). LaS-GSA follows the Nullifying Attack to cancel the img2img translation process under a black-box setting. In other words, by processing input images with the proposed LaS-GSA before publishing, any targeted img2img GAN can be nullified, preventing the model from maliciously manipulating the images. To improve efficiency, we introduce limit-aware random gradient-free estimation and a gradient sliding mechanism to estimate a gradient that adheres to the adversarial limit, i.e., the pixel value limitations of the adversarial example. Theoretical justifications validate how the above techniques prevent inefficiency caused by the adversarial limit in both the direction and the step length. Furthermore, an effective self-guiding prior is extracted solely from the threat model and the target image to efficiently leverage the prior information and guide the gradient estimation process. Extensive experiments demonstrate that LaS-GSA requires fewer queries to nullify the image translation process, with higher success rates, than four state-of-the-art black-box methods.
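For readers unfamiliar with black-box gradient estimation, the sketch below shows a generic random gradient-free (RGF) estimator. It is not the paper's limit-aware variant: clipping each probe to `[0, 1]` is only a simple stand-in for respecting pixel limits, and `loss_fn`, `num_queries`, `sigma`, and `seed` are illustrative names.

```python
import numpy as np

def estimate_gradient_rgf(loss_fn, x, num_queries=200, sigma=1e-3, seed=0):
    """Estimate the gradient of loss_fn at x using only function queries.

    Each query perturbs x along a random unit direction u and uses the
    finite difference (loss(x + sigma*u) - loss(x)) / sigma as the
    directional derivative along u.
    """
    rng = np.random.default_rng(seed)
    base_loss = loss_fn(x)
    grad = np.zeros_like(x, dtype=float)
    for _ in range(num_queries):
        u = rng.standard_normal(x.shape)
        u /= np.linalg.norm(u)
        # Keep the probe inside the valid pixel range (assumed [0, 1]).
        probe = np.clip(x + sigma * u, 0.0, 1.0)
        grad += (loss_fn(probe) - base_loss) / sigma * u
    return grad / num_queries

# Toy black box: squared distance to a fixed target "image".
target = np.linspace(0.0, 1.0, 16)
loss = lambda z: float(np.sum((z - target) ** 2))

x0 = np.full(16, 0.5)
g_est = estimate_gradient_rgf(loss, x0)
g_true = 2.0 * (x0 - target)
cosine = g_est @ g_true / (np.linalg.norm(g_est) * np.linalg.norm(g_true))
print(cosine)
```

The estimate recovers the gradient direction rather than its scale, so it is compared with the analytic gradient by cosine similarity. When `x` sits at a pixel limit, clipping distorts the probes, which is the kind of inefficiency the abstract says the limit-aware estimation is designed to avoid.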

Improving Diffusion-based Image Translation using Asymmetric Gradient Guidance

Jun 07, 2023

Diffusion models have shown significant progress in image translation tasks recently. However, due to their stochastic nature, there's often a trade-off between style transformation and content preservation. Current strategies aim to disentangle style and content, preserving the source image's structure while successfully transitioning from a source to a target domain under text or one-shot image conditions. Yet, these methods often require computationally intense fine-tuning of diffusion models or additional neural networks. To address these challenges, here we present an approach that guides the reverse process of diffusion sampling by applying asymmetric gradient guidance. This results in quicker and more stable image manipulation for both text-guided and image-guided image translation. Our model's adaptability allows it to be implemented with both image- and latent-diffusion models. Experiments show that our method outperforms various state-of-the-art models in image translation tasks.

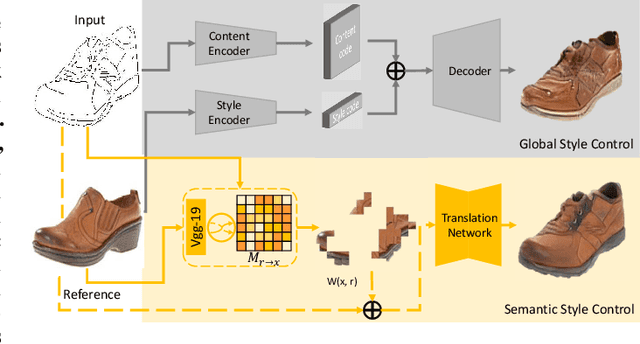

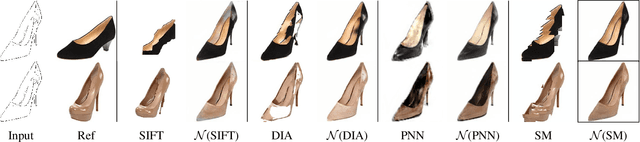

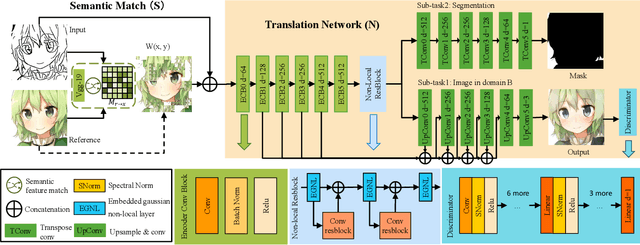

Semantic Example Guided Image-to-Image Translation

Oct 04, 2019

Many image-to-image (I2I) translation problems are inherently diverse: a single input may have many valid counterparts. Prior works proposed multi-modal networks that can build a many-to-many mapping between two visual domains. However, most of them are guided by sampled noise. Others encode the reference image into a latent vector, which washes away the semantic information of the reference image. In this work, we aim to provide a solution to control the output semantically based on references. Given a reference image and an input in another domain, semantic matching is first performed between the two visual contents to generate an auxiliary image, which is explicitly encouraged to preserve the semantic characteristics of the reference. A deep network is then used for I2I translation, and the final outputs are expected to be semantically similar to both the input and the reference. However, no paired data can satisfy that dual similarity in a supervised fashion, so we build a self-supervised framework for training. We improve the quality and diversity of the outputs by employing non-local blocks and a multi-task architecture. We assess the proposed method through extensive qualitative and quantitative evaluations and also present comparisons with several state-of-the-art models.

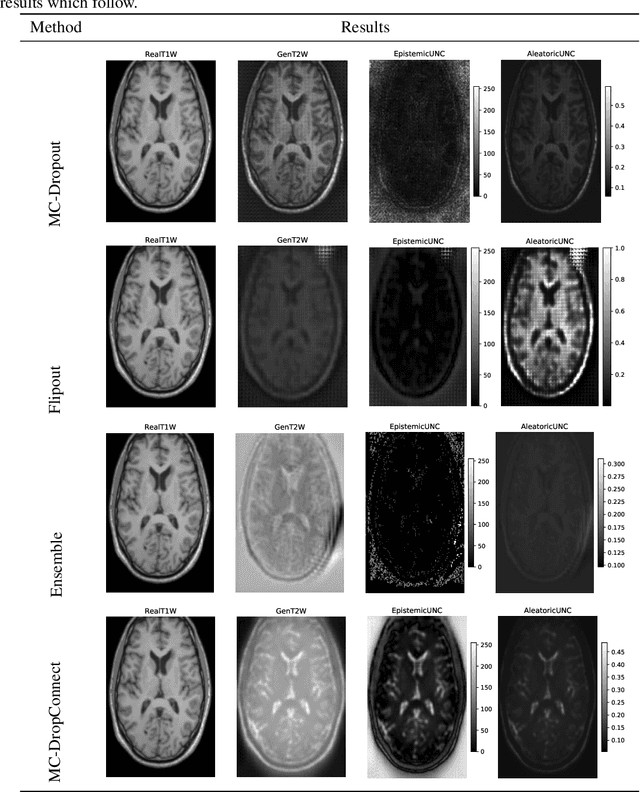

Disentangled Uncertainty and Out of Distribution Detection in Medical Generative Models

Nov 11, 2022

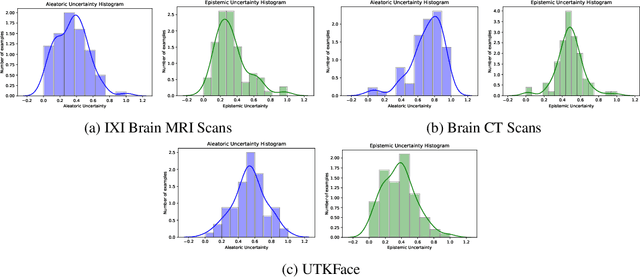

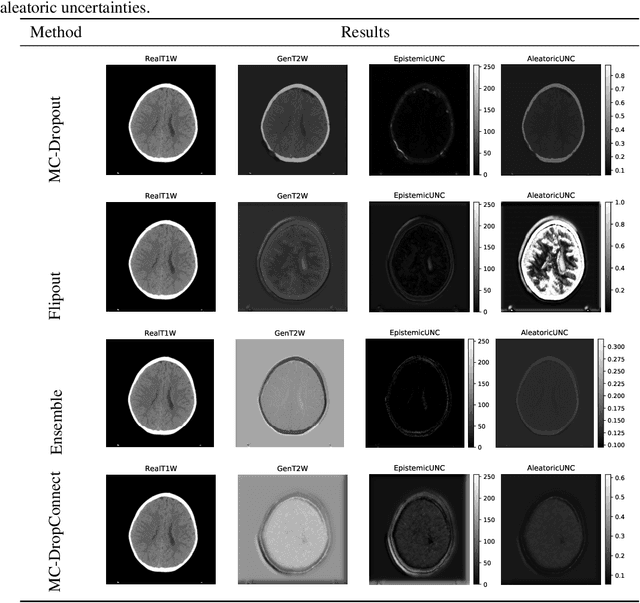

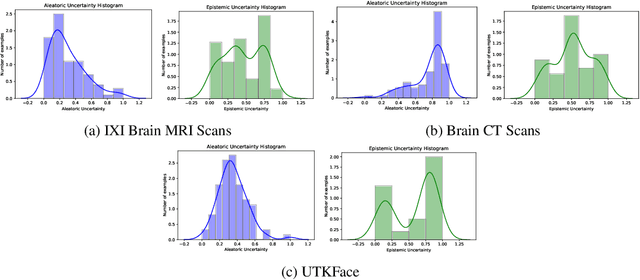

Trusting the predictions of deep learning models in safety-critical settings such as the medical domain is still not a viable option. Disentangled uncertainty quantification in the field of medical imaging has received little attention. In this paper, we study disentangled uncertainties in image-to-image translation tasks in the medical domain. We compare multiple uncertainty quantification methods, namely Ensembles, Flipout, Dropout, and DropConnect, while using CycleGAN to convert T1-weighted brain MRI scans to T2-weighted brain MRI scans. We further evaluate uncertainty behavior in the presence of out-of-distribution data (brain CT and RGB face images), showing that epistemic uncertainty can be used to detect out-of-distribution inputs, which should increase the reliability of model outputs.
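One common way to disentangle the two kinds of uncertainty with an ensemble (or with repeated stochastic forward passes) is sketched below. It assumes each member outputs a per-pixel mean and a per-pixel variance; the paper's exact formulation may differ, and the function name is hypothetical.

```python
import numpy as np

def disentangle_uncertainty(means, variances):
    """Split predictive uncertainty into aleatoric and epistemic parts.

    means, variances: arrays of shape (num_members, ...) holding each
    ensemble member's predicted mean and predicted variance per pixel.
    """
    means = np.asarray(means, dtype=float)
    variances = np.asarray(variances, dtype=float)
    # Aleatoric: average of the noise levels the members predict.
    aleatoric = variances.mean(axis=0)
    # Epistemic: disagreement between the members' mean predictions.
    epistemic = means.var(axis=0)
    return aleatoric, epistemic

# Two members, two pixels: the members disagree only on the first pixel.
member_means = [[1.0, 2.0], [3.0, 2.0]]
member_vars = [[0.1, 0.2], [0.3, 0.2]]
aleatoric, epistemic = disentangle_uncertainty(member_means, member_vars)
print(aleatoric, epistemic)
```

Under this decomposition, an out-of-distribution input would be expected to raise the epistemic term, because members trained on the same data tend to disagree most on inputs unlike anything they have seen.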
