The Text-Based Adventure AI Competition

Oct 19, 2018

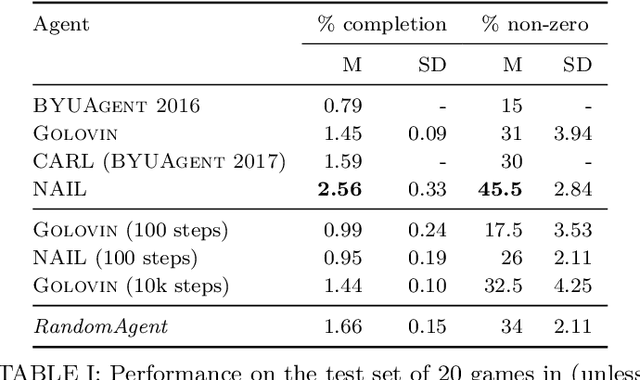

In 2016, 2017, and 2018 at the IEEE Conference on Computational Intelligence in Games, the authors of this paper ran a competition for agents that can play classic text-based adventure games. This competition fills a gap in existing game AI competitions, which have typically focussed on traditional card/board games or modern video games with graphical interfaces. By providing a platform for evaluating agents in text-based adventures, the competition offers a novel benchmark for game AI with unique challenges for natural language understanding and generation. This paper summarises the three competitions run in 2016, 2017, and 2018 (including details of open source implementations of both the competition framework and our competitors) and presents the results of an improved evaluation of these competitors across 20 games.
