Efficient Adaptive Regret Minimization

Paper and Code

Jul 13, 2022

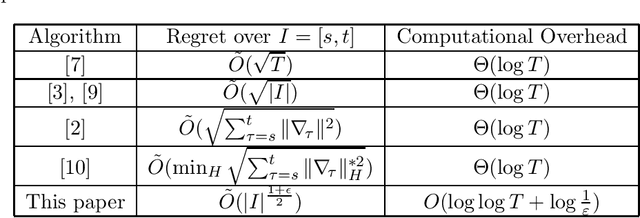

In online convex optimization, the player aims to minimize her regret against a fixed comparator over the entire repeated game. Algorithms that minimize standard regret may converge to a fixed decision, which is undesirable in changing or dynamic environments. This motivates the stronger metric of adaptive regret, defined as the maximum regret over any contiguous sub-interval of time. Existing adaptive regret algorithms suffer from a computational penalty, typically a multiplicative factor that grows logarithmically in the number of game iterations. In this paper, we show how to reduce this computational penalty to be doubly logarithmic in the number of game iterations, with minimal degradation to the optimal attainable adaptive regret bounds.
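To make the difference between the two metrics concrete, here is a minimal Python sketch that computes standard and adaptive regret by brute force. It is not the paper's algorithm, only an illustration of the quantities being bounded. It assumes a simplified setting in which the comparator set is a finite list of experts rather than a convex set, and all names (`interval_regret`, `standard_regret`, `adaptive_regret`, the example loss sequences) are hypothetical.

```python
from typing import Sequence


def interval_regret(player_losses: Sequence[int],
                    expert_losses: Sequence[Sequence[int]],
                    s: int, t: int) -> int:
    """Regret on rounds s..t (0-indexed, inclusive) against the best
    single expert for that interval."""
    player_total = sum(player_losses[s:t + 1])
    best_expert_total = min(sum(row[s:t + 1]) for row in expert_losses)
    return player_total - best_expert_total


def standard_regret(player_losses: Sequence[int],
                    expert_losses: Sequence[Sequence[int]]) -> int:
    """Regret over the whole game against the best fixed expert."""
    return interval_regret(player_losses, expert_losses,
                           0, len(player_losses) - 1)


def adaptive_regret(player_losses: Sequence[int],
                    expert_losses: Sequence[Sequence[int]]) -> int:
    """Maximum interval regret over every contiguous sub-interval.
    Brute force over all O(T^2) intervals; for illustration only."""
    T = len(player_losses)
    return max(interval_regret(player_losses, expert_losses, s, t)
               for s in range(T) for t in range(s, T))


# Hypothetical environment that changes halfway through the game:
# expert A is perfect for the first four rounds, expert B for the last four.
expert_a = [0, 0, 0, 0, 1, 1, 1, 1]
expert_b = [1, 1, 1, 1, 0, 0, 0, 0]
experts = [expert_a, expert_b]

# A player that locks onto expert A and never switches.
player = list(expert_a)

print(standard_regret(player, experts))  # 0
print(adaptive_regret(player, experts))  # 4
```

In this toy game the player that never leaves expert A has zero standard regret, because no single expert does better over all eight rounds, yet its adaptive regret is 4, incurred on the last four rounds where expert B would have suffered no loss. This is the failure mode in changing environments that motivates the adaptive metric.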
