Code-switched Language Models Using Dual RNNs and Same-Source Pretraining

Paper and Code

Sep 06, 2018

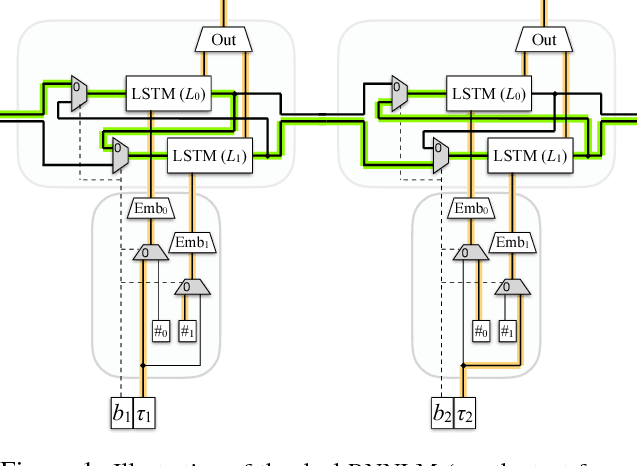

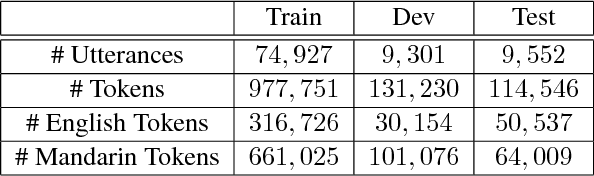

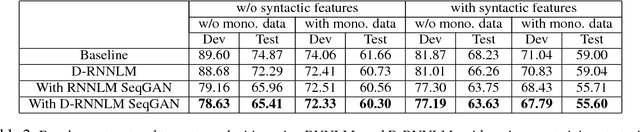

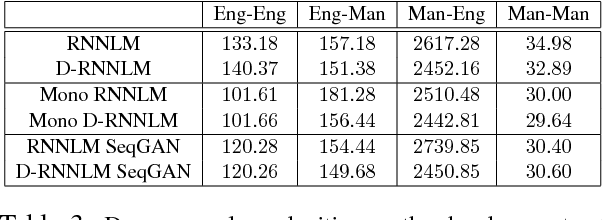

This work focuses on building language models (LMs) for code-switched text. We propose two techniques that significantly improve these LMs: 1) A novel recurrent neural network unit with dual components that focus on each language in the code-switched text separately 2) Pretraining the LM using synthetic text from a generative model estimated using the training data. We demonstrate the effectiveness of our proposed techniques by reporting perplexities on a Mandarin-English task and derive significant reductions in perplexity.

* Accepted at EMNLP 2018

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge