Breaking Batch Normalization for better explainability of Deep Neural Networks through Layer-wise Relevance Propagation


Feb 24, 2020

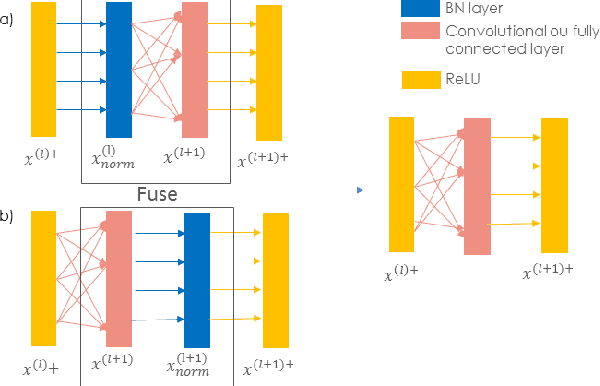

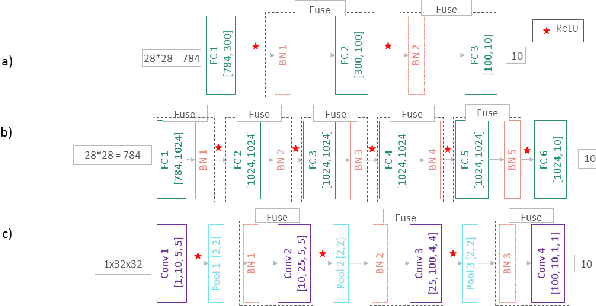

The lack of transparency of neural networks remains a major obstacle to their use. The Layer-wise Relevance Propagation technique builds heatmaps representing the relevance of each input to the model's decision. The relevance propagates backward from the last layer to the first layer of the Deep Neural Network. Layer-wise Relevance Propagation does not handle normalization layers; in this work we suggest a method to include them. Specifically, we build an equivalent network by fusing normalization layers with convolutional or fully connected layers. Heatmaps obtained with our method on the MNIST and CIFAR-10 datasets are more accurate for convolutional layers. Our study also cautions against using Layer-wise Relevance Propagation with networks that combine fully connected layers and normalization layers.
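The fusion step mentioned in the abstract can be illustrated with a minimal sketch. It assumes the common case where an inference-time batch normalization layer (fixed running mean and variance) directly follows a fully connected layer; the abstract does not specify the layer ordering or the exact formulas used by the authors, so this is the standard folding identity rather than their implementation. The function names `fuse_linear_batchnorm`, `linear`, and `batchnorm` are hypothetical and chosen for illustration only.

```python
import math


def linear(weights, bias, x):
    """Fully connected layer: one weight row and one bias per output unit."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)]


def batchnorm(y, gamma, beta, mean, var, eps=1e-5):
    """Inference-time batch normalization with fixed running statistics."""
    return [g * (yi - mu) / math.sqrt(v + eps) + bt
            for yi, g, bt, mu, v in zip(y, gamma, beta, mean, var)]


def fuse_linear_batchnorm(weights, bias, gamma, beta, mean, var, eps=1e-5):
    """Fold batch normalization into the preceding linear layer.

    Assumption (not taken from the paper): the normalization follows the
    linear layer, so BN(Wx + b) = (s * W) x + (s * (b - mean) + beta)
    with s = gamma / sqrt(var + eps), applied per output unit.
    """
    fused_weights, fused_bias = [], []
    for row, b, g, bt, mu, v in zip(weights, bias, gamma, beta, mean, var):
        scale = g / math.sqrt(v + eps)
        fused_weights.append([scale * w for w in row])
        fused_bias.append(scale * (b - mu) + bt)
    return fused_weights, fused_bias
```

The fused layer is an ordinary fully connected layer, so a relevance propagation rule defined for such layers can be applied to it without a separate rule for normalization. The same per-output-channel scaling applies to convolutional kernels under the same ordering assumption.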
