Yuji Matsumoto

Dependency Parsing with LSTMs: An Empirical Evaluation

Jun 30, 2016

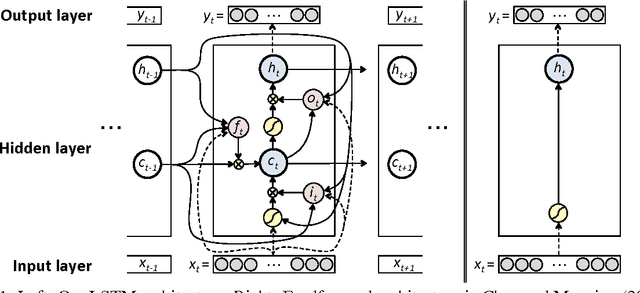

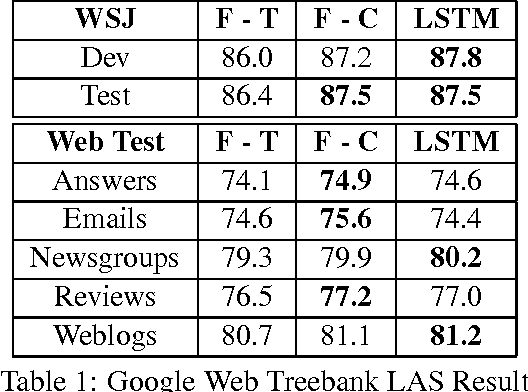

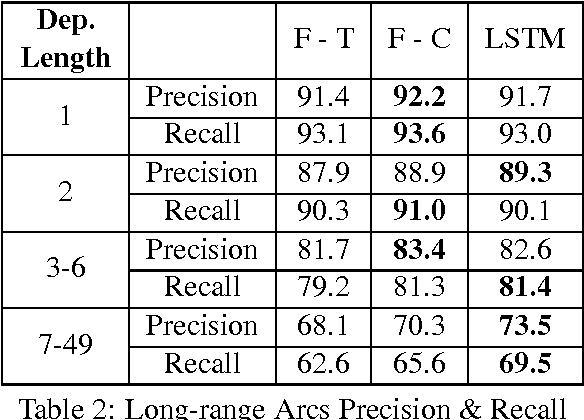

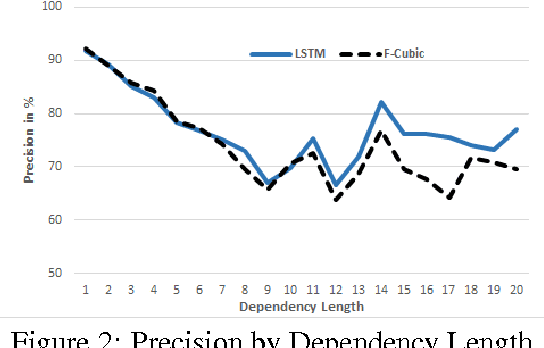

Abstract: We propose a transition-based dependency parser using Recurrent Neural Networks with Long Short-Term Memory (LSTM) units. This extends the feedforward neural network parser of Chen and Manning (2014) and enables modelling of entire sequences of shift/reduce transition decisions. On the Google Web Treebank, our LSTM parser is competitive with the best feedforward parser on overall accuracy and notably achieves more than 3% improvement for long-range dependencies, which have proved difficult for previous transition-based parsers due to error propagation and limited context information. Our findings additionally suggest that dropout regularisation on the embedding layer is crucial to improve the LSTM's generalisation.
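
For readers unfamiliar with shift/reduce parsing, the following is a minimal sketch of an arc-standard transition system, the scheme used by Chen and Manning (2014). It is not the paper's parser: the transition sequence is supplied by hand here, whereas in the paper a neural model predicts each transition. The function name `apply_transitions` and the example sentence are assumptions made for illustration.

```python
def apply_transitions(words, transitions):
    """Apply arc-standard transitions and return each word's head index.

    Words are numbered from 1; index 0 is an artificial ROOT node.
    A real parser would choose each transition with a trained model;
    here the sequence is given explicitly (illustrative assumption).
    """
    stack = [0]                                  # starts with ROOT only
    buffer = list(range(1, len(words) + 1))      # words still to be read
    heads = {}

    for t in transitions:
        if t == "SHIFT":
            if not buffer:
                raise ValueError("SHIFT with empty buffer")
            stack.append(buffer.pop(0))
        elif t == "LEFT-ARC":
            # The second-from-top item becomes a dependent of the top item.
            if len(stack) < 2 or stack[-2] == 0:
                raise ValueError("LEFT-ARC not allowed here")
            dependent = stack.pop(-2)
            heads[dependent] = stack[-1]
        elif t == "RIGHT-ARC":
            # The top item becomes a dependent of the item below it.
            if len(stack) < 2:
                raise ValueError("RIGHT-ARC not allowed here")
            dependent = stack.pop()
            heads[dependent] = stack[-1]
        else:
            raise ValueError(f"unknown transition: {t}")

    return [heads.get(i) for i in range(1, len(words) + 1)]


if __name__ == "__main__":
    sentence = ["She", "eats", "fish"]
    sequence = ["SHIFT", "SHIFT", "LEFT-ARC", "SHIFT", "RIGHT-ARC", "RIGHT-ARC"]
    print(apply_transitions(sentence, sequence))  # heads for She, eats, fish
```

Because each transition changes the stack that later decisions depend on, an early mistake can affect every subsequent step, which is the error-propagation problem the abstract refers to.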

Ridge Regression, Hubness, and Zero-Shot Learning

Jul 03, 2015

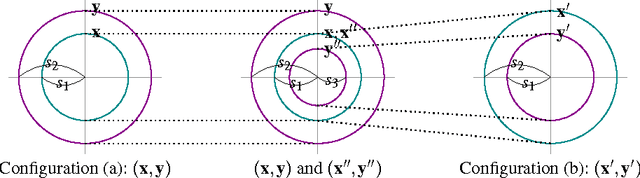

Abstract: This paper discusses the effect of hubness in zero-shot learning, when ridge regression is used to find a mapping between the example space and the label space. Contrary to the existing approach, which attempts to find a mapping from the example space to the label space, we show that mapping labels into the example space is desirable to suppress the emergence of hubs in the subsequent nearest neighbor search step. Assuming a simple data model, we prove that the proposed approach indeed reduces hubness. This was verified empirically on the tasks of bilingual lexicon extraction and image labeling: hubness was reduced in both tasks and accuracy improved accordingly.
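
The two mapping directions contrasted in this abstract can be sketched on synthetic data as follows. This is an illustrative setup, not the paper's experiment: the Gaussian data model, the dimensions, the regularisation strength `lam`, and the function names are all assumptions. Hubness is measured here as the skewness of the N_k distribution (how often each label appears in the k-nearest-neighbor lists of the queries), a common hubness measure; larger positive values indicate stronger hubs.

```python
import numpy as np


def ridge_fit(A, B, lam=1.0):
    """Closed-form ridge regression: W minimising ||A W - B||^2 + lam ||W||^2."""
    d = A.shape[1]
    return np.linalg.solve(A.T @ A + lam * np.eye(d), A.T @ B)


def nk_skewness(queries, candidates, k=1):
    """Skewness of N_k: counts of how often each candidate is a k-NN of a query."""
    d2 = ((queries[:, None, :] - candidates[None, :, :]) ** 2).sum(-1)
    knn = np.argsort(d2, axis=1)[:, :k]
    counts = np.bincount(knn.ravel(), minlength=len(candidates)).astype(float)
    std = counts.std()
    if std == 0:
        return 0.0
    return float(((counts - counts.mean()) ** 3).mean() / std ** 3)


def compare_directions(n_labels=200, n_per_label=5, dim_x=30, dim_y=20,
                       lam=1.0, seed=0):
    """Return (skewness when mapping examples to label space,
               skewness when mapping labels to example space)."""
    rng = np.random.default_rng(seed)
    Y = rng.standard_normal((n_labels, dim_y))        # label vectors (synthetic)
    M = rng.standard_normal((dim_y, dim_x))           # hypothetical generative map
    labels = np.repeat(np.arange(n_labels), n_per_label)
    X = Y[labels] @ M + rng.standard_normal((len(labels), dim_x))

    # Zero-shot split: labels in the second half are unseen during training.
    train = labels < n_labels // 2
    test = ~train
    Y_test = Y[n_labels // 2:]

    # Existing direction: project examples into the label space.
    W_fwd = ridge_fit(X[train], Y[labels[train]], lam)
    skew_fwd = nk_skewness(X[test] @ W_fwd, Y_test)

    # Proposed direction: project labels into the example space.
    W_rev = ridge_fit(Y[labels[train]], X[train], lam)
    skew_rev = nk_skewness(X[test], Y_test @ W_rev)
    return skew_fwd, skew_rev


if __name__ == "__main__":
    fwd, rev = compare_directions()
    print(f"N_1 skewness, examples -> label space: {fwd:.3f}")
    print(f"N_1 skewness, labels -> example space: {rev:.3f}")
```

The outcome on this toy data depends on the seed and the assumed data model, so it should not be read as a reproduction of the paper's results.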

Japanese-Spanish Thesaurus Construction Using English as a Pivot

Mar 06, 2013

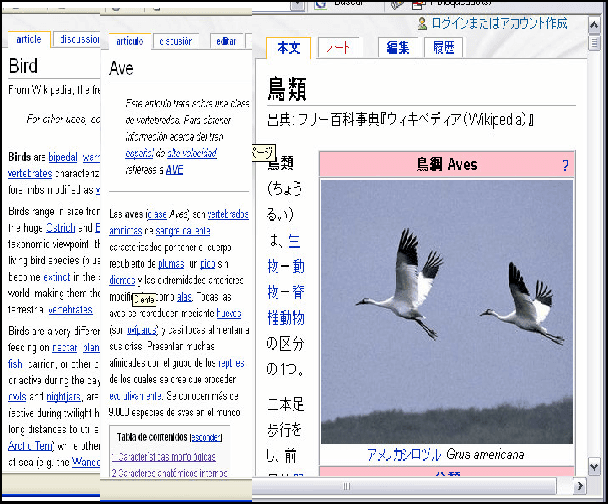

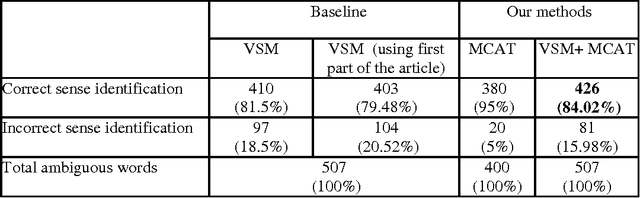

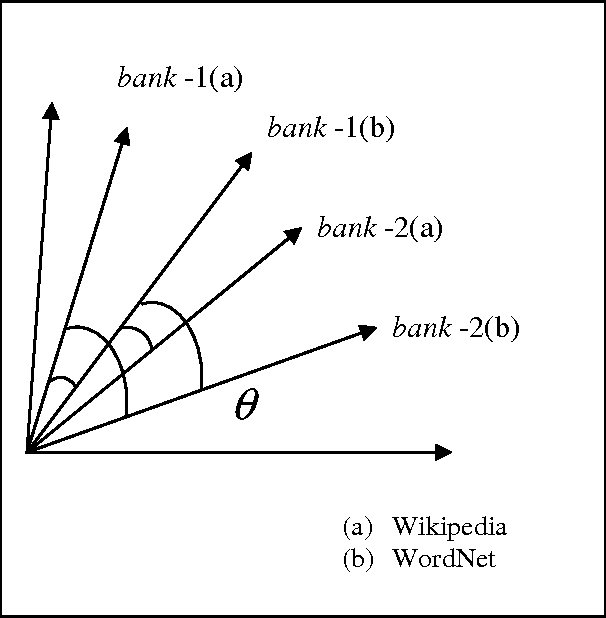

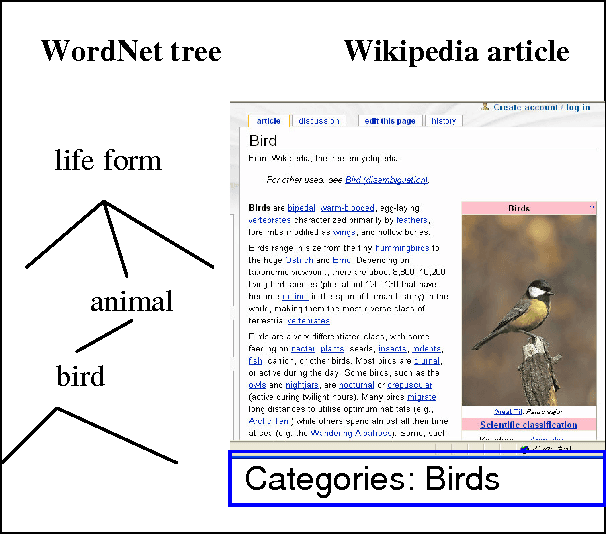

Abstract: We present the results of research with the goal of automatically creating a multilingual thesaurus based on the freely available resources of Wikipedia and WordNet. Our goal is to increase resources for natural language processing tasks such as machine translation targeting the Japanese-Spanish language pair. Given the scarcity of resources, we use existing English resources as a pivot for creating a trilingual Japanese-Spanish-English thesaurus. Our approach consists of extracting the translation tuples from Wikipedia and disambiguating them by mapping them to WordNet word senses. We present results comparing two methods of disambiguation, the first using VSM on Wikipedia article texts and WordNet definitions, and the second using categorical information extracted from Wikipedia. We find that mixing the two methods produces favorable results. Using the proposed method, we have constructed a multilingual Spanish-Japanese-English thesaurus consisting of 25,375 entries. The same method can be applied to any pair of languages that are linked to English in Wikipedia.
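
The first disambiguation method (a vector space model over article text and sense definitions) can be illustrated with a bag-of-words cosine similarity. This is only a sketch of the general idea: the abstract does not specify the term weighting or preprocessing, so raw term counts and whitespace tokenisation are assumptions, and the sense identifiers and glosses below are invented toy strings rather than actual WordNet entries.

```python
import math
from collections import Counter


def cosine(a, b):
    """Cosine similarity between two term-count vectors (Counters)."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def pick_sense(article_text, sense_glosses):
    """Return the sense whose gloss is most similar to the article text."""
    article_vec = Counter(article_text.lower().split())
    return max(
        sense_glosses,
        key=lambda s: cosine(article_vec, Counter(sense_glosses[s].lower().split())),
    )


if __name__ == "__main__":
    # Toy inputs, made up for illustration only.
    article = "a bank is a financial institution that accepts deposits of money"
    glosses = {
        "bank.finance": "financial institution that accepts deposits",
        "bank.river": "sloping land beside a river",
    }
    print(pick_sense(article, glosses))
```

In practice, a weighting scheme such as TF-IDF and stop-word removal would likely matter, since common function words can otherwise dominate the similarity score.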

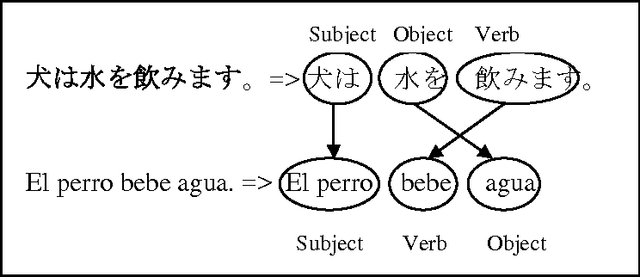

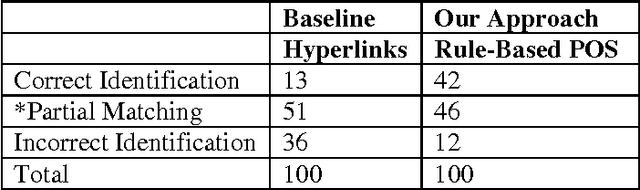

A Rule-Based Approach For Aligning Japanese-Spanish Sentences From A Comparable Corpora

Nov 19, 2012

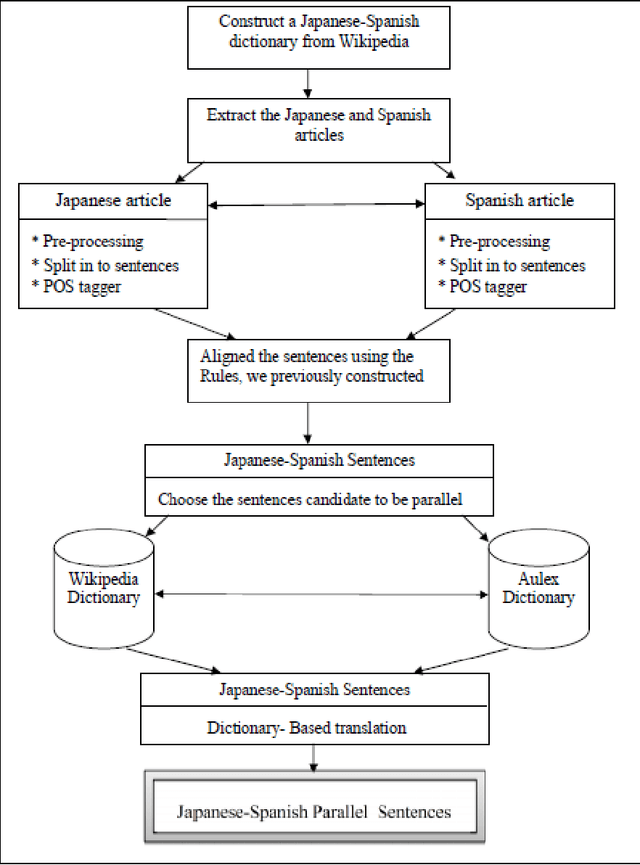

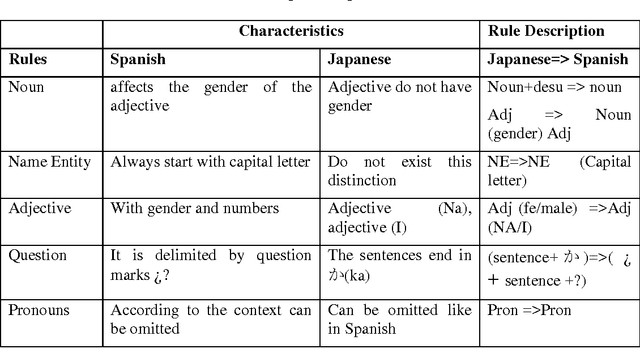

Abstract: The performance of a Statistical Machine Translation (SMT) system is directly proportional to the quality and length of the parallel corpus it uses. However, for some language pairs such corpora are severely lacking. Our long-term goal is to construct a Japanese-Spanish parallel corpus for SMT, because useful Japanese-Spanish parallel corpora are scarce. To address this problem, we propose a method for extracting Japanese-Spanish parallel sentences from Wikipedia using POS tagging and a rule-based approach. The main focus of this approach is the syntactic features of both languages. Human evaluation was performed over a sample and shows promising results in comparison with the baseline.
