Marilyn A. Walker

AT&T Labs - Research

The Effect of Resource Limits and Task Complexity on Collaborative Planning in Dialogue

Nov 15, 1995

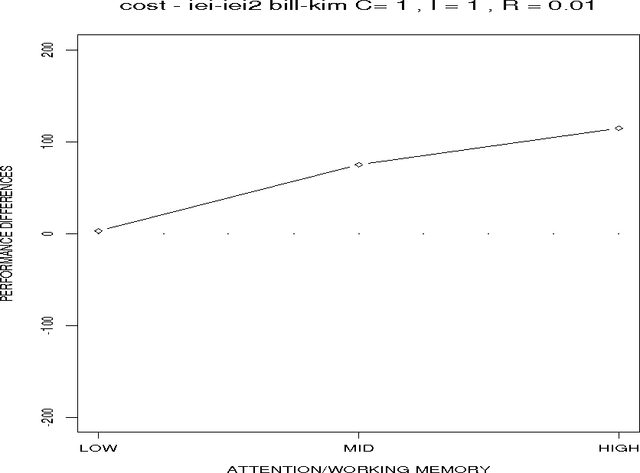

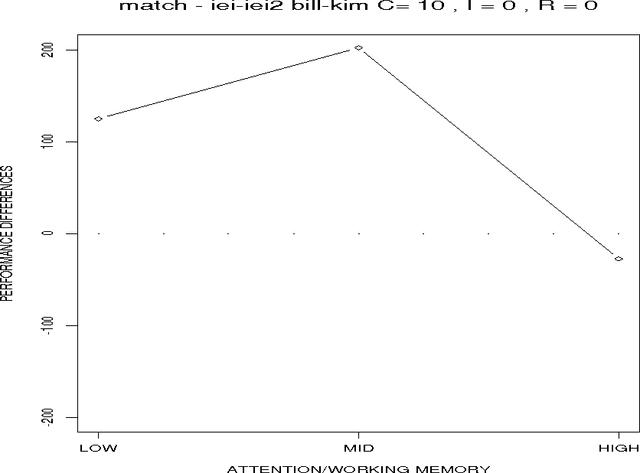

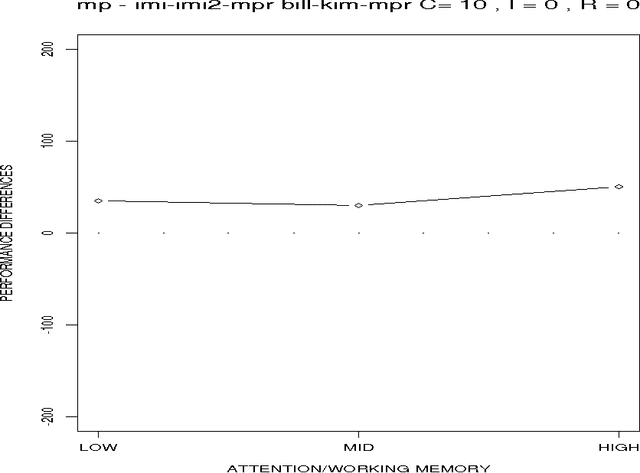

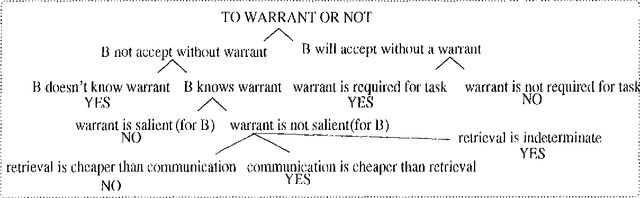

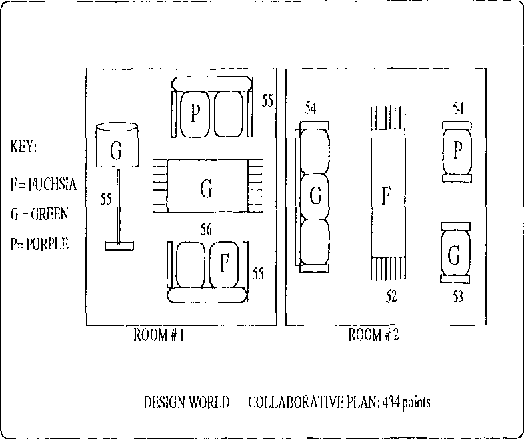

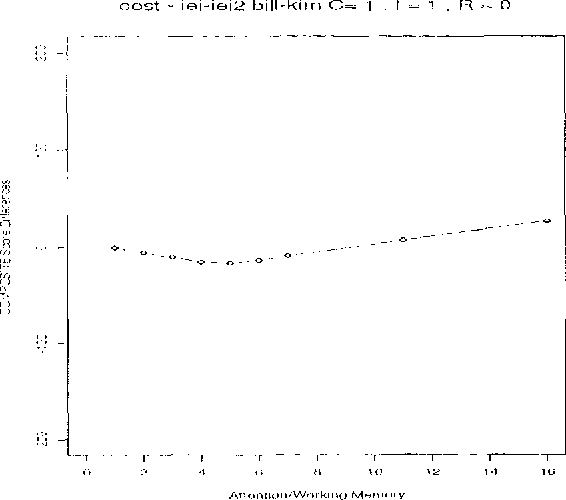

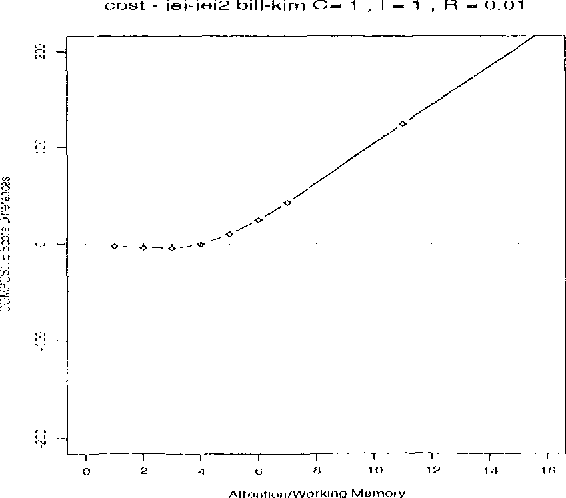

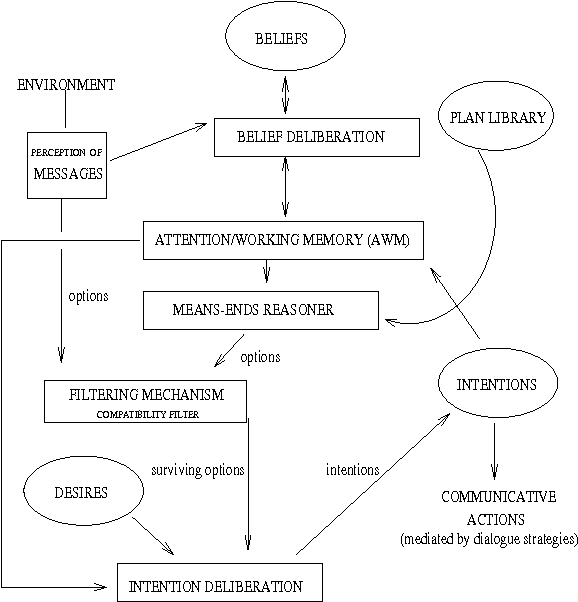

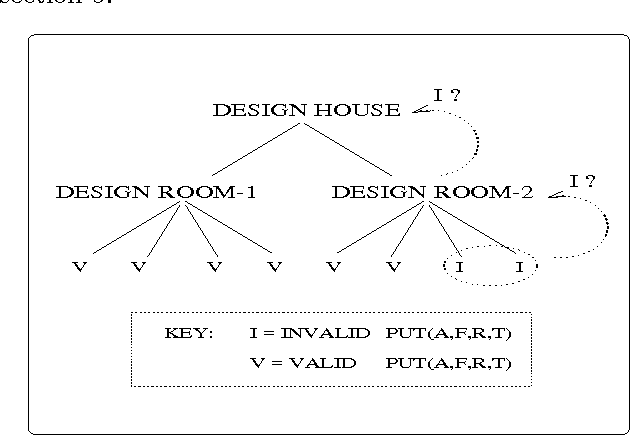

Abstract: This paper shows how agents' choice in communicative action can be designed to mitigate the effect of their resource limits in the context of particular features of a collaborative planning task. I first motivate a number of hypotheses about effective language behavior based on a statistical analysis of a corpus of natural collaborative planning dialogues. These hypotheses are then tested in a dialogue testbed whose design is motivated by the corpus analysis. Experiments in the testbed examine the interaction between (1) agents' resource limits in attentional capacity and inferential capacity; (2) agents' choice in communication; and (3) features of communicative tasks that affect task difficulty, such as inferential complexity, the degree of belief coordination required, and tolerance for errors. The results show that good algorithms for communication must be defined relative to the agents' resource limits and the features of the task. Algorithms that are inefficient for inferentially simple, low-coordination, or fault-tolerant tasks are effective when tasks require coordination or complex inferences, or are fault-intolerant. The results provide an explanation for the occurrence of utterances in human dialogues that, prima facie, appear inefficient, and provide the basis for the design of effective algorithms for communicative choice for resource-limited agents.

* 64 pages, uses psfig, lingmacros, named

Discourse and Deliberation: Testing a Collaborative Strategy

Mar 16, 1995

Abstract: A discourse strategy is a strategy for communicating with another agent. Designing effective dialogue systems requires designing agents that can choose among discourse strategies. We claim that the design of effective strategies must take cognitive factors into account, propose a new method for testing the hypothesized factors, and present experimental results on an effective strategy for supporting deliberation. The proposed method of computational dialogue simulation provides a new empirical basis for computational linguistics.

* 8 pages, psfig.sty, lingmacros.sty, reformatted version of Coling94 paper

Redundancy in Collaborative Dialogue

Mar 16, 1995

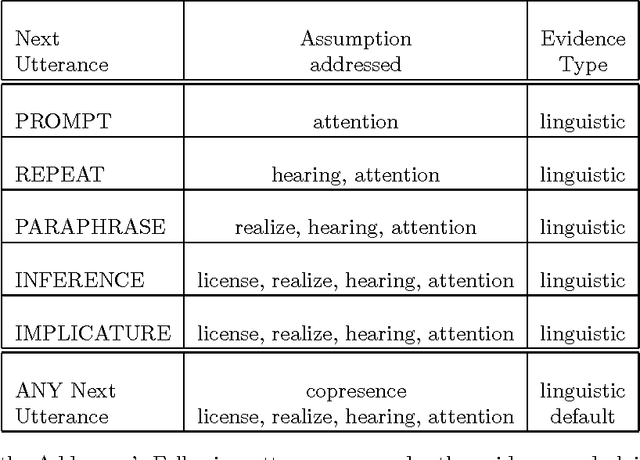

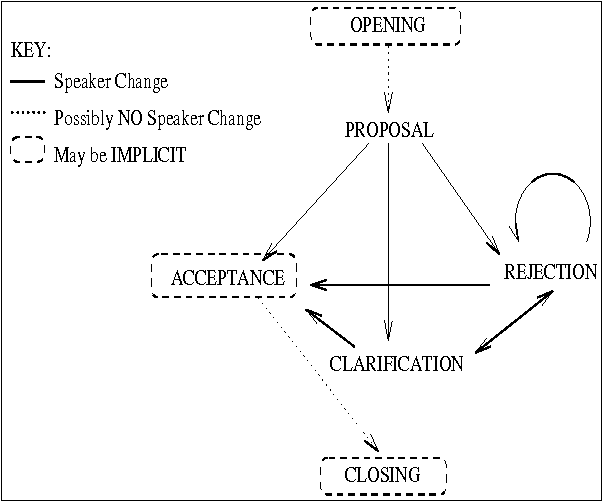

Abstract: In dialogues in which both agents are autonomous, each agent deliberates whether to accept or reject the contributions of the current speaker. A speaker cannot simply assume that a proposal or an assertion will be accepted. However, an examination of a corpus of naturally occurring problem-solving dialogues shows that agents often do not explicitly indicate acceptance or rejection. Rather, the speaker must infer whether the hearer understands and accepts the current contribution based on indirect evidence provided by the hearer's next dialogue contribution. In this paper, I propose a model of the role of informationally redundant utterances in providing evidence to support inferences about mutual understanding and acceptance. The model (1) requires a theory of mutual belief that supports mutual beliefs of various strengths; (2) explains the function of a class of informationally redundant utterances that cannot be explained by other accounts; and (3) contributes to a theory of dialogue by showing how mutual beliefs can be inferred in the absence of the master-slave assumption.

* 8 pages, lingmacros, reformatted version of Coling92 paper

Evaluating Discourse Processing Algorithms

Oct 11, 1994

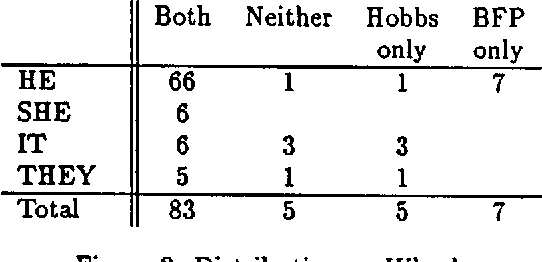

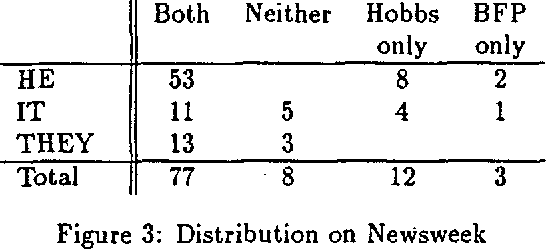

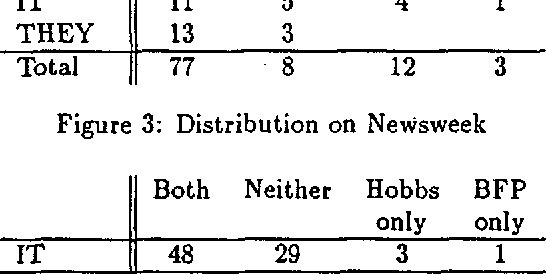

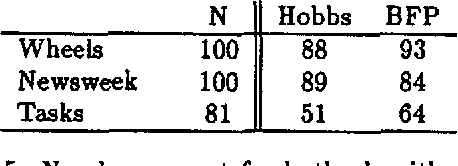

Abstract: As a step towards establishing a methodology for evaluating natural language systems, we conducted a case study. We attempt to evaluate two different approaches to anaphoric processing in discourse by comparing the accuracy and coverage of two published algorithms for finding the co-specifiers of pronouns in naturally occurring texts and dialogues. We present the quantitative results of hand-simulating these algorithms, but this analysis naturally gives rise to both a qualitative evaluation and recommendations for performing such evaluations in general. We illustrate the general difficulties encountered with quantitative evaluation. These are problems with: (a) allowing for underlying assumptions, (b) determining how to handle underspecifications, and (c) evaluating the contribution of false positives and error chaining.

* plain latex but includes psfig.tex, 11 pages with one psfig, published in 27th Annual Meeting of the ACL, 1989

Experimentally Evaluating Communicative Strategies: The Effect of the Task

Aug 24, 1994

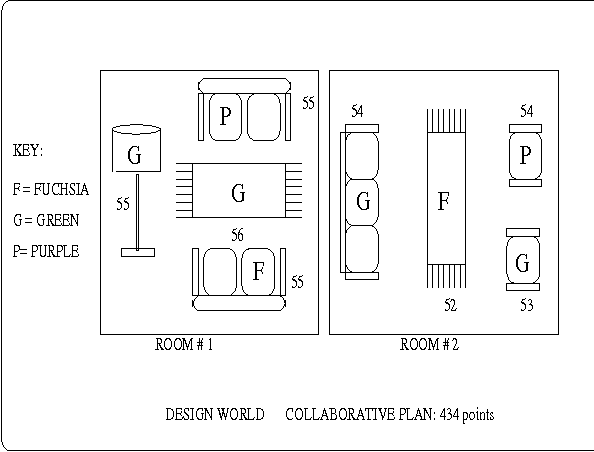

Abstract: Effective problem solving among multiple agents requires a better understanding of the role of communication in collaboration. In this paper we show that there are communicative strategies that greatly improve the performance of resource-bounded agents, but that these strategies are highly sensitive to the task requirements, situation parameters and agents' resource limitations. We base our argument on two sources of evidence: (1) an analysis of a corpus of 55 problem-solving dialogues, and (2) experimental simulations of collaborative problem-solving dialogues in an experimental world, Design-World, where we parameterize task requirements, agents' resources and communicative strategies.

* 8 pages, latex with psfig, lingmacros.sty, available at ftp://atlantic.merl.com/pub/walker/aaai94.ps.Z