Jesse Hoey

SPUDD: Stochastic Planning using Decision Diagrams

Jan 23, 2013

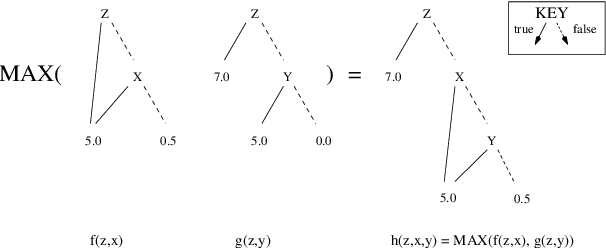

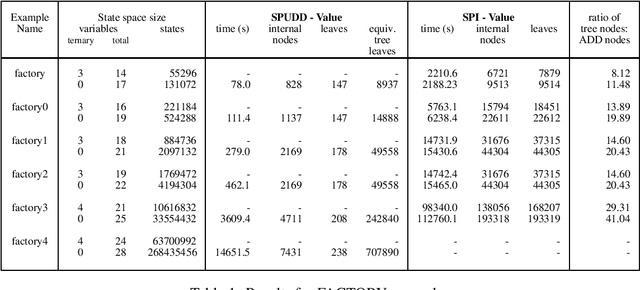

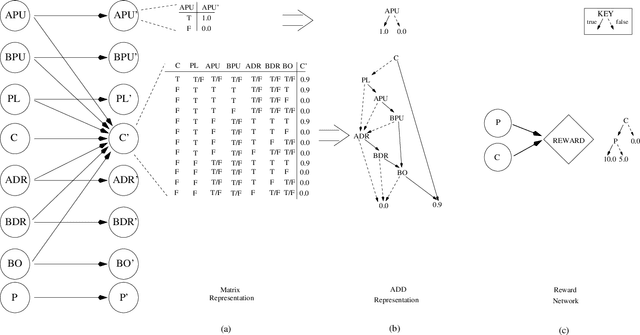

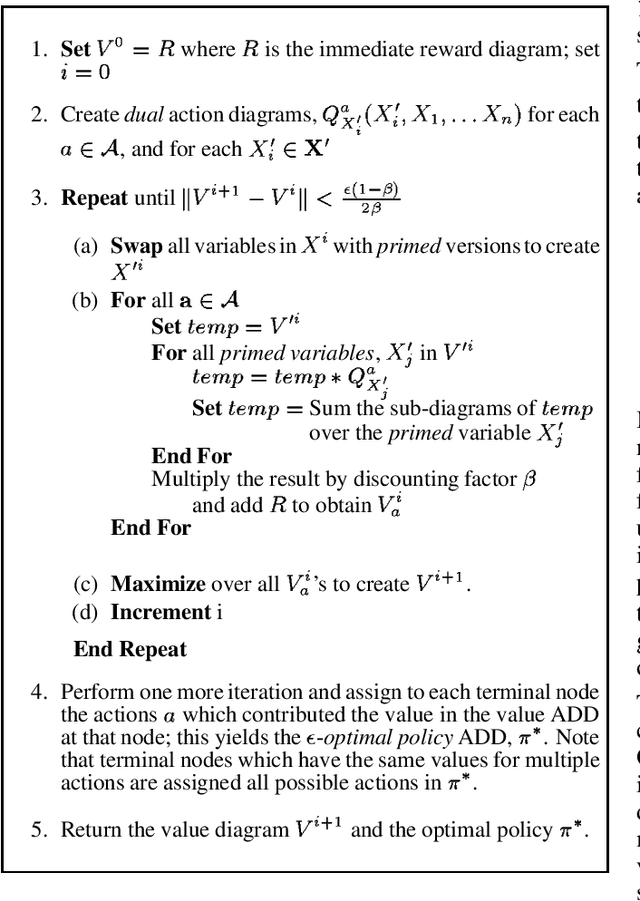

Abstract:Markov decisions processes (MDPs) are becoming increasing popular as models of decision theoretic planning. While traditional dynamic programming methods perform well for problems with small state spaces, structured methods are needed for large problems. We propose and examine a value iteration algorithm for MDPs that uses algebraic decision diagrams(ADDs) to represent value functions and policies. An MDP is represented using Bayesian networks and ADDs and dynamic programming is applied directly to these ADDs. We demonstrate our method on large MDPs (up to 63 million states) and show that significant gains can be had when compared to tree-structured representations (with up to a thirty-fold reduction in the number of nodes required to represent optimal value functions).

Relational Approach to Knowledge Engineering for POMDP-based Assistance Systems as a Translation of a Psychological Model

Jun 25, 2012

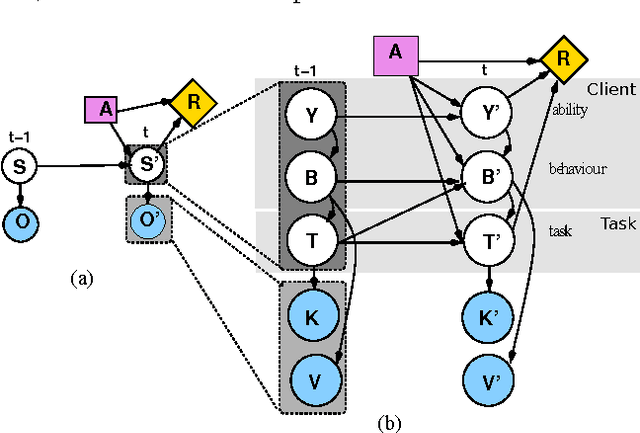

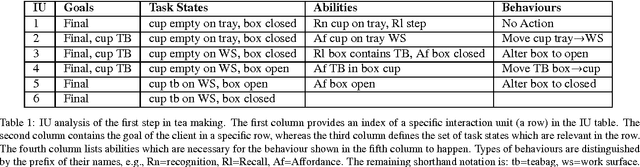

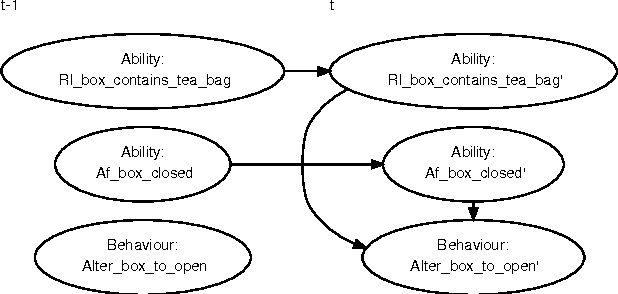

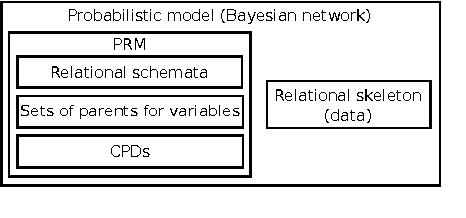

Abstract:Assistive systems for persons with cognitive disabilities (e.g. dementia) are difficult to build due to the wide range of different approaches people can take to accomplishing the same task, and the significant uncertainties that arise from both the unpredictability of client's behaviours and from noise in sensor readings. Partially observable Markov decision process (POMDP) models have been used successfully as the reasoning engine behind such assistive systems for small multi-step tasks such as hand washing. POMDP models are a powerful, yet flexible framework for modelling assistance that can deal with uncertainty and utility. Unfortunately, POMDPs usually require a very labour intensive, manual procedure for their definition and construction. Our previous work has described a knowledge driven method for automatically generating POMDP activity recognition and context sensitive prompting systems for complex tasks. We call the resulting POMDP a SNAP (SyNdetic Assistance Process). The spreadsheet-like result of the analysis does not correspond to the POMDP model directly and the translation to a formal POMDP representation is required. To date, this translation had to be performed manually by a trained POMDP expert. In this paper, we formalise and automate this translation process using a probabilistic relational model (PRM) encoded in a relational database. We demonstrate the method by eliciting three assistance tasks from non-experts. We validate the resulting POMDP models using case-based simulations to show that they are reasonable for the domains. We also show a complete case study of a designer specifying one database, including an evaluation in a real-life experiment with a human actor.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge