Farzad Khalvati

Evolution-based Fine-tuning of CNNs for Prostate Cancer Detection

Nov 04, 2019

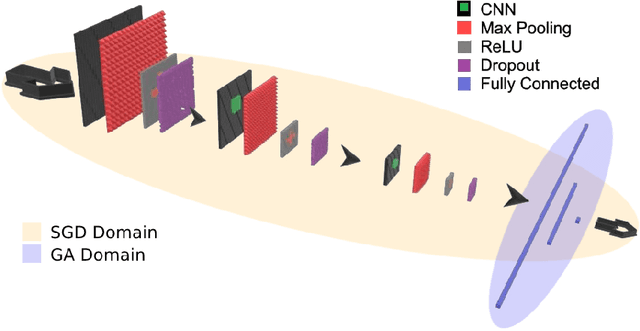

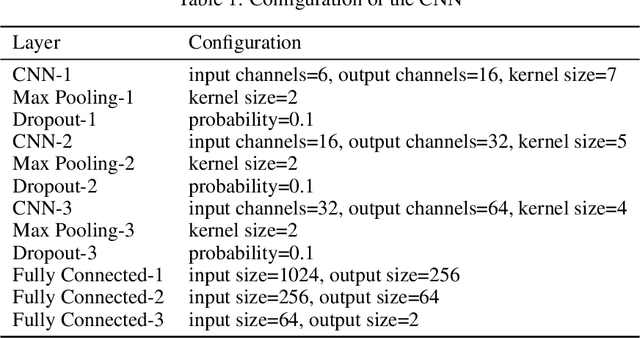

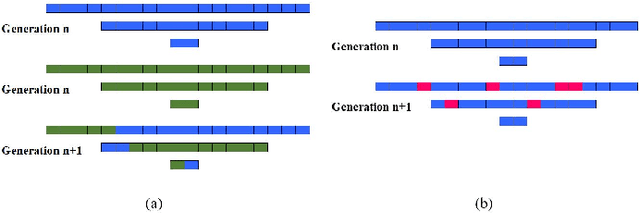

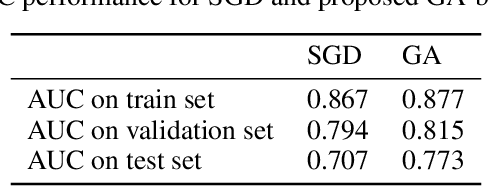

Abstract: Convolutional Neural Networks (CNNs) have been used for automated detection of prostate cancer, where the Area Under the Receiver Operating Characteristic (ROC) Curve (AUC) is usually used as the performance metric. Given that AUC is not differentiable, common practice is to train the CNN using a loss function based on other performance metrics, such as cross entropy, and to monitor AUC to select the best model. In this work, we propose to fine-tune a trained CNN for prostate cancer detection using a Genetic Algorithm to achieve a higher AUC. Our dataset contained 6-channel Diffusion-Weighted MRI slices of the prostate. On a cohort of 2,955 training, 1,417 validation, and 1,334 test slices, we reached a test AUC of 0.773, a 9.3% improvement compared to the base CNN model.
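
The idea of optimizing AUC directly with a genetic algorithm can be illustrated with a minimal sketch. This is not the authors' implementation: the linear scorer standing in for the CNN's trainable weights, the function names (`auc`, `score`, `ga_finetune`), and all hyperparameters are assumptions chosen for illustration. Because AUC only depends on how positives rank against negatives, it can serve as a fitness function even though it has no useful gradient.

```python
import random

def auc(scores, labels):
    """AUC as the fraction of (positive, negative) pairs ranked correctly; ties count 0.5."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative sample")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

def score(weights, features):
    """Hypothetical stand-in for the network: a linear score per sample."""
    return [sum(w * x for w, x in zip(weights, row)) for row in features]

def ga_finetune(base_weights, features, labels,
                generations=30, pop_size=20, sigma=0.1, seed=0):
    """Evolve weights starting from a trained model, using AUC as fitness.

    pop_size should be at least 8 so that two parents survive each generation.
    """
    rng = random.Random(seed)

    def fitness(w):
        return auc(score(w, features), labels)

    # Start from the trained weights plus randomly perturbed copies.
    population = [list(base_weights)] + [
        [w + rng.gauss(0, sigma) for w in base_weights]
        for _ in range(pop_size - 1)
    ]
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        parents = population[: pop_size // 4]  # elitism: keep the top quarter
        children = []
        while len(parents) + len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            child = [rng.choice(pair) for pair in zip(a, b)]   # uniform crossover
            child = [w + rng.gauss(0, sigma) for w in child]   # Gaussian mutation
            children.append(child)
        population = parents + children
    return max(population, key=fitness)
```

Since the trained weights are part of the initial population and the best individuals are always carried over, the returned weights never score a lower AUC than the starting point on the data used for fitness. In practice the fitness would be computed on held-out validation data to limit overfitting.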

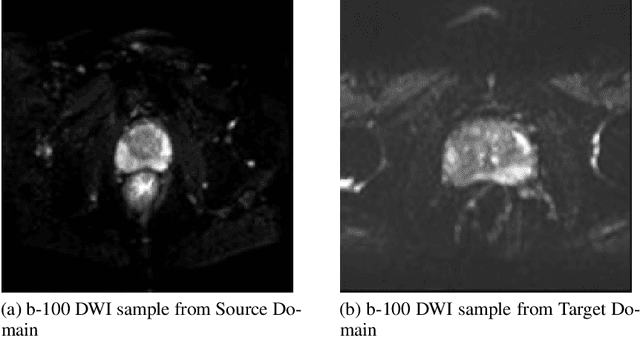

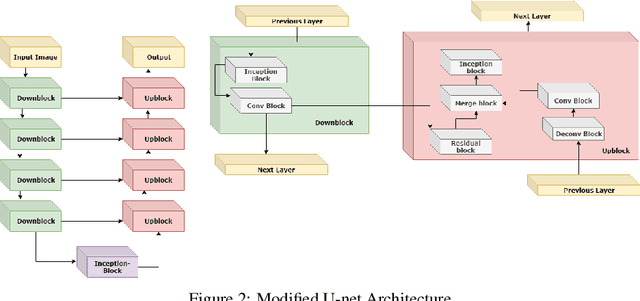

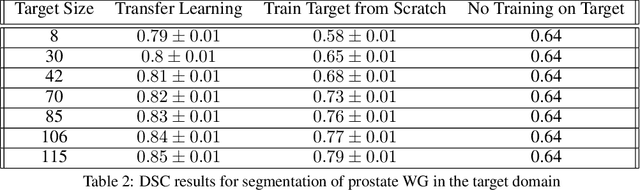

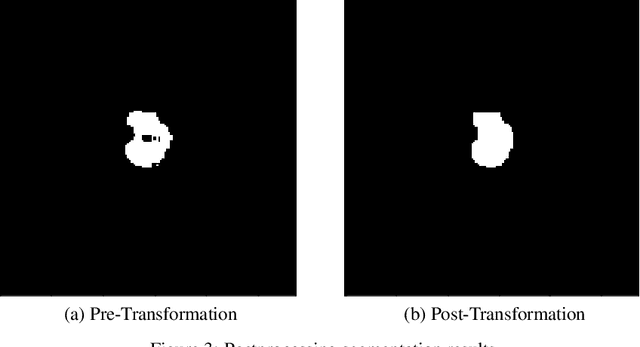

A Transfer Learning Approach for Automated Segmentation of Prostate Whole Gland and Transition Zone in Diffusion Weighted MRI

Sep 20, 2019

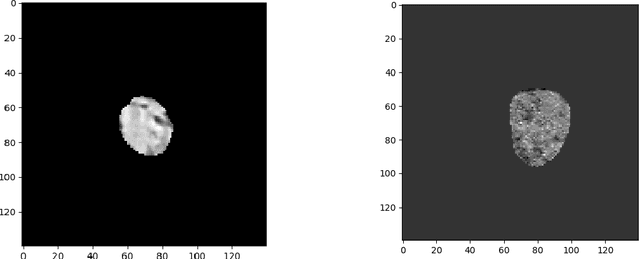

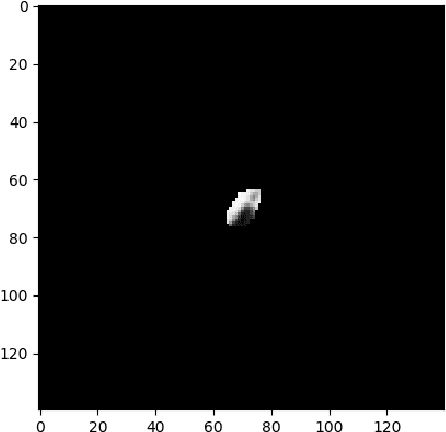

Abstract: The segmentation of the prostate whole gland and transition zone in Diffusion Weighted MRI (DWI) is the first step in designing computer-aided detection algorithms for prostate cancer. However, variations in MRI acquisition parameters and scanner manufacturers result in different appearances of prostate tissue in the images. Convolutional neural networks (CNNs), which have been shown to be successful in various medical image analysis tasks including segmentation, are typically sensitive to variations in imaging parameters. This sensitivity leads to poor segmentation performance when a CNN is trained on a source cohort and tested on a target cohort from a different scanner, and hence limits the applicability of CNNs for cross-cohort training and testing. Contouring the prostate whole gland and transition zone in DWI images is time-consuming and expensive. Thus, it is important to enable CNNs pretrained on images from a source domain to segment images from a target domain with a minimal requirement for manual segmentation of target-domain images. In this work, we propose a transfer learning method, based on a modified U-net architecture and loss function, for segmentation of the prostate whole gland and transition zone in DWIs using a CNN pretrained on a source dataset and tested on the target dataset. We explore how the size of the target-dataset subset used for fine-tuning the pretrained CNN affects the overall segmentation accuracy. Our results show that with fine-tuning data from as few as 30 patients from the target domain, the proposed transfer learning-based algorithm can reach a Dice score coefficient of 0.80 for both prostate whole gland and transition zone segmentation. Using fine-tuning data from 115 patients from the target domain, Dice score coefficients of 0.85 and 0.84 are achieved for segmentation of the whole gland and transition zone, respectively, in the target domain.
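
For readers unfamiliar with the metric reported above, the Dice score coefficient between a predicted mask and a reference mask is 2|A ∩ B| / (|A| + |B|). The following is a minimal sketch on binary masks stored as nested lists; the function name `dice` and the convention of returning 1.0 when both masks are empty are assumptions, not details taken from the paper.

```python
def dice(pred, truth):
    """Dice coefficient between two binary masks given as nested lists of 0/1."""
    pred_flat = [bool(v) for row in pred for v in row]
    truth_flat = [bool(v) for row in truth for v in row]
    if len(pred_flat) != len(truth_flat):
        raise ValueError("masks must have the same number of pixels")
    intersection = sum(1 for p, t in zip(pred_flat, truth_flat) if p and t)
    total = sum(pred_flat) + sum(truth_flat)
    # Assumed convention: two empty masks agree perfectly.
    return 1.0 if total == 0 else 2.0 * intersection / total

pred = [[1, 1, 0],
        [0, 1, 0]]
truth = [[1, 0, 0],
         [0, 1, 1]]
print(round(dice(pred, truth), 4))  # 2 overlapping pixels, 3 + 3 foreground pixels
```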

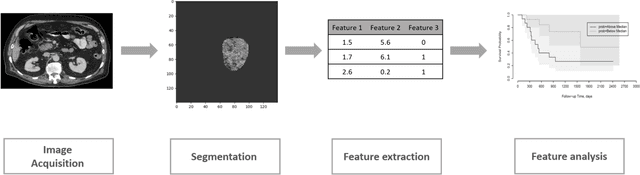

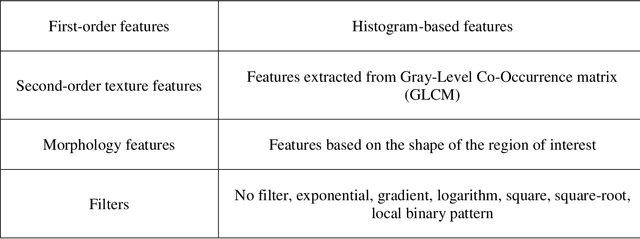

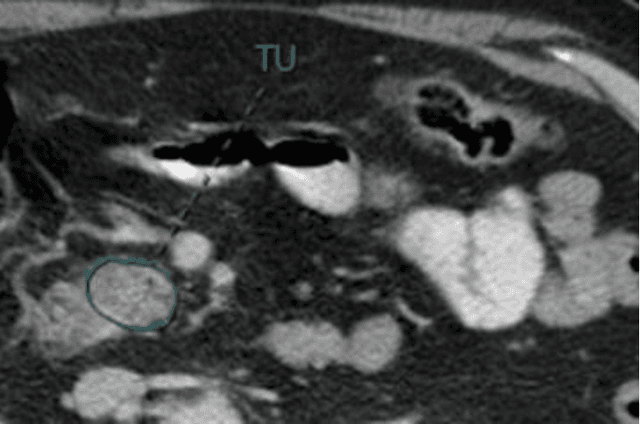

Improving Prognostic Performance in Resectable Pancreatic Ductal Adenocarcinoma using Radiomics and Deep Learning Features Fusion in CT Images

Jul 10, 2019

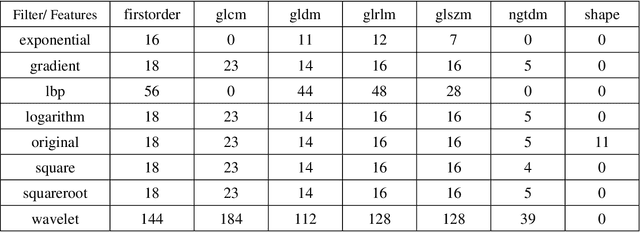

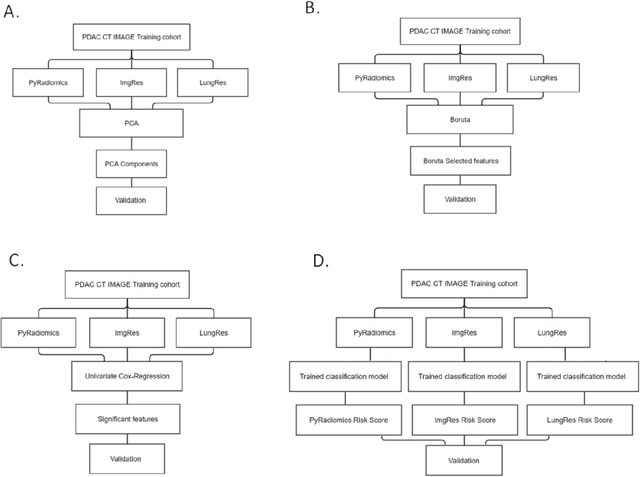

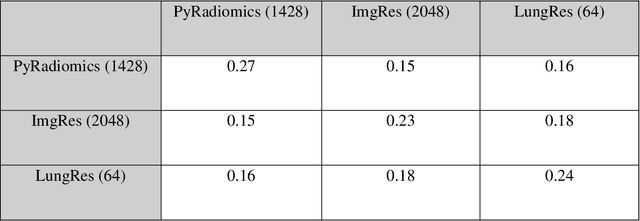

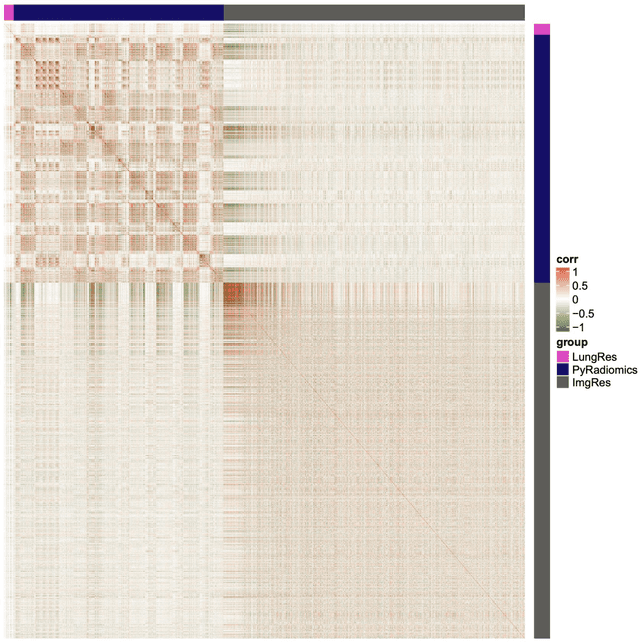

Abstract: As an analytic pipeline for quantitative imaging feature extraction and analysis, radiomics has grown rapidly in the past few years. Recent studies in radiomics aim to investigate the relationship between tumor imaging features and clinical outcomes. Open source radiomics feature banks enable the extraction and analysis of thousands of predefined features. On the other hand, recent advances in deep learning have shown significant potential in the quantitative medical imaging field, raising the research question of whether predefined radiomics features carry predictive information beyond that of deep learning features. In this study, we propose a feature fusion method and investigate whether a combined feature bank of deep learning and predefined radiomics features can improve prognostic performance. CT images from resectable Pancreatic Ductal Adenocarcinoma (PDAC) patients were used to compare the prognostic performance of common feature reduction and fusion methods with that of the proposed risk-score based feature fusion method for overall survival. The proposed feature fusion method significantly improved prognostic performance for overall survival in resectable PDAC cohorts, elevating the area under the ROC curve by 51% compared to predefined radiomics features alone, by 16% compared to deep learning features alone, and by 32% compared to existing feature fusion and reduction methods applied to a combination of deep learning and predefined radiomics features.

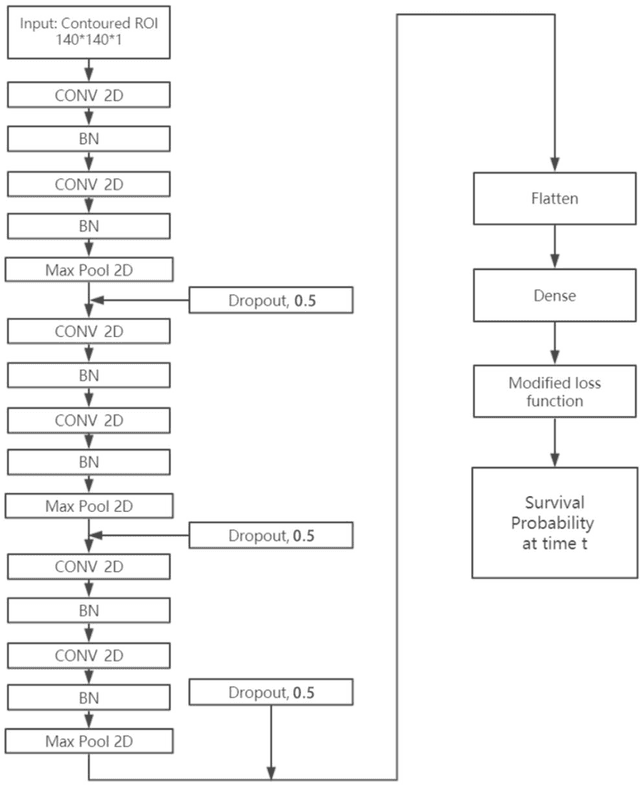

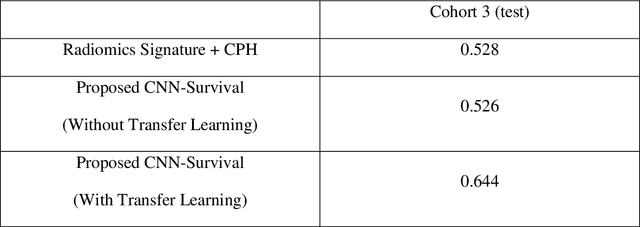

CNN-based Survival Model for Pancreatic Ductal Adenocarcinoma in Medical Imaging

Jun 25, 2019

Abstract: The Cox proportional hazards model (CPH) is commonly used in clinical research for survival analysis. In quantitative medical imaging (radiomics) studies, CPH plays an important role in feature reduction and modeling. However, the underlying linear assumption of the CPH model limits its prognostic performance. In addition, the multicollinearity of radiomic features and the multiple testing problem further impede the CPH model's performance. In this work, using transfer learning, a convolutional neural network (CNN) based survival model was built and tested on preoperative CT images of resectable Pancreatic Ductal Adenocarcinoma (PDAC) patients. The proposed CNN-based survival model outperformed the traditional CPH-based radiomics approach in terms of concordance index by 22%, providing a better fit for patients' survival patterns based on CT images and overcoming the limitations of conventional survival models.
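
The concordance index used above to compare survival models measures how often a model assigns a higher risk to the patient who experiences the event earlier. Below is a minimal sketch of Harrell's version with right-censoring; the function name and argument layout are assumptions for illustration and do not come from the paper.

```python
def concordance_index(times, events, risks):
    """Harrell's C-index.

    times  : observed follow-up time per patient
    events : 1 if the event (e.g. death) was observed, 0 if censored
    risks  : model-predicted risk score (higher means shorter expected survival)
    """
    concordant = 0.0
    comparable = 0
    n = len(times)
    for i in range(n):
        for j in range(n):
            # A pair is comparable only if patient i had an observed event
            # strictly before patient j's observed time.
            if events[i] and times[i] < times[j]:
                comparable += 1
                if risks[i] > risks[j]:
                    concordant += 1.0
                elif risks[i] == risks[j]:
                    concordant += 0.5  # ties in predicted risk count as half
    if comparable == 0:
        raise ValueError("no comparable pairs")
    return concordant / comparable

times = [5, 10, 15, 20]
events = [1, 1, 0, 1]
risks = [0.9, 0.4, 0.5, 0.1]
print(concordance_index(times, events, risks))  # 4 of 5 comparable pairs are concordant
```

A value of 0.5 corresponds to random ranking and 1.0 to perfect ranking, so a relative gain in concordance index reflects better ordering of patients by survival time.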

Prostate Cancer Detection using Deep Convolutional Neural Networks

May 30, 2019

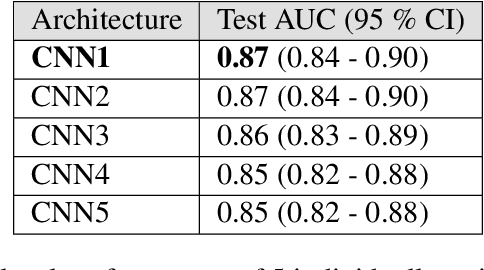

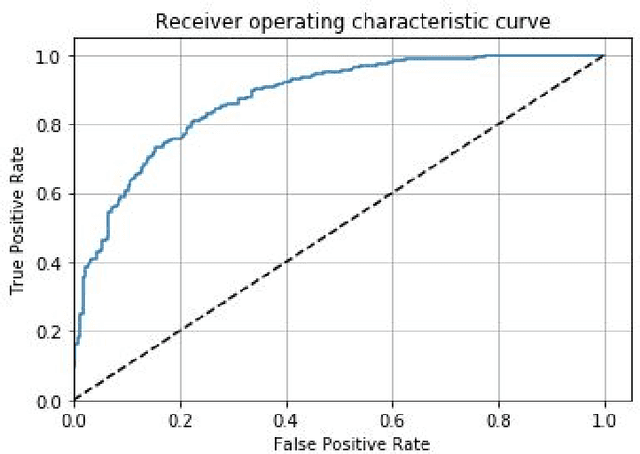

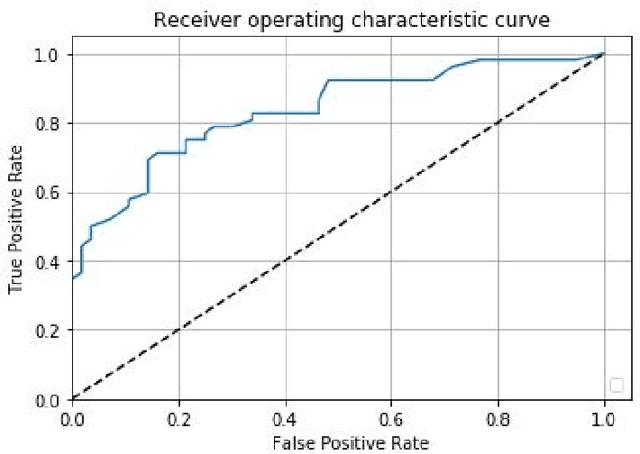

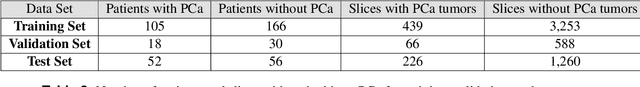

Abstract: Prostate cancer is one of the most common forms of cancer and the third leading cause of cancer death in North America. As an integrated part of computer-aided detection (CAD) tools, diffusion-weighted magnetic resonance imaging (DWI) has been intensively studied for accurate detection of prostate cancer. With the significant success of deep convolutional neural networks (CNNs) in computer vision tasks such as object detection and segmentation, different CNN architectures are increasingly investigated in the medical imaging research community as promising solutions for designing more accurate CAD tools for cancer detection. In this work, we developed and implemented an automated CNN-based pipeline for detection of clinically significant prostate cancer (PCa) for a given axial DWI image and for each patient. DWI images of 427 patients were used as the dataset, which contained 175 patients with PCa and 252 healthy patients. To measure the performance of the proposed pipeline, a test set of 108 (out of 427) patients was set aside and not used in the training phase. The proposed pipeline achieved an area under the receiver operating characteristic curve (AUC) of 0.87 (95% Confidence Interval (CI): 0.84-0.90) and 0.84 (95% CI: 0.76-0.91) at slice level and patient level, respectively.

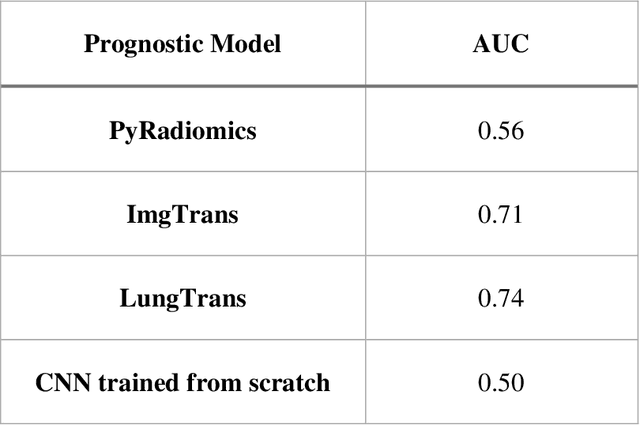

Improving Prognostic Value of CT Deep Radiomic Features in Pancreatic Ductal Adenocarcinoma Using Transfer Learning

May 23, 2019

Abstract: Pancreatic ductal adenocarcinoma (PDAC) is one of the most aggressive cancers, with an extremely poor prognosis. Radiomics has shown prognostic ability in multiple types of cancer, including PDAC. However, the prognostic value of traditional radiomics pipelines, which are based on hand-crafted radiomic features alone, is limited by the multicollinearity of features, the multiple testing problem, and the limited performance of conventional machine learning classifiers. Deep learning architectures, such as convolutional neural networks (CNNs), have been shown to outperform traditional techniques in computer vision tasks, such as object detection. However, they require large sample sizes for training, which limits their development. As an alternative solution, CNN-based transfer learning has shown the potential for achieving reasonable performance using datasets with small sample sizes. In this work, we developed a CNN-based transfer learning approach for prognostication of overall survival in PDAC patients. The results showed that the transfer learning approach outperformed the traditional radiomics model on PDAC data. A transfer learning approach may fill the gap between radiomics and deep learning analytics for cancer prognosis and improve performance beyond what CNNs can achieve using small datasets.

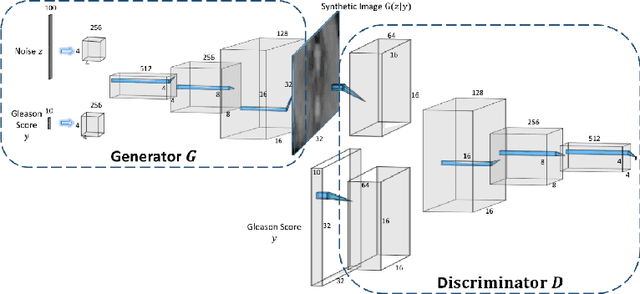

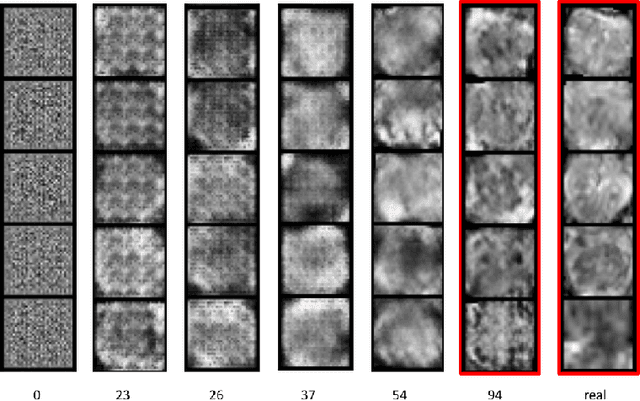

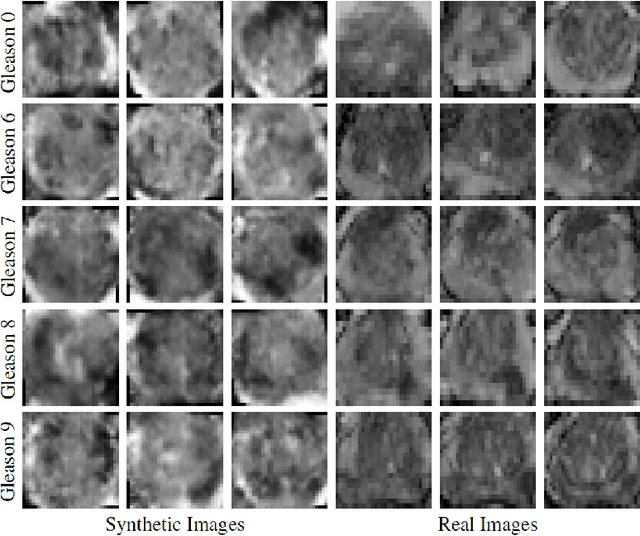

ProstateGAN: Mitigating Data Bias via Prostate Diffusion Imaging Synthesis with Generative Adversarial Networks

Nov 21, 2018

Abstract: Generative Adversarial Networks (GANs) have shown considerable promise for mitigating the challenge of data scarcity when building machine learning-driven analysis algorithms. Specifically, a number of studies have shown that GAN-based image synthesis for data augmentation can aid in improving classification accuracy in a number of medical image analysis tasks, such as brain and liver image analysis. However, the efficacy of leveraging GANs for tackling prostate cancer analysis has not been previously explored. Motivated by this, in this study we introduce ProstateGAN, a GAN-based model for synthesizing realistic prostate diffusion imaging data. More specifically, in order to generate new diffusion imaging data corresponding to a particular cancer grade (Gleason score), we propose a conditional deep convolutional GAN architecture that takes Gleason scores into consideration during the training process. Experimental results show that high-quality synthetic prostate diffusion imaging data can be generated using the proposed ProstateGAN for specified Gleason scores.

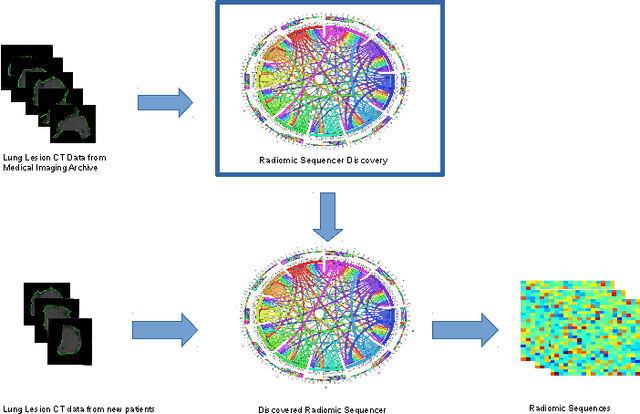

Discovery Radiomics via Evolutionary Deep Radiomic Sequencer Discovery for Pathologically-Proven Lung Cancer Detection

Oct 20, 2017

Abstract: While lung cancer is the second most diagnosed form of cancer in men and women, a sufficiently early diagnosis can be pivotal in patient survival rates. Imaging-based, or radiomics-driven, detection methods have been developed to aid diagnosticians, but largely rely on hand-crafted features which may not fully encapsulate the differences between cancerous and healthy tissue. Recently, the concept of discovery radiomics was introduced, where custom abstract features are discovered from readily available imaging data. We propose a novel evolutionary deep radiomic sequencer discovery approach based on evolutionary deep intelligence. Motivated by patient privacy concerns and the idea of operational artificial intelligence, the evolutionary deep radiomic sequencer discovery approach organically evolves increasingly more efficient deep radiomic sequencers that produce significantly more compact yet similarly descriptive radiomic sequences over multiple generations. As a result, this framework improves operational efficiency and enables diagnosis to be run locally at the radiologist's computer while maintaining detection accuracy. We evaluated the evolved deep radiomic sequencer (EDRS) discovered via the proposed evolutionary deep radiomic sequencer discovery framework against state-of-the-art radiomics-driven and discovery radiomics methods using clinical lung CT data with pathologically-proven diagnostic data from the LIDC-IDRI dataset. The evolved deep radiomic sequencer shows improved sensitivity (93.42%), specificity (82.39%), and diagnostic accuracy (88.78%) relative to previous radiomics approaches.

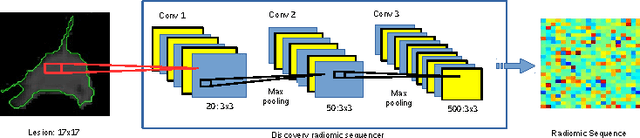

Discovery Radiomics for Pathologically-Proven Computed Tomography Lung Cancer Prediction

Mar 28, 2017

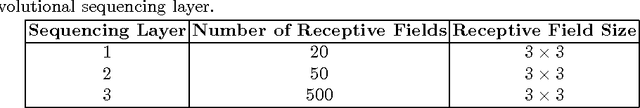

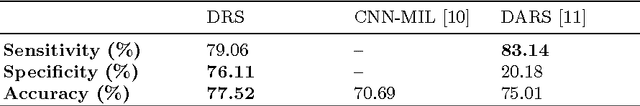

Abstract: Lung cancer is the leading cause of cancer-related deaths. As such, there is an urgent need for a streamlined process that allows radiologists to provide diagnoses with greater efficiency and accuracy. A powerful tool for this is radiomics: a high-dimensional imaging feature set. In this study, we take the idea of radiomics one step further by introducing the concept of discovery radiomics for lung cancer prediction using CT imaging data. We realize the custom radiomic sequencers as deep convolutional sequencers using a deep convolutional neural network learning architecture. To illustrate the prognostic power and effectiveness of the radiomic sequences produced by the discovered sequencer, we perform cancer prediction between malignant and benign lesions from 97 patients using the pathologically-proven diagnostic data from the LIDC-IDRI dataset. Using the clinically provided pathologically-proven data as ground truth, the proposed framework provided an average accuracy of 77.52% via 10-fold cross-validation, with a sensitivity of 79.06% and a specificity of 76.11%, surpassing the state-of-the-art method.
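
The three figures reported above (accuracy, sensitivity, specificity) all derive from the same confusion matrix. As a minimal sketch, assuming binary labels where 1 denotes a malignant lesion and 0 a benign one (the function name and label encoding are illustrative choices, not taken from the paper):

```python
def diagnostic_metrics(y_true, y_pred):
    """Sensitivity, specificity, and accuracy for binary labels (1 = malignant)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("need at least one malignant and one benign case")
    return {
        "sensitivity": tp / (tp + fn),  # fraction of malignant cases detected
        "specificity": tn / (tn + fp),  # fraction of benign cases correctly cleared
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
    }

y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 1, 0, 0, 0, 1, 0, 1]
print(diagnostic_metrics(y_true, y_pred))
```

Under k-fold cross-validation, these metrics would typically be computed per fold and then averaged, which is one way an "average accuracy" can arise.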

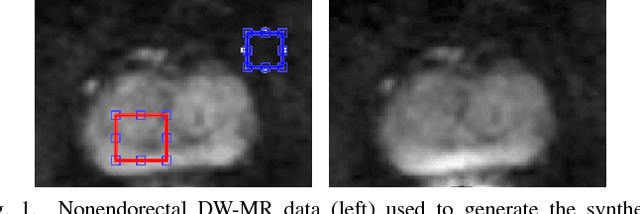

Noise-Compensated, Bias-Corrected Diffusion Weighted Endorectal Magnetic Resonance Imaging via a Stochastically Fully-Connected Joint Conditional Random Field Model

Jul 05, 2016

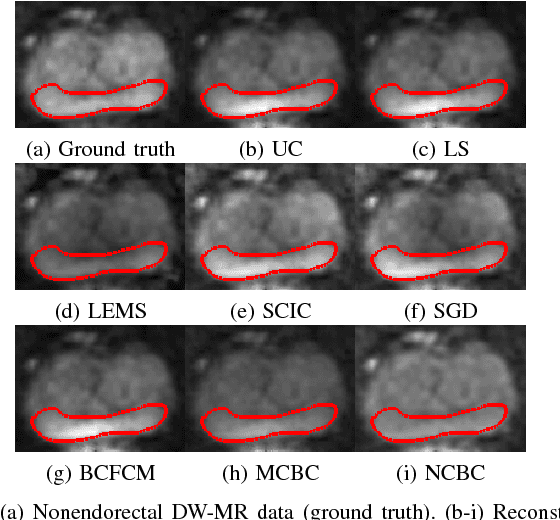

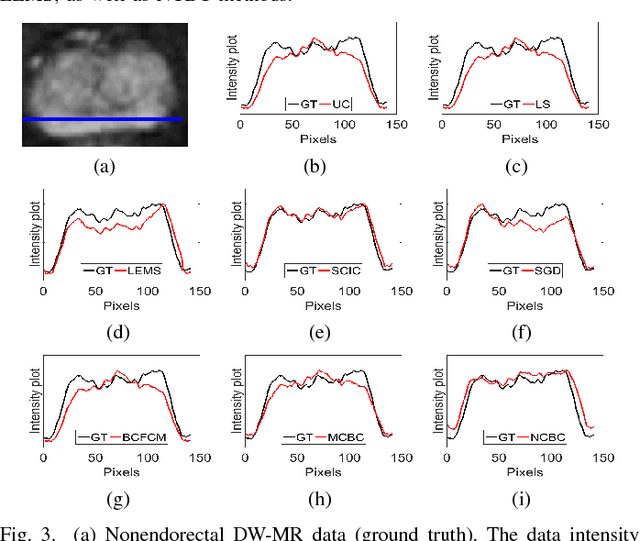

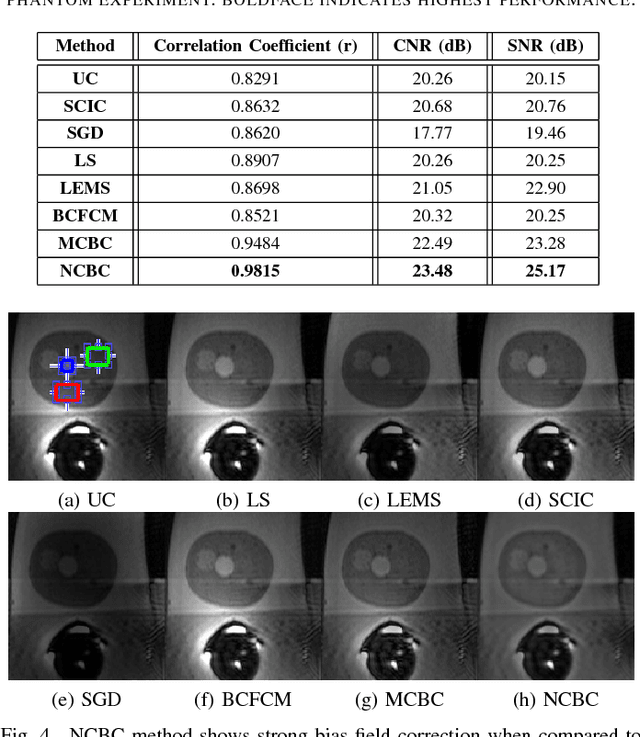

Abstract: Diffusion weighted magnetic resonance imaging (DW-MR) is a powerful tool in imaging-based prostate cancer screening and detection. Endorectal coils are commonly used in DW-MR imaging to improve the signal-to-noise ratio (SNR) of the acquisition, at the expense of significant intensity inhomogeneities (bias field) that worsen with distance from the endorectal coil. The presence of a bias field can have a significant negative impact on the accuracy of different image analysis tasks, as well as on prostate tumor localization, thus leading to increased inter- and intra-observer variability. Retrospective bias correction approaches have been introduced as a more efficient alternative to prospective methods, since they correct for both scanner-related and anatomy-related bias fields in MR imaging. Previously proposed retrospective bias field correction methods suffer from undesired noise amplification that can reduce the quality of the bias-corrected DW-MR image. Here, we propose a unified data reconstruction approach that enables joint compensation of bias field and data noise in DW-MR imaging. The proposed noise-compensated, bias-corrected (NCBC) data reconstruction method takes advantage of a novel stochastically fully connected joint conditional random field (SFC-JCRF) model to mitigate the effects of data noise and bias field in the reconstructed MR data. The proposed NCBC reconstruction method was tested on synthetic DW-MR data, a physical DW phantom, and real DW-MR data, all acquired using an endorectal MR coil. Both qualitative and quantitative analyses showed that the proposed NCBC method can achieve improved image quality when compared to the other tested bias correction methods. As such, the proposed NCBC method may have potential as a useful retrospective approach for improving the consistency of image interpretations.
