Carola-Bibiane Schonlieb

Beyond Supervised Classification: Extreme Minimal Supervision with the Graph 1-Laplacian

Jun 20, 2019

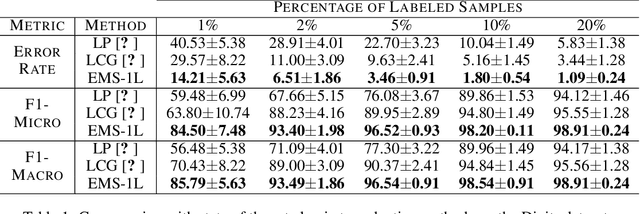

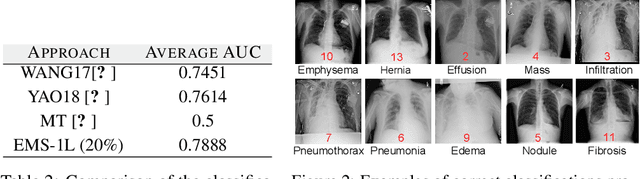

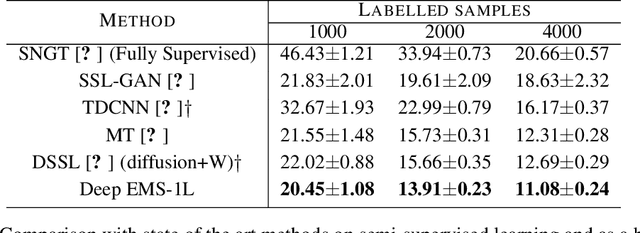

Abstract: We consider the task of classification when only an extremely small amount of labelled data is available. This problem is of great interest in several real-world applications, as obtaining large amounts of labelled data is expensive and time-consuming. We present a novel semi-supervised framework for multi-class classification that is based on the normalised and non-smooth graph 1-Laplacian. Our transductive framework is built on a novel functional with carefully selected class priors that enforces a sufficiently smooth solution and strengthens the intrinsic relation between the labelled and unlabelled data. We demonstrate through extensive experimental results on the large datasets CIFAR-10 and ChestX-ray14 that our method outperforms classic methods and readily competes with recent deep-learning approaches.

Compressed Sensing Plus Motion (CS+M): A New Perspective for Improving Undersampled MR Image Reconstruction

Oct 25, 2018

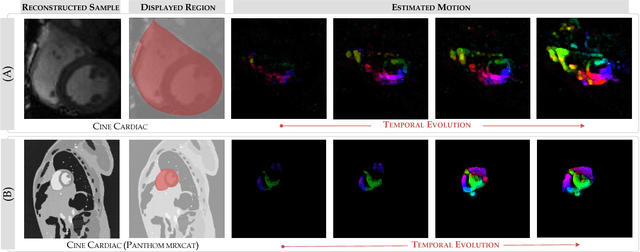

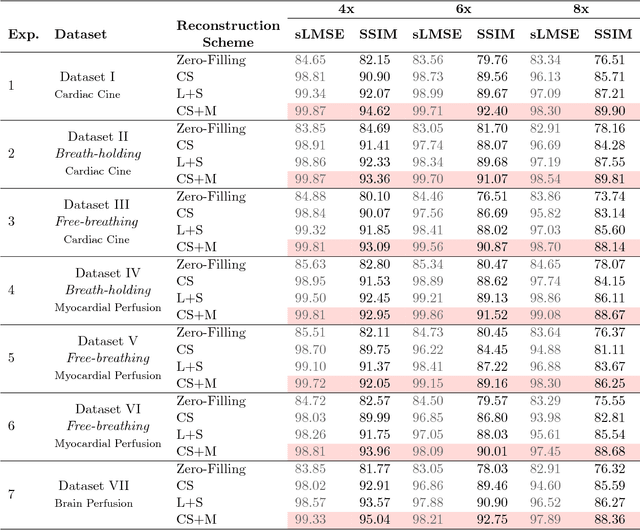

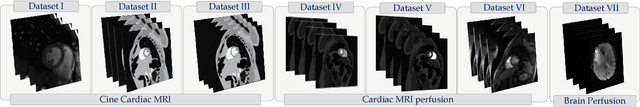

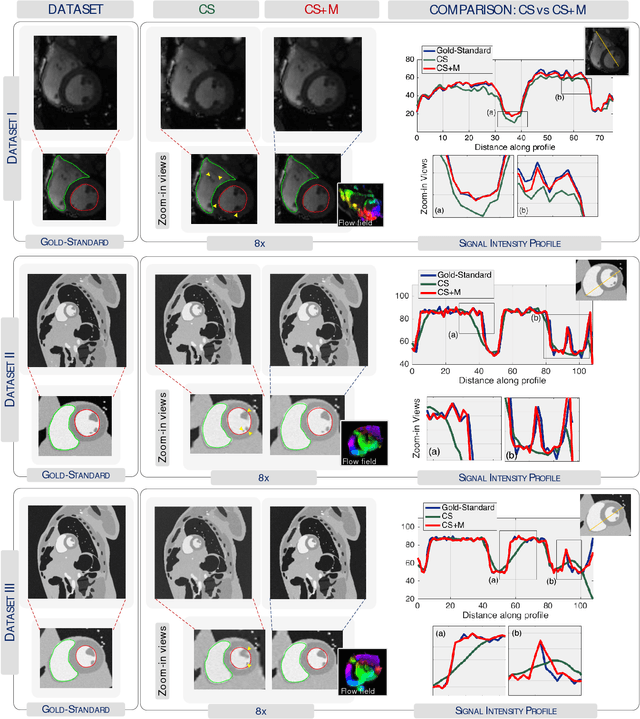

Abstract: Purpose: To obtain high-quality reconstructions from highly undersampled dynamic MRI data, with the goal of reducing the acquisition time and improving outcomes in clinical practice across a range of clinical applications. Theory and Methods: In dynamic MRI scans, the interaction between the target structure and the physical motion affects the acquired measurements. We exploit the strong repercussion of motion in MRI by proposing a variational framework, called Compressed Sensing Plus Motion (CS+M), that links in a single model, simultaneously and explicitly, the computation of the algorithmic MRI reconstruction and the physical motion. More precisely, we recast the image reconstruction and motion estimation problems as a single optimisation problem that is solved iteratively by breaking it up into two more computationally tractable problems. The potential and generalisation capabilities of our approach are demonstrated in different clinical applications including cardiac cine, cardiac perfusion and brain perfusion imaging. Results: The proposed scheme reduces blurring artefacts and preserves the target shape and fine details whilst achieving the lowest reconstruction error under high undersampling factors of up to 12x. This results in lower residual aliasing artefacts than the compared reconstruction algorithms. Overall, the results from our scheme exhibit more stable behaviour and generate a reconstruction closer to the gold standard. Conclusion: We show that incorporating physical motion into the CS computation yields a significant improvement in the MR image reconstruction, which is closer to the gold standard. This translates to higher reconstruction quality whilst requiring fewer measurements.

Linkage between Piecewise Constant Mumford-Shah model and ROF model and its virtue in image segmentation

Jul 26, 2018

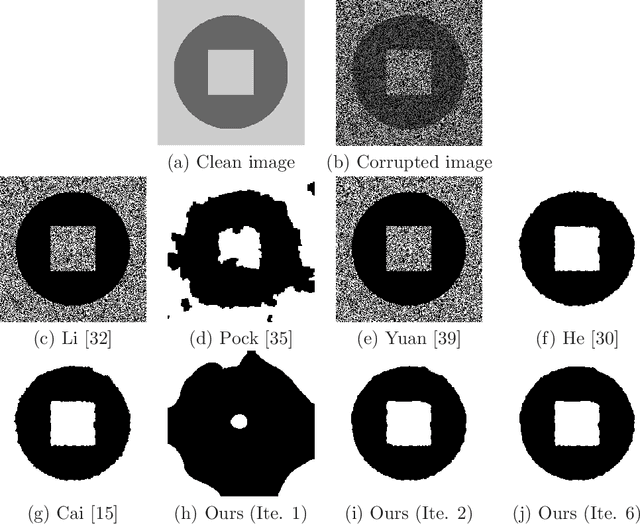

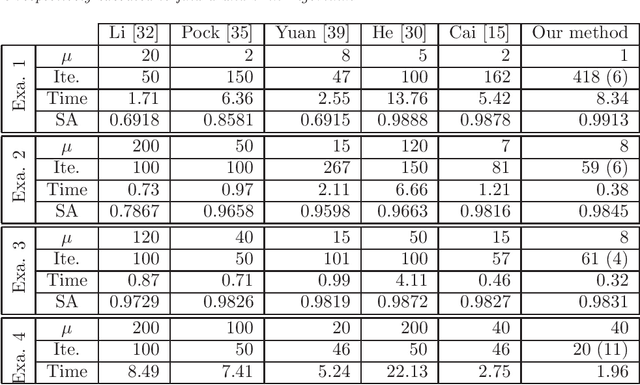

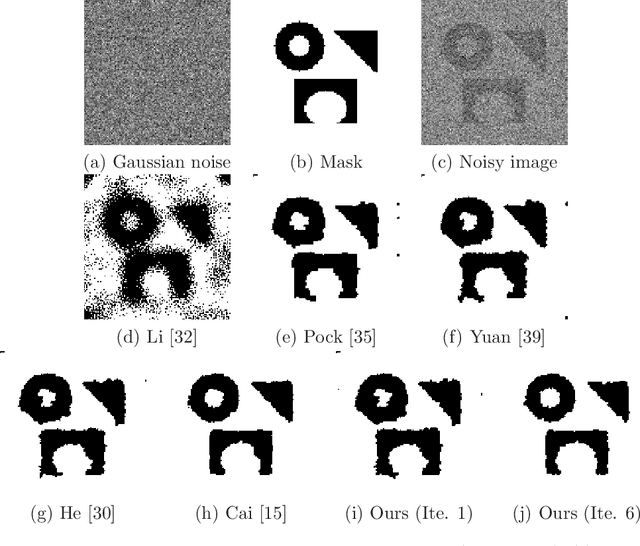

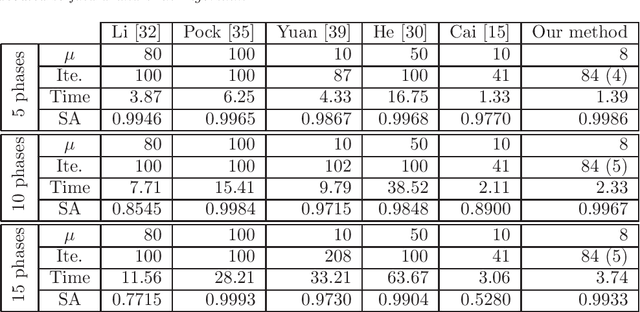

Abstract: The piecewise constant Mumford-Shah (PCMS) model and the Rudin-Osher-Fatemi (ROF) model are two of the most famous variational models in image segmentation and image restoration, respectively. They have ubiquitous applications in image processing. In this paper, we explore the linkage between these two important models. We prove that for the two-phase segmentation problem the optimal solution of the PCMS model can be obtained by thresholding the minimizer of the ROF model. This linkage is still valid for multiphase segmentation under mild assumptions. Thus it opens a new segmentation paradigm: image segmentation can be done via image restoration plus thresholding. This new paradigm circumvents the innate non-convexity of the PCMS model and therefore improves segmentation performance in both efficiency (much faster than state-of-the-art methods based on the PCMS model, particularly when the number of phases is high) and effectiveness (producing segmentation results of better quality), owing to the flexibility of the ROF model in tackling degraded images, such as noisy images, blurry images or images with information loss. As a by-product of the new paradigm, we derive a novel segmentation method, termed the thresholded-ROF (T-ROF) method, to illustrate the virtue of approaching image segmentation through image restoration techniques. The convergence of the T-ROF method under certain conditions is proved, and elaborate experimental results and comparisons are presented.
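
The "restoration plus thresholding" idea in the abstract above can be illustrated on a toy 1D signal. The following is a minimal sketch and not the authors' T-ROF implementation: it assumes a plain projected-gradient solver for the dual of the discrete 1D ROF problem and a simple midpoint-of-class-means threshold update. The function names `rof_denoise_1d` and `threshold_two_phase`, the weight `lam`, and the test signal are all hypothetical choices made for illustration.

```python
def rof_denoise_1d(f, lam, n_iter=2000, step=0.25):
    """Approximate argmin_u 0.5*||u - f||^2 + lam*TV(u) for a 1D signal.

    Uses projected gradient ascent on the dual variable p (one entry per
    neighbouring pair), with the primal recovered as u = f - D^T p.
    """
    n = len(f)
    p = [0.0] * (n - 1)
    u = list(f)
    for _ in range(n_iter):
        # Dual step along the forward difference of u, then clip to [-lam, lam].
        p = [max(-lam, min(lam, p[i] + step * (u[i + 1] - u[i])))
             for i in range(n - 1)]
        # Primal update: (D^T p)_i = p_{i-1} - p_i, with zero boundary values.
        u = [f[i] - ((p[i - 1] if i > 0 else 0.0) - (p[i] if i < n - 1 else 0.0))
             for i in range(n)]
    return u


def threshold_two_phase(u, n_iter=20):
    """Split u into two phases with a threshold set to the midpoint of the class means.

    This update rule is an assumption made for the sketch, not the rule from the paper.
    """
    tau = 0.5 * (min(u) + max(u))
    for _ in range(n_iter):
        lo = [v for v in u if v <= tau]
        hi = [v for v in u if v > tau]
        if not lo or not hi:
            break
        tau = 0.5 * (sum(lo) / len(lo) + sum(hi) / len(hi))
    return [1 if v > tau else 0 for v in u], tau


# Toy two-phase signal with one outlier in each phase.
f = [0.0] * 10 + [1.0] * 10
f[3] = 0.7
f[14] = 0.3

naive = [1 if v > 0.5 else 0 for v in f]        # thresholding the raw signal
u = rof_denoise_1d(f, lam=0.3)                  # restoration step
labels, tau = threshold_two_phase(u)            # thresholding step
```

Thresholding the raw signal mislabels the two outliers, whereas thresholding the ROF-smoothed signal assigns every sample to the phase of its neighbours.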

A graph cut approach to 3D tree delineation, using integrated airborne LiDAR and hyperspectral imagery

Jan 24, 2017

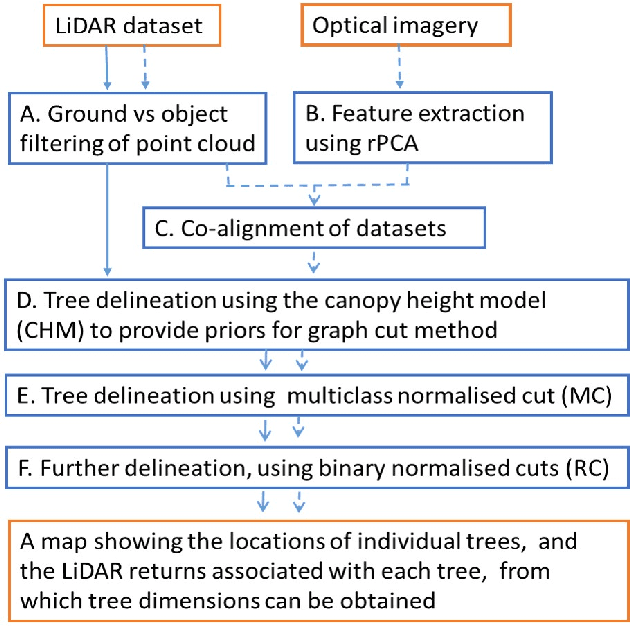

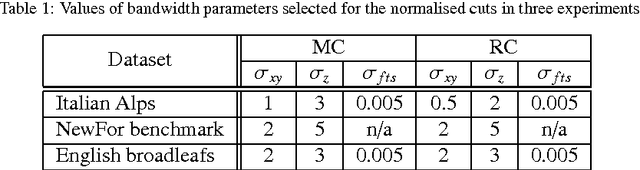

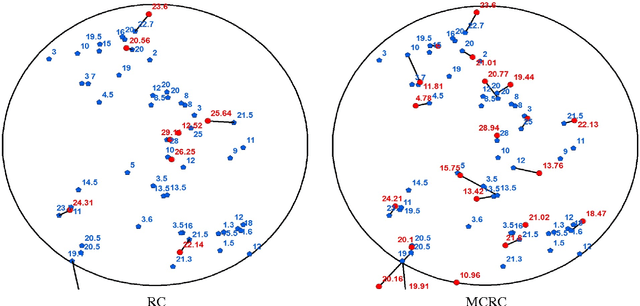

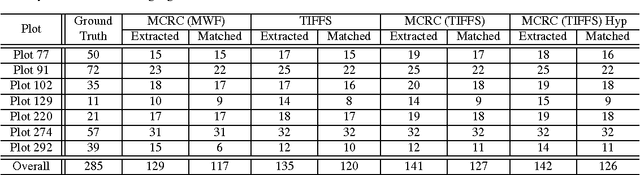

Abstract: Recognising individual trees within remotely sensed imagery has important applications in forest ecology and management. Several algorithms for tree delineation have been suggested, mostly based on locating local maxima or inverted basins in raster canopy height models (CHMs) derived from Light Detection And Ranging (LiDAR) data or photographs. However, these algorithms often lead to inaccurate estimates of forest stand characteristics due to the limited information content of raster CHMs. Here we develop a 3D tree delineation method which uses graph cut to delineate trees from the full 3D LiDAR point cloud, and also makes use of any optical imagery available (hyperspectral imagery in our case). First, conventional methods are used to locate local maxima in the CHM and generate an initial map of trees. Second, a graph is built from the LiDAR point cloud, fused with the hyperspectral data. For computational efficiency, the feature space of hyperspectral imagery is reduced using robust PCA. Third, a multi-class normalised cut is applied to the graph, using the initial map of trees to constrain the number of clusters and their locations. Finally, recursive normalised cut is used to subdivide, if necessary, each of the clusters identified by the initial analysis. We call this approach Multiclass Cut followed by Recursive Cut (MCRC). The effectiveness of MCRC was tested using three datasets: i) NewFor, ii) a coniferous forest in the Italian Alps, and iii) a deciduous woodland in the UK. The performance of MCRC was usually superior to that of other delineation methods, and was further improved by including high-resolution optical imagery. Since MCRC delineates the entire LiDAR point cloud in 3D, it allows individual crown characteristics to be measured. By making full use of the data available, graph cut has the potential to considerably improve the accuracy of tree delineation.

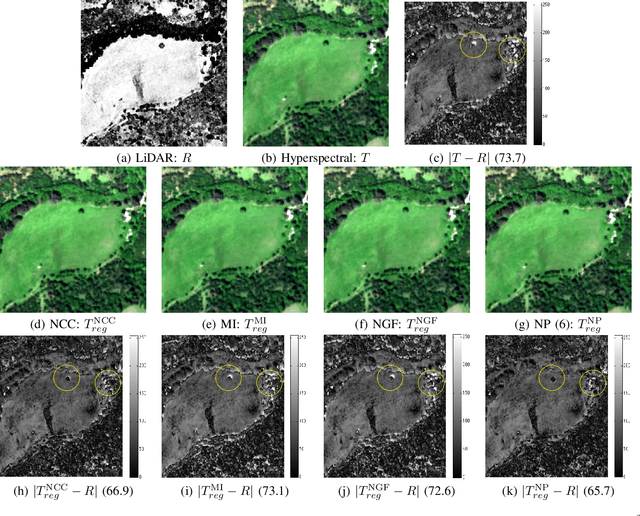

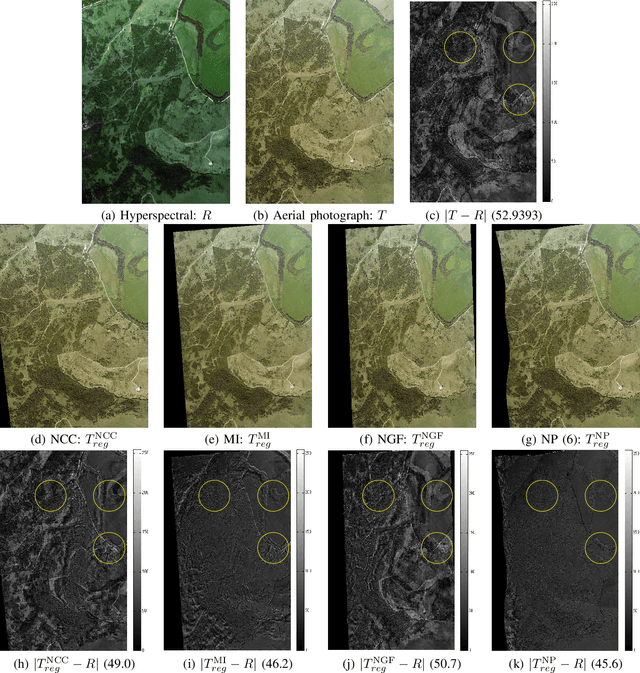

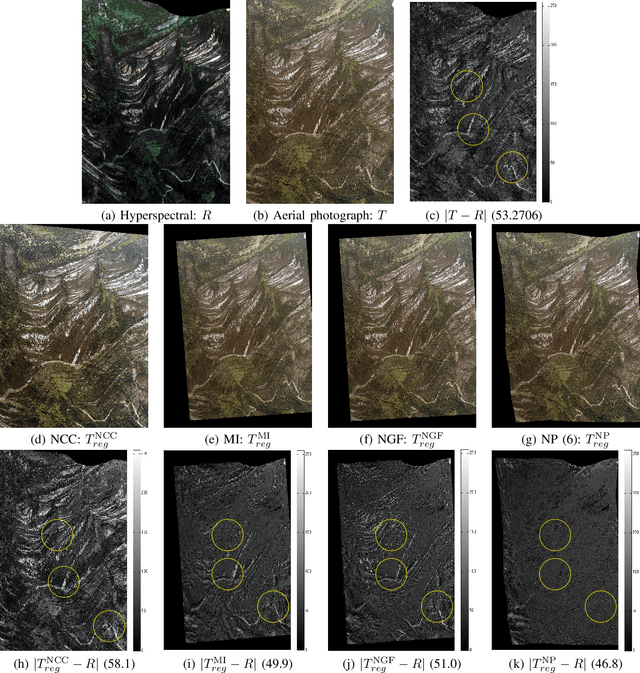

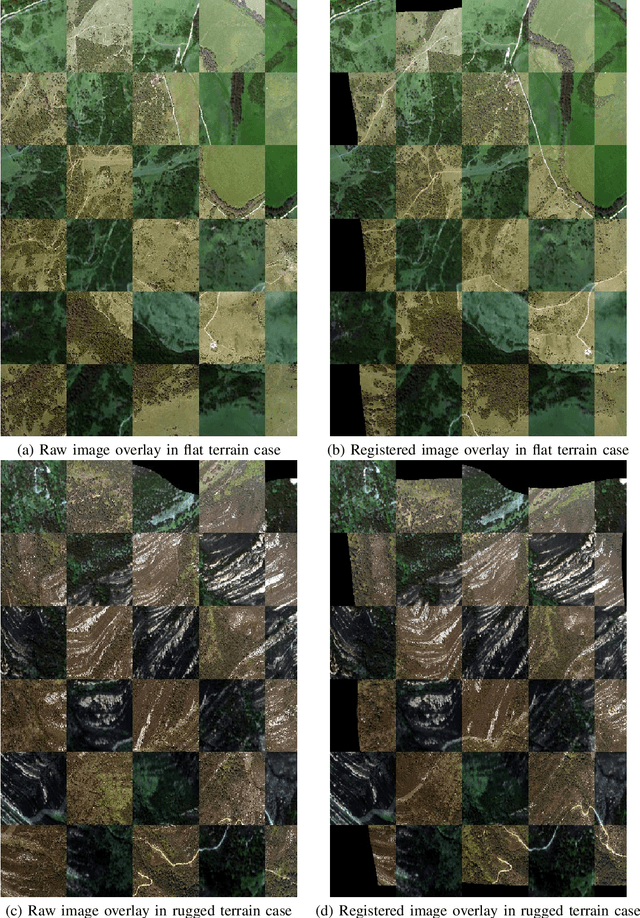

Non-parametric Image Registration of Airborne LiDAR, Hyperspectral and Photographic Imagery of Forests

Jul 28, 2014

Abstract: There is much current interest in using multi-sensor airborne remote sensing to monitor the structure and biodiversity of forests. This paper addresses the application of non-parametric image registration techniques to precisely align images obtained from multimodal imaging, which is critical for the successful identification of individual trees using object recognition approaches. Non-parametric image registration, in particular the technique of optimizing one objective function containing data fidelity and regularization terms, provides flexible algorithms for image registration. Using a survey of woodlands in southern Spain as an example, we show that non-parametric image registration can be successful at fusing datasets when there is little prior knowledge about how the datasets are interrelated (i.e. in the absence of ground control points). The validity of non-parametric registration methods in airborne remote sensing is demonstrated by a series of experiments. Precise data fusion is a prerequisite to accurate recognition of objects within airborne imagery, so non-parametric image registration could make a valuable contribution to the analysis pipeline.
